There is no shortage of pilots and platforms when it comes to Agentic AI in higher education. Predictive systems for enrollment, autonomous nudges in advising, automated grading assistants. These are all AI technologies that promise to support students and staff. But here’s the problem: too often, these systems end up unused, distrusted, or eventually disabled.

Not because the AI is bad. But because the workflow around it doesn’t work.

Faculty don’t see the rationale. Students can’t intervene. Staff don’t know when to step in. The design fails not at the level of technology but at the level of trust, clarity, and continuity.

This moment in edtech doesn’t ask for more intelligence. It asks for more design: design that honors institutional nuance, invites human intervention, and adapts to the messiness of academic life.

The good news? Fixing this doesn’t require better models. It requires better workflows.

This post discusses four practical design principles for implementing AI in higher education so that Agentic AI tools don’t just run; they fit. Fit into academic decision-making. Fit into human routines. Fit into the complex, non-linear lives of students. From transparency and memory to human backup, these are the patterns that drive AI adoption in education without burning out your staff or confusing your students.

1. Design for Transparency at Every Step

If students, faculty, or staff can’t see how an agent made a recommendation—or why—it won’t get used. Trust in Agentic AI doesn’t begin with performance. It begins with legibility.

Academic workflows often involve discretionary logic: Should a student drop a course? Is a regrade justified? Is an application incomplete, or just unconventional? When Agentic AI automates these decisions without surfacing the rationale, it erodes trust. Even if the answer is technically right.

Recent data confirms the disconnect. According to the 2025 EDUCAUSE AI Landscape Study, fewer than 40% of U.S. institutions had formal AI use policies. Meanwhile, 59% of faculty leaders cited data privacy and transparency as major concerns, as reported by Ellucian. Across the Atlantic, only 44% of UK students felt involved in decisions about their institution’s digital learning tools (Jisc, 2022-23). Even students themselves are unsure: 31% of U.S. undergraduates say they don’t understand when AI is allowed in their coursework (Inside Higher Ed, 2024). That uncertainty reflects a broader lack of transparency in institutional AI usage policies.

Designing for transparency means making each step visible, whether it’s showing a GPA threshold, highlighting the data source behind a flag, or explaining how an admissions agent scored a candidate’s extracurricular profile. Think audit trail meets academic logic. This kind of visibility is the foundation of transparency in AI-driven student support systems, where rationale isn’t hidden in backend logic but shown at the point of decision.

For example, if an advising agent flags a student for dropping below full-time status, the interface should show the GPA impact, credit implications, and any human override options available. Not after the fact but in the moment.
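To make that concrete, here is a minimal sketch of a flag that carries its own rationale. It is an illustration, not any vendor’s implementation: the `AdvisingFlag` class, its field names, and the 12-credit full-time threshold are all assumptions chosen for the example, and real thresholds vary by institution.

```python
from dataclasses import dataclass, field


@dataclass
class AdvisingFlag:
    """A flag that carries its own rationale. All names here are hypothetical."""
    student_id: str
    reason: str
    data_sources: list[str]
    credits_before: int
    credits_after: int
    gpa_impact_note: str = "not assessed"
    full_time_threshold: int = 12  # assumed value; institutions differ
    override_options: list[str] = field(default_factory=list)

    def drops_below_full_time(self) -> bool:
        # True only when the change crosses the threshold downward.
        return self.credits_before >= self.full_time_threshold > self.credits_after

    def explain(self) -> str:
        """Render the rationale shown next to the flag, at the point of decision."""
        lines = [
            f"Flag: {self.reason}",
            f"Credits: {self.credits_before} -> {self.credits_after} "
            f"(full-time threshold: {self.full_time_threshold})",
            f"GPA impact: {self.gpa_impact_note}",
            "Data sources: " + ", ".join(self.data_sources),
            "Override options: " + (", ".join(self.override_options) or "none"),
        ]
        return "\n".join(lines)


flag = AdvisingFlag(
    student_id="S-001",
    reason="Dropping below full-time status",
    data_sources=["registrar enrollment record"],
    credits_before=12,
    credits_after=9,
    override_options=["Advisor approval", "Student appeal"],
)
print(flag.explain())
```

The point of the design is that the explanation travels with the flag itself, so whoever sees the flag also sees the threshold, the data behind it, and the ways to override it.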

This kind of design is already being implemented in platforms like FD Ryze, which allows faculty to query AI-generated summaries and eligibility flags in natural language, complete with explainable criteria and secure audit trails. The goal isn’t to expose the algorithm; it’s to embed accountability into the everyday, which is essential to building ethical AI in education.

In this industry, opacity isn’t just a UX flaw. It’s an adoption blocker.

2. Don’t Automate What Requires Judgment

Agentic AI is built to act autonomously. But in higher ed, not every action should be taken without pause.

When it comes to workflow automation in education, many institutions rush to automate workflows that actually depend on academic judgment: flagging students for probation, recalculating aid eligibility, or assigning placement levels. The problem isn’t that AI gets it wrong. It’s that it gets it alone.

In contexts shaped by nuance, policy exceptions, and individual histories, design needs to build in trigger points, not shortcuts. If a financial aid agent is unsure whether a student qualifies for need-based reconsideration, the right move isn’t to auto-deny. It’s to escalate.
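A trigger point like this can be sketched in a few lines. Everything below is hypothetical: the `route_aid_decision` function, the 0.9 confidence threshold, and the choice to send even confident denials to a person are assumptions made for illustration, not a description of any real aid system.

```python
from enum import Enum


class Route(Enum):
    AUTO_APPROVE = "auto_approve"
    ESCALATE = "escalate_to_human"


def route_aid_decision(
    qualifies: bool,
    confidence: float,
    has_policy_exception: bool = False,
    threshold: float = 0.9,  # assumed cutoff; tune per institution
) -> Route:
    """Route a reconsideration request. There is deliberately no auto-deny path."""
    # Uncertainty or a policy exception always goes to a human reviewer.
    if has_policy_exception or confidence < threshold:
        return Route.ESCALATE
    if qualifies:
        return Route.AUTO_APPROVE
    # Assumption of this sketch: a confident "no" is still reviewed by a person.
    return Route.ESCALATE


print(route_aid_decision(qualifies=True, confidence=0.95))
print(route_aid_decision(qualifies=False, confidence=0.95))
print(route_aid_decision(qualifies=True, confidence=0.60))
```

Notice that the enum has no denial route at all. The agent can approve clear cases on its own, but anything uncertain, exceptional, or negative lands in front of a person.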

This is the design logic behind integrating AI with human oversight in higher ed workflows—a model that privileges context over blind automation.

The risks are especially high in aid processing. In 2024, technical errors in the new FAFSA system corrupted data for up to 20% of applicants (roughly one million students), delaying awards and triggering widespread confusion and corrections. Additionally, 16% of the 7 million FAFSA forms submitted this year required student-driven fixes due to errors or inconsistencies. When AI workflows make decisions based on flawed inputs and without human checks, the result isn’t efficiency. It’s fallout.

This is where many designs fall apart. They skip over ambiguity rather than respecting it. But ambiguity is not a flaw. It’s a feature of academic life. And when workflows route complex cases to human review, institutions maintain both fairness and trust.

For example, some U.S. universities are redesigning academic standing workflows so that when an agent flags a student for dismissal, it prompts an advisor to review attendance trends, family issues, or health accommodations before any action is taken.

When it comes to Agentic AI in education, real trust—and truly AI-enhanced academic decision making—isn’t built by replacing judgment. It’s built by knowing when to defer to it.

3. Build Feedback Loops That Learn

Most academic AI systems operate in closed loops: data goes in, decisions come out. But the system doesn’t adjust unless a developer steps in. That might work for static dashboards. It doesn’t work for Agentic AI.

Agentic systems need to grow with every interaction. If a student disputes a grade, a faculty member overrides an advising recommendation, or staff revoke a financial aid decision, the system should register that as a signal, not an exception.

But design matters. Feedback mechanisms shouldn’t be buried in admin portals or left to surveys. They need to be part of the workflow: “Was this recommendation helpful?” “Reason for override?” “Faculty comments attached. Update logic?”
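One way to treat overrides as signal is to log each one with its reason and surface the agent rules that people keep correcting. The sketch below assumes hypothetical names throughout (`OverrideEvent`, `FeedbackLog`, `rule_id`) and an arbitrary review threshold of three overrides.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class OverrideEvent:
    recommendation_id: str
    rule_id: str        # which piece of agent logic produced the recommendation
    overridden_by: str  # role, e.g. "faculty" or "advisor"
    reason: str         # captured in the workflow, not in a separate survey


class FeedbackLog:
    def __init__(self, review_after: int = 3):
        self.review_after = review_after  # assumed threshold for this sketch
        self.events: list[OverrideEvent] = []

    def record(self, event: OverrideEvent) -> None:
        self.events.append(event)

    def rules_needing_review(self) -> list[str]:
        """Rules overridden at least `review_after` times, sorted by rule id."""
        counts = Counter(e.rule_id for e in self.events)
        return sorted(rule for rule, n in counts.items() if n >= self.review_after)


log = FeedbackLog()
for i in range(3):
    log.record(OverrideEvent(f"rec-{i}", "late-drop-warning", "advisor",
                             "Student had an approved accommodation"))
log.record(OverrideEvent("rec-9", "probation-flag", "faculty", "Grade was corrected"))
print(log.rules_needing_review())
```

A log like this does not retrain anything by itself. It simply makes repeated human corrections visible, so someone can decide whether the underlying logic should change.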

And faculty want this. According to the 2025 Global AI Faculty Survey, over half (54%) believe current student evaluation methods are no longer adequate in the age of AI, and 13% call for a complete revamp. Without responsive systems that learn from faculty input, the disconnect only widens.

Real feedback is the foundation of sustainable higher ed automation. It isn’t just about fixing errors. It’s about tuning AI capabilities to reflect institutional norms. And when the system reaches its limits? That’s where visible human fallback matters. Prompts like “Need help?” or “Escalate to advisor” shouldn’t feel like afterthoughts. They’re part of the feedback loop, and they guide how the system evolves.

Because feedback loops aren’t just for learning. They’re for trust-building in AI-powered education.

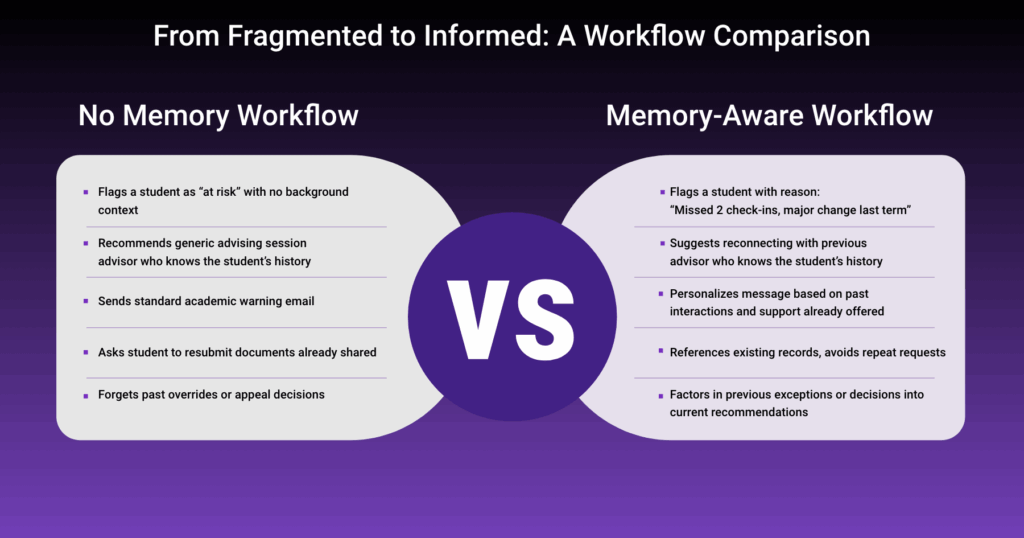

4. Design Memory into the Workflow

Higher ed decisions aren’t made in isolation, and neither should your AI workflows.

A student flagged as “at risk” in their third semester has a story that likely began much earlier. Maybe they switched majors. Maybe they filed three advising requests. Maybe they’re caring for a family member. But most systems treat every workflow as a blank slate. No memory. No continuity. No context.

Agentic AI only works when it remembers what came before.

That doesn’t mean storing every detail. It means building workflows that preserve institutional memory across advising, enrollment, academic standing, and support services. Context like prior overrides, intervention history, or advisor notes should carry forward, not disappear at the next decision point.
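As a rough sketch of what carrying context forward could look like, the example below keeps a small, typed history per student and lets each workflow pull only the kinds of entries it needs. The `StudentContext` and `ContextEntry` classes, the entry kinds, and the sample data are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class ContextEntry:
    when: date
    workflow: str  # e.g. "advising", "academic_standing"
    kind: str      # e.g. "override", "intervention", "advisor_note"
    summary: str


@dataclass
class StudentContext:
    """Shared memory that follows the student across workflows."""
    student_id: str
    entries: list[ContextEntry] = field(default_factory=list)

    def remember(self, entry: ContextEntry) -> None:
        self.entries.append(entry)

    def relevant_history(self, kinds: set[str], limit: int = 5) -> list[ContextEntry]:
        """Most recent entries of the requested kinds, newest first."""
        matches = [e for e in self.entries if e.kind in kinds]
        return sorted(matches, key=lambda e: e.when, reverse=True)[:limit]


ctx = StudentContext("S-001")
ctx.remember(ContextEntry(date(2024, 9, 10), "advising", "advisor_note",
                          "Switched majors"))
ctx.remember(ContextEntry(date(2025, 2, 3), "academic_standing", "override",
                          "Probation waived by advisor"))
ctx.remember(ContextEntry(date(2025, 3, 1), "support", "intervention",
                          "Referred to tutoring"))

# An academic standing review pulls prior overrides and interventions only.
for entry in ctx.relevant_history({"override", "intervention"}):
    print(entry.when, entry.workflow, entry.summary)
```

Filtering by kind and capping the result with `limit` reflects the idea that memory is selective: the next decision point sees what matters, not every detail ever recorded.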

UK data reinforces this need. A University College London study analyzing Moodle engagement found that early-semester activity is a strong predictor of performance, which means systems that carry a student’s status from week 1 through to follow-up actions can prevent disengagement before it becomes irreversible.

Designing with memory also reduces fatigue, both for staff re-entering the same data and for students forced to re-explain themselves. It also plays a critical role in improving student engagement with AI workflows, by making interactions feel relevant, responsive, and connected to each student’s journey. AI becomes a collaborator in continuity. Not just a fragmented tool, but an AI assistant that supports institutional memory.

The Future Isn’t Smarter AI. It’s Smarter Design.

The promise of Agentic AI isn’t just automation. It’s alignment: alignment that drives institutional agility, staff efficiency, and, ultimately, better learning outcomes through AI-powered workflow automation.

That’s why the real challenge is architectural and not merely technical.

If workflows aren’t built to show their thinking, remember what came before, or leave room for human interpretation, the system won’t scale. It’ll stall, no matter how advanced the model.