Why the most competitive enterprises now treat AI inference like mission-critical architecture.

Every time a bank approves a loan in seconds, a fraud alert flashes mid-transaction, or a hospital system flags an early-stage diagnosis, you’re seeing AI inference in action. It’s the point where an algorithm stops studying data and starts acting on it, applying what it learned during training to brand-new, real-world inputs.

This is where AI leaves the lab and enters the economy. It’s also where impact and risk coexist. A model that once lived in spreadsheets now shapes financial access, healthcare decisions, and hiring outcomes. Sometimes within milliseconds.

We see inference as the moment AI proves its worth. Training gives a model potential; inference turns that potential into business results. But that’s also where the stakes rise: biased or unstable outputs do more than just skew accuracy metrics. They affect lives, compliance, and brand trust.

Understanding how inference works, how it fails, and how to govern it responsibly can be the difference between AI that scales and AI that fails spectacularly in production.

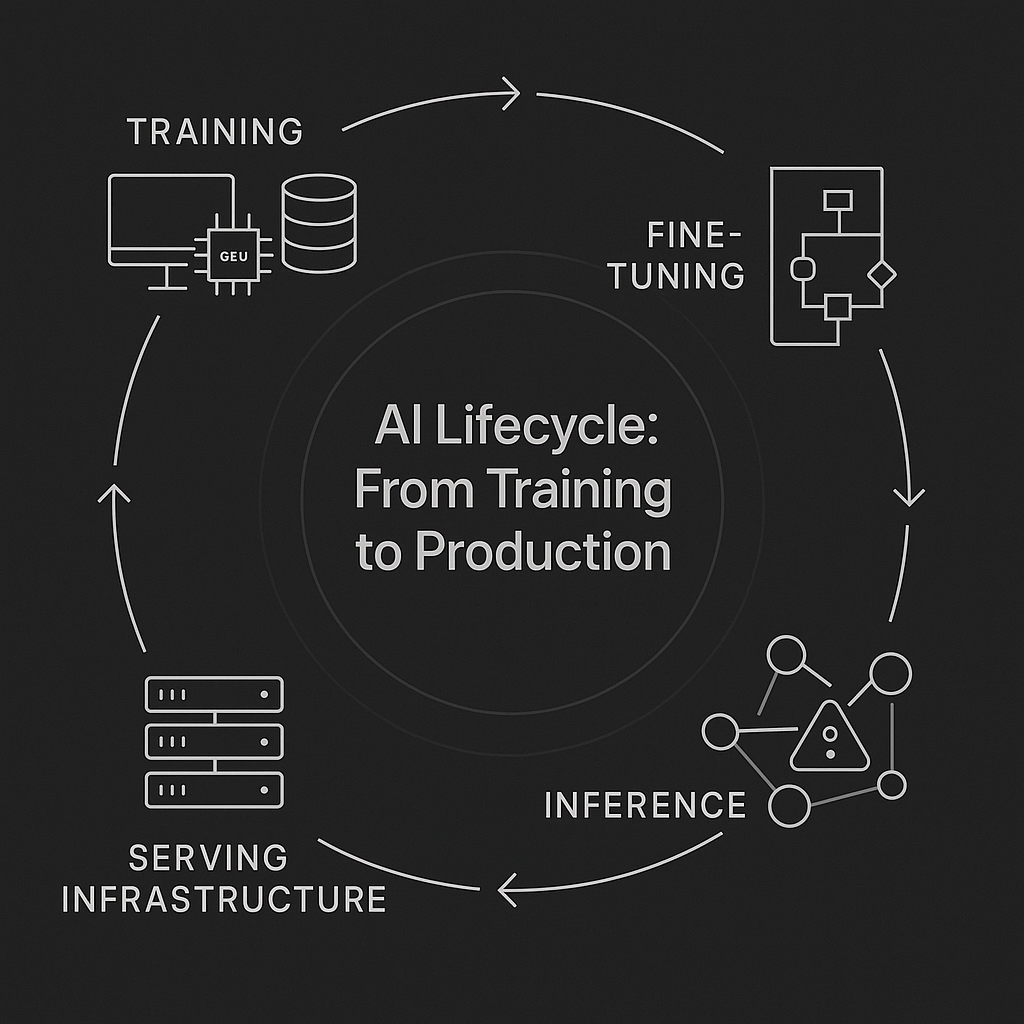

The AI Lifecycle: From Training to Production

AI systems don’t appear fully formed. They evolve through a cycle that begins with training, moves through fine-tuning, and culminates in inference (the stage where models start making real-world decisions). Around this core sits serving infrastructure, the production layer that keeps everything running and learning.

Training builds the foundation. Massive datasets and high-performance GPUs teach a model to recognize patterns: what makes a transaction look fraudulent, how to read sentiment, or how a tumor appears in an image.

Fine-tuning turns that general intelligence into domain expertise. Using smaller, targeted datasets, teams adapt models to their environment: medical terms for healthcare, legal phrasing for contracts, proprietary signals for fraud detection.

Inference is the moment of truth. The model applies what it knows to unseen data, scoring risk, recommending action, or generating insight within milliseconds.

What makes it all possible is the serving environment, which manages model versions, balances load, and monitors performance. In mature systems, inference doesn’t end the cycle but feeds it: each prediction, once paired with its real-world outcome, becomes new data for the next round of training.

Inference at Scale: The Operational Reality

Training gets the spotlight, but inference is where the bills pile up. Once live, a model may make predictions millions of times a day: approving credit lines, analyzing scans, personalizing storefronts. Each decision consumes compute, bandwidth, and time, and over months those milliseconds add up to serious operational cost.

That’s why optimizing inference matters as much as building the model itself. Teams use a toolkit of techniques to keep it fast, efficient, and affordable:

- Quantization lowers numerical precision, for example from 32-bit floats to 8-bit integers, cutting weight memory roughly fourfold and speeding computation. It trades a slight dip in accuracy for up to 3–4x faster inference on supporting hardware, which is vital for edge devices and high-volume APIs.

- Model pruning trims away redundant weights, neurons, or layers. In favorable cases it can make a model about 60 percent smaller while retaining roughly 98 percent of its accuracy, a huge gain for mobile and embedded deployments.

- Knowledge distillation trains a smaller “student” model to mimic a larger “teacher,” capturing most of its capability while running leaner and cheaper.

- Batching strategies group multiple inference requests together to make full use of GPU capacity. Batching boosts throughput but adds latency, because early requests wait for the batch to fill: the classic trade-off every engineer negotiates.

- Hardware acceleration, from inference-optimized GPUs to neuromorphic chips, pushes performance further by matching chip architecture to the workload.
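To make the quantization trade-off concrete, here is a minimal sketch of symmetric, per-tensor INT8 quantization in plain Python. It assumes a single shared scale for the whole tensor; production toolchains typically add per-channel scales and activation calibration, so treat this as an illustration of the idea rather than any framework’s implementation.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: one float scale maps weights to [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # any scale works for an all-zero tensor
    # Round to the nearest integer step and clamp to the signed 8-bit range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Approximate the original float weights from int8 values and the scale."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.42, -1.27, 0.003, 0.9, -0.55]
    q, scale = quantize_int8(weights)
    restored = dequantize_int8(q, scale)
    # In a real tensor, each stored value shrinks from 4 bytes (float32) to 1 byte (int8).
    print("int8 values:", q)
    print("max abs error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

The rounding error per weight stays within half the scale, which is why accuracy usually dips only slightly; outlier weights inflate the scale and the error, one reason real toolchains prefer per-channel scales.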

How you deploy inference shapes everything: cloud APIs offer flexibility, edge deployments offer speed, and hybrid approaches balance both. The goal is consistent: minimize latency, maximize return. When done right, inference becomes not just faster but smarter, as its predictions and their real-world outcomes feed back into continuous improvement.

Real-World Applications: Where Inference Drives Value

Inference isn’t an abstract process; it’s the machinery behind decisions that now shape industries. Across sectors, it’s what turns trained intelligence into live outcomes, often at speeds that make human review impossible.

Banking: Real-Time Fraud Detection

Every time you tap “pay,” a model is already at work. AI systems analyze transaction features, such as amount, location, merchant, device ID, and timing, in milliseconds to infer risk. IBM notes that these systems recognize subtle patterns that distinguish legitimate behavior from fraud long before manual review could. The challenge is keeping pace with adversaries: as fraud patterns evolve, so must the model. Many banks now retrain models weekly or even daily to counter drift.

Healthcare: Predictive Clinical Alerts

Hospitals use inference to turn streaming data into early-warning signals. Johns Hopkins’ TREWS platform identified 82 percent of sepsis cases earlier than conventional methods, cutting treatment delays by nearly two hours. Google’s diagnostic AI infers disease presence from medical imaging, flagging diabetic retinopathy at scale. Each prediction triggers a care workflow that can save lives, but only if models stay calibrated to shifting patient populations and changing equipment.

Hiring: AI-Assisted Recruitment

Automation is now the recruiter’s first filter. According to Jobscan’s 2025 ATS Usage Report and B2B Reviews’ Hiring Trends survey, 98.4 percent of Fortune 500 companies use applicant-tracking systems such as Workday, SuccessFactors, or Oracle Taleo. Most of these platforms embed AI-assisted modules that parse resumes, rank candidates, and flag best fits, a process Deloitte estimates is active in roughly 70 percent of large enterprises. It saves time and cost, but it can also amplify whatever bias exists in the training data. Without human oversight and fairness checks, the same efficiency that accelerates hiring can quietly replicate discrimination.

Across all three examples, the lesson is consistent: inference only adds value when its feedback loops are healthy, when each decision feeds learning, governance, and recalibration rather than unchecked automation.

Inference Failure Modes: What Goes Wrong in Production

Even well-trained models falter once they face the real world. These are the failure modes that matter most in production, along with how mature AI teams contain them:

1. Model Drift: When Reality Shifts

Definition: Data in production drifts from what the model learned. Behavior, markets, or demographics change, and predictions lose accuracy.

Impact: Credit models trained on 2019 data faltered badly in 2020, when pandemic-era spending and unemployment broke historical patterns.

Response: Track accuracy, recall, and confidence over time. Trigger automated retraining or human review when performance deviates.
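One common way to watch input distributions for drift is the Population Stability Index (PSI), which compares how a feature’s values fall into bins in training data versus live traffic. The sketch below is a hypothetical helper, not a specific library’s API; the 0.1 and 0.25 thresholds are widely cited rules of thumb, assumed here to map onto “human review” and “retrain” and worth tuning per feature.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index of `actual` (live) against `expected` (training).

    Bins are equal-width over the training range; live values outside that range
    are clamped into the edge bins. A small epsilon avoids log(0) for empty bins.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back if the training values are constant

    def shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        return [c / len(values) for c in counts]

    eps = 1e-6
    return sum(
        (a - e) * math.log((a + eps) / (e + eps))
        for e, a in zip(shares(expected), shares(actual))
    )


def drift_action(score: float, review_at: float = 0.1, retrain_at: float = 0.25) -> str:
    """Map a PSI score to an action (assumed thresholds, tune per feature)."""
    if score >= retrain_at:
        return "retrain"
    if score >= review_at:
        return "review"
    return "ok"


if __name__ == "__main__":
    training = [i / 100 for i in range(100)]
    live = [0.5 + i / 200 for i in range(100)]  # live traffic crowded into the upper half
    score = psi(training, live)
    print(f"PSI={score:.2f} -> {drift_action(score)}")
```

Running a check like this per feature on a schedule, alongside accuracy and recall once labels arrive, catches drift that outcome metrics only reveal weeks later.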

2. Bias Amplification: When Inference Discriminates

Definition: Biased data becomes biased decisions at scale.

Impact: In published tests, AI resume screeners favored white-sounding names 85% of the time versus 8.6% for Black-sounding ones, and loan models approved white borrowers 8.5% more often for identical profiles. Gaps like these can breach anti-discrimination law and create exposure under GDPR Article 22 and the EU AI Act.

Response: Audit fairness by demographic groups using frameworks like Fairlearn or Aequitas. Apply adversarial debiasing or threshold adjustments, and document fairness metrics in transparency reports.
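A fairness audit starts by disaggregating outcomes. This minimal sketch computes per-group selection rates, the gap between them, and their ratio, checked against the four-fifths rule from U.S. employment-selection guidelines. The function names and record format are illustrative assumptions; Fairlearn provides comparable metrics such as `demographic_parity_difference` for production use.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple, Union


def selection_rates(records: Iterable[Tuple[str, int]]) -> Dict[str, float]:
    """Share of positive decisions (1 = approved or shortlisted) for each group."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def disparity_report(records: Iterable[Tuple[str, int]]) -> Dict[str, Union[float, bool]]:
    """Compare the best- and worst-treated groups."""
    rates = selection_rates(records)
    best, worst = max(rates.values()), min(rates.values())
    ratio = worst / best if best > 0 else 1.0
    return {
        "demographic_parity_difference": best - worst,
        "disparate_impact_ratio": ratio,
        # Four-fifths rule: flag if any group's rate is below 80% of the highest.
        "passes_four_fifths_rule": ratio >= 0.8,
    }


if __name__ == "__main__":
    # Hypothetical screening outcomes: group A shortlisted 8/10, group B 5/10.
    decisions = [("A", 1)] * 8 + [("A", 0)] * 2 + [("B", 1)] * 5 + [("B", 0)] * 5
    print(disparity_report(decisions))
```

A failing ratio is a signal to investigate, not a verdict; the threshold adjustments mentioned above are one remedy, applied and re-audited per group.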

3. Adversarial & API Attacks: When Inputs Fight Back

Definition: Attackers craft malicious inputs or exploit exposed inference APIs.

Impact: Techniques such as model inversion, membership inference, and prompt injection can extract private data or bypass detection systems.

Response: Combine adversarial training with strong API security: authentication, rate limits, and input validation. Use differential privacy in training and red-team models regularly to expose vulnerabilities.
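The API-side defenses can be layered in front of the model. The sketch below assumes a hypothetical `InferenceGateway` that checks an API key, applies a per-key token-bucket rate limit, and validates inputs against an assumed range schema before anything reaches the model. Status codes mirror HTTP conventions; the names and schema format are illustrative, not a particular framework’s API.

```python
import math
import time
from typing import Callable, Dict, Set, Tuple


class TokenBucket:
    """Allows bursts up to `capacity` and a sustained `rate` requests per second."""

    def __init__(self, rate: float, capacity: int, clock: Callable[[], float] = time.monotonic):
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class InferenceGateway:
    """Hypothetical front door: authenticate, rate-limit per key, validate input."""

    def __init__(self, api_keys: Set[str], schema: Dict[str, Tuple[float, float]],
                 rate: float = 5.0, capacity: int = 10,
                 clock: Callable[[], float] = time.monotonic):
        self.api_keys, self.schema = api_keys, schema
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.buckets: Dict[str, TokenBucket] = {}

    def handle(self, api_key: str, features: Dict[str, float]) -> int:
        """Return an HTTP-style status; 200 means the request may reach the model."""
        if api_key not in self.api_keys:
            return 401
        bucket = self.buckets.get(api_key)
        if bucket is None:
            bucket = self.buckets[api_key] = TokenBucket(self.rate, self.capacity, self.clock)
        if not bucket.allow():
            return 429
        if set(features) != set(self.schema):  # reject missing or unexpected fields
            return 400
        for name, (lo, hi) in self.schema.items():
            value = features[name]
            if not isinstance(value, (int, float)) or not math.isfinite(value) or not lo <= value <= hi:
                return 400
        return 200
```

Rate limiting runs before validation here, so malformed requests still spend quota. That is one design choice among several: it makes the high-volume probing behind model inversion and membership inference attacks more expensive.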

4. Interpretability Gaps: When We Can’t Explain Why

Definition: Black-box models make accurate predictions without visible reasoning.

Impact: Regulators increasingly demand explainability; “the algorithm said no” is no longer defensible.

Response: Use tools like SHAP, LIME, and counterfactual explanations to surface logic. Log rationales for high-stakes decisions and provide summaries reviewers can understand.
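For linear or logistic scoring models, per-feature explanations can be computed exactly: each feature’s contribution is its weight times its distance from a baseline (often the training mean), and the contributions sum to the gap between the applicant’s log-odds and the baseline’s. Under a feature-independence assumption these match SHAP values for linear models. The sketch below uses hypothetical credit features and weights and turns the top contributions into reviewer-readable reason codes; non-linear models would need SHAP’s or LIME’s own tooling instead.

```python
import math
from typing import Dict, List, Tuple


def explain_linear(
    weights: Dict[str, float], bias: float, baseline: Dict[str, float], x: Dict[str, float]
) -> Tuple[float, List[Tuple[str, float]]]:
    """Return the predicted probability and per-feature log-odds contributions.

    Contributions are weight * (value - baseline value); they sum to the
    applicant's log-odds minus the baseline's log-odds.
    """
    logit = bias + sum(weights[f] * x[f] for f in weights)
    prob = 1.0 / (1.0 + math.exp(-logit))
    contributions = {f: weights[f] * (x[f] - baseline[f]) for f in weights}
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return prob, ranked


def reason_codes(ranked: List[Tuple[str, float]], top_n: int = 2) -> List[str]:
    """Plain-language summaries of the largest contributions, for reviewers and logs."""
    return [
        f"{name} {'raised' if c > 0 else 'lowered'} the risk score by {abs(c):.2f} log-odds"
        for name, c in ranked[:top_n]
    ]


if __name__ == "__main__":
    # Hypothetical default-risk model; weights and baseline values are made up.
    weights = {"debt_to_income": 3.0, "missed_payments": 0.8, "years_employed": -0.2}
    baseline = {"debt_to_income": 0.3, "missed_payments": 0.5, "years_employed": 6.0}
    applicant = {"debt_to_income": 0.55, "missed_payments": 3.0, "years_employed": 2.0}
    prob, ranked = explain_linear(weights, -2.0, baseline, applicant)
    print(f"default risk: {prob:.2f}")
    for line in reason_codes(ranked):
        print(line)
```

Logging these reason codes next to each high-stakes decision gives reviewers and appeal handlers the “why” without exposing the full model.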

Governance: Making Inference Responsible

Governance is what keeps AI from drifting into danger. Rather than a checklist, it’s a continuous discipline that travels with the model from design to deployment and beyond.

Pre-Deployment: Build for fairness and resilience

Before a model goes live, teams stress-test its logic as rigorously as its accuracy. Bias testing disaggregates performance by demographic group and checks parity using toolkits like Fairlearn or AI Fairness 360, guided by risk frameworks such as the NIST AI RMF. Adversarial robustness exercises push models with edge cases and perturbed data to expose brittle behavior. Risk tiering then classifies each use case by consequence; lending or hiring systems earn tighter controls than recommendation engines. These early safeguards define what “acceptable error” really means before a single prediction reaches production.
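Adversarial robustness exercises can start with something as simple as a perturbation test: jitter each input slightly and count how often the decision flips. This sketch assumes a hypothetical `model` callable that maps a feature dict to a 0/1 decision and uses multiplicative Gaussian noise; a high flip rate near realistic profiles signals brittle behavior worth investigating before launch. It is a basic stability check, not a substitute for gradient-based adversarial attacks.

```python
import random
from typing import Callable, Dict, List

Features = Dict[str, float]


def flip_rate(
    model: Callable[[Features], int],
    inputs: List[Features],
    noise: float = 0.05,
    trials: int = 20,
    seed: int = 0,
) -> float:
    """Fraction of perturbed copies whose decision differs from the clean decision.

    Each feature is scaled by (1 + e), e ~ Normal(0, noise), so a feature that is
    exactly zero stays zero. A fixed seed keeps audits reproducible.
    """
    rng = random.Random(seed)
    flips = total = 0
    for x in inputs:
        clean = model(x)
        for _ in range(trials):
            noisy = {k: v * (1.0 + rng.gauss(0.0, noise)) for k, v in x.items()}
            flips += model(noisy) != clean
            total += 1
    return flips / total if total else 0.0


if __name__ == "__main__":
    # Hypothetical rule-like model: flag transactions above 1,000.
    def model(x: Features) -> int:
        return int(x["amount"] > 1000)

    print("far from threshold:", flip_rate(model, [{"amount": 100.0}, {"amount": 5000.0}]))
    print("near threshold:", flip_rate(model, [{"amount": 999.0}], trials=200))
```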

Runtime: Monitor, intervene, and contain

Once live, inference becomes an operational heartbeat. Real-time metrics like accuracy, latency percentiles, and error rates act as early-warning sensors. Circuit breakers pause model outputs if confidence plunges or anomaly rates spike. Human-in-the-loop oversight ensures that borderline cases, especially in lending or healthcare, route to expert review instead of blind automation. For us at Fulcrum Digital, governance isn’t bolted on after launch; our AI platform bakes these guardrails into every workflow, flagging drift, alerting operators, and orchestrating retraining when performance slips.
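A confidence-based circuit breaker and human-in-the-loop routing can be combined in a few lines. This is a generic sketch, with the class name, window size, and thresholds all illustrative assumptions: borderline scores go to expert review, and if mean confidence over a rolling window drops below a floor, the breaker trips and sends every case to review until an operator resets it.

```python
from collections import deque
from typing import Tuple


class ConfidenceBreaker:
    """Routes predictions to 'auto' or 'human_review'; trips on sustained low confidence."""

    def __init__(self, window: int = 100, min_mean: float = 0.7,
                 review_band: Tuple[float, float] = (0.4, 0.6)):
        self.window = window
        self.min_mean = min_mean
        self.review_band = review_band
        self.recent: deque = deque(maxlen=window)  # rolling confidences
        self.tripped = False

    def route(self, score: float) -> str:
        """`score` is the model's probability for the positive class."""
        confidence = max(score, 1.0 - score)
        self.recent.append(confidence)
        # Trip only once the window is full, so a few early scores can't trigger it.
        if len(self.recent) == self.window and sum(self.recent) / self.window < self.min_mean:
            self.tripped = True
        if self.tripped:
            return "human_review"
        lo, hi = self.review_band
        if lo <= score <= hi:  # borderline case
            return "human_review"
        return "auto"

    def reset(self) -> None:
        """Called by an operator after investigating (for example, after retraining)."""
        self.tripped = False
        self.recent.clear()
```

Routing to review rather than returning nothing is one way to “pause” outputs; a stricter design could reject requests outright while the breaker is open.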

Post-Deployment: Audit, learn, and evolve

Governance doesn’t end at release. In fact, it matures with every cycle. Drift detection monitors input distributions for slow shifts. Quarterly audits validate fairness and robustness, while model cards and decision logs capture lineage for compliance and transparency. Feedback from users, appeals, and real-world corrections flows back into the next training round, turning governance into a learning loop rather than a paperwork exercise.

Together, these three phases form a living control system for AI, one that keeps inference accurate, accountable, and aligned with both regulation and reality.

Next-Generation Inference Challenges

Inference is no longer a single-step prediction. As models grow generative, agentic, and multimodal, the surface area for risk expands right alongside capability. The next era of AI will test how quickly governance can evolve to match the creativity of the systems it oversees.

Generative AI – From Classification to Creation

- Large language and vision models now generate text, code, and imagery, not just labels.

- The risk lies in hallucinations, prompt leakage, and content misuse, where models create confident but false or unsafe outputs.

- Governance shifts to output filtering, contextual prompts, and human review, with red-teaming to simulate adversarial misuse before release.
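Output filtering for generative systems often begins with a post-processing pass. This minimal sketch redacts email addresses and SSN-formatted numbers with regular expressions and flags outputs containing blocked terms, such as a canary string planted in the system prompt to detect prompt leakage. The patterns and canary value are hypothetical; real deployments rely on vetted PII detectors and policy classifiers.

```python
import re
from typing import List, Tuple

# Hypothetical redaction rules; real systems use vetted PII detectors.
REDACTIONS = [
    (re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"), "[EMAIL]"),
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[SSN]"),
]


def filter_output(text: str, blocked_terms: List[str]) -> Tuple[str, bool]:
    """Redact PII-like spans and report whether the output needs human review."""
    for pattern, label in REDACTIONS:
        text = pattern.sub(label, text)
    lowered = text.lower()
    # Blocked terms can include a canary string hidden in the system prompt:
    # if it appears in an output, the prompt has leaked.
    needs_review = any(term.lower() in lowered for term in blocked_terms)
    return text, needs_review


if __name__ == "__main__":
    print(filter_output("Contact jane.doe@example.com, SSN 123-45-6789.", ["CANARY-7f3a"]))
```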

Agentic Systems – When Decisions Chain Themselves

- Frameworks like AutoGPT and LangChain execute multi-step reasoning autonomously, chaining outputs from one model into another.

- Small inference errors can compound across steps, producing flawed or unsafe outcomes.

- Guardrails now include pipeline monitoring, intermediate checkpoints, and human-in-the-loop validation at key decision nodes.
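Intermediate checkpoints can be expressed as a validation function per step, plus an optional approval gate for high-stakes nodes. The sketch below is framework-agnostic and hypothetical (it does not use AutoGPT or LangChain APIs): it halts the chain as soon as an intermediate result fails its check, so one bad inference cannot compound downstream, and it fails closed when a gated step has no approving reviewer.

```python
from typing import Any, Callable, List, Optional, Tuple

# (name, action, check): action transforms the running value; check validates the result.
Step = Tuple[str, Callable[[Any], Any], Callable[[Any], bool]]


class CheckpointFailed(Exception):
    """Raised to halt the chain before a bad intermediate result propagates."""


def run_pipeline(
    steps: List[Step],
    initial: Any,
    needs_approval: Optional[Callable[[str], bool]] = None,
    approve: Optional[Callable[[str, Any], bool]] = None,
) -> Any:
    value = initial
    for name, action, check in steps:
        value = action(value)
        if not check(value):
            raise CheckpointFailed(f"step '{name}' failed validation")
        if needs_approval is not None and needs_approval(name):
            # Fail closed: a gated step with no approving reviewer does not proceed.
            if approve is None or not approve(name, value):
                raise CheckpointFailed(f"step '{name}' was not approved")
    return value


if __name__ == "__main__":
    # Hypothetical refund agent: parse an amount, then compute a refund that needs sign-off above 50.
    steps: List[Step] = [
        ("parse", float, lambda v: v >= 0),
        ("refund", lambda v: v * 2, lambda v: v < 100),
    ]
    print(run_pipeline(steps, "10", needs_approval=lambda n: n == "refund",
                       approve=lambda n, v: v <= 50))
```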

Multimodal Models – One Model, Many Vulnerabilities

- Systems combining text, vision, and audio expand both capability and exposure.

- Visual perturbations and cross-modal attacks can alter meaning or inject malicious instructions.

- Mitigation relies on modality-specific testing, cross-channel consistency checks, and inclusive datasets that balance representation.

The Reality Check: Regulation and Economics

As AI moves from proof-of-concept to production, two forces decide who scales and who stalls: regulatory scrutiny and economic gravity. Both are reshaping how inference systems are built, governed, and sustained.

Regulatory Pressure: Compliance as Design Principle

Governments aren’t merely policing AI but engineering its boundaries. The EU AI Act, GDPR Article 22, and U.S. sector rules for banking, healthcare, and employment all converge on the same demand: transparency, documentation, and human oversight. Compliance is no longer an external audit; it’s a design philosophy. Model cards, audit logs, and fairness dashboards are becoming part of the software stack itself. When regulation is treated as architecture, not paperwork, it strengthens the system instead of slowing it down.

Economic Pressure: The Hidden Inference Tax

If training builds intelligence, inference pays the energy bill. A large model might cost $10 million to train once, but serving a billion inferences a month at $0.01 each costs $120 million a year, so serving matches the entire training bill within the first month. Optimization isn’t a luxury; it’s survival. Techniques such as quantization, pruning, and edge deployment cut costs while reducing latency and carbon load. The smartest cost control is governance itself: models that drift less, fail less, and explain more end up wasting fewer cycles and inviting fewer fines. Efficiency and ethics now run on the same equation.
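The serving arithmetic behind those figures, with N monthly inferences at cost c each and the example’s $10 million training cost:

```latex
\begin{aligned}
C_{\text{annual}} &= N \times c \times 12 = 10^{9} \times \$0.01 \times 12 = \$1.2 \times 10^{8} \\
t_{\text{match}} &= \frac{C_{\text{train}}}{N \times c} = \frac{\$1 \times 10^{7}}{\$1 \times 10^{7}\ \text{per month}} = 1\ \text{month}
\end{aligned}
```

At this volume, every fractional cent shaved off c through the optimizations above is multiplied by twelve billion inferences a year.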

In the coming phase of AI, competitiveness will hinge on mastering both constraints. The companies that design for compliance and cost will not only scale faster but last longer too.

The Final Test of Intelligence

Inference is where AI stops promising and starts performing.

Every prediction, every decision, is a live test of how well your systems learn, adapt, and stay accountable.

The companies moving fastest now aren’t chasing scale for its own sake. They’re building stability into intelligence: models that can explain themselves, recover from drift, and earn trust over time.

That’s how we see AI in production: not as code to be shipped, but as behavior to be managed.

Want to stay ahead?

Sign up for FD // Enterprise Intelligence Newsletter for grounded insights on building AI that works where it matters most.