Large Language Models are AI systems trained on vast data to understand and generate text.

Large Language Models (LLMs) are advanced artificial intelligence (AI) systems trained on massive datasets to perform a wide range of natural language processing (NLP) tasks. They use deep learning, particularly transformer-based architectures, to generate, summarize, classify, and translate human language with high accuracy. These models underpin modern conversational AI and enable scalable automation across industries.

Detailed Definition & Explanation

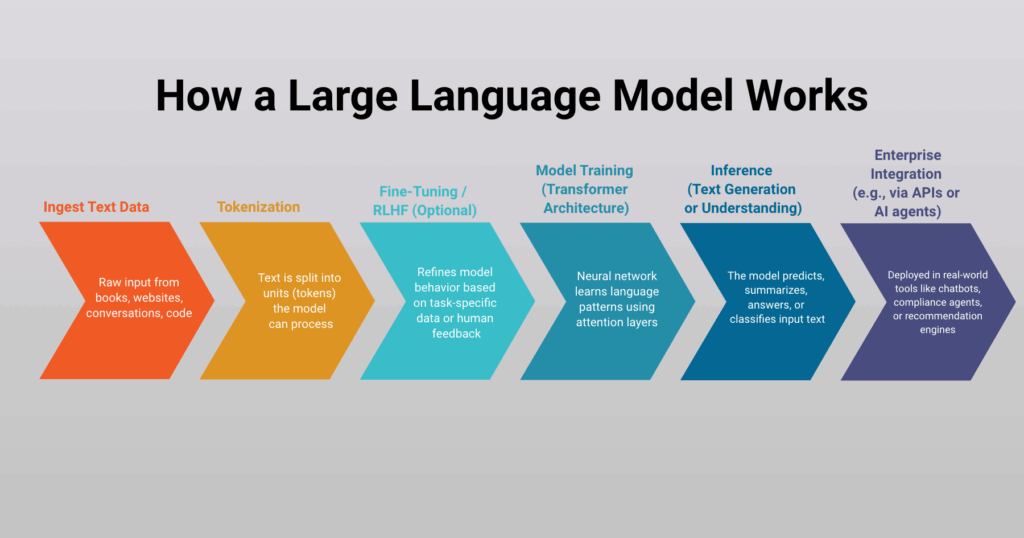

Large Language Models (LLMs) are a subclass of foundation models designed to process, understand, and generate natural language using deep learning techniques. Trained on a vast amount of data, from books and websites to code and conversations, LLMs learn statistical patterns and relationships within human language. They perform a variety of natural language processing tasks such as question answering, summarization, sentiment analysis, and content generation.

At the core of most LLMs is the transformer architecture, which uses self-attention to process all tokens in a sequence in parallel rather than one at a time. This lets the model learn contextual relationships between words, even across long passages. Depending on the use case, a transformer may use encoder layers, decoder layers, or both; most generative LLMs use a decoder-only design. As parameter counts scale into the billions (or even trillions), these models become able to perform new tasks from a natural-language instruction alone (zero-shot learning) or from a handful of examples supplied in the prompt (few-shot learning), without task-specific training.
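
To make the self-attention step concrete, here is a minimal single-head sketch in plain Python. It assumes tiny hand-written matrices in place of learned weights, and the names `self_attention`, `wq`, `wk`, and `wv` are illustrative rather than taken from any library. Setting `causal=True` applies the mask used by decoder-only models, so each token attends only to itself and earlier tokens.

```python
import math
from typing import List

Matrix = List[List[float]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]

def softmax(row: List[float]) -> List[float]:
    # Subtracting the row maximum keeps exp() stable; masked (-inf) scores get weight 0.
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x: Matrix, wq: Matrix, wk: Matrix, wv: Matrix,
                   causal: bool = False) -> Matrix:
    """Single-head scaled dot-product self-attention; x is (n tokens x d features)."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    scale = math.sqrt(len(k[0]))
    # One matrix product scores every token against every other token at once,
    # which is what lets transformers process a sequence in parallel.
    scores = [[s / scale for s in row] for row in matmul(q, transpose(k))]
    if causal:
        # Decoder-style mask: position i may only attend to positions j <= i.
        scores = [[s if j <= i else float("-inf") for j, s in enumerate(row)]
                  for i, row in enumerate(scores)]
    weights = [softmax(row) for row in scores]
    # Each output row is an attention-weighted average of the value vectors.
    return matmul(weights, v)
```

With identity weights and `causal=True`, the first token can only attend to itself, so its output equals its input vector; with `causal=False` it also mixes in information from later tokens. Production implementations use tensor libraries, multiple heads, and learned projections, none of which are shown here.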

The main types of large language models are:

- Decoder-only (e.g., GPT series): Designed primarily for text generation using autoregressive prediction.

- Encoder-only (e.g., BERT): Optimized for classification and understanding tasks like sentiment or entity detection.

- Encoder–Decoder (e.g., T5, BART): Used for sequence-to-sequence tasks such as summarization and translation.

- Multimodal LLMs: Extend capabilities to images, audio, or video along with text.

- Fine-tuned LLMs: Pretrained models adapted to specific domains or tasks via transfer learning, for example supervised fine-tuning or reinforcement learning.
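
Autoregressive prediction, the generation strategy of decoder-only models, can be illustrated with a toy loop. The next-token table below is a hypothetical stand-in for a trained model and conditions only on the previous token, whereas a real LLM conditions on the entire prefix; `greedy_generate` and `NEXT_TOKEN_PROBS` are illustrative names.

```python
from typing import Dict, List

# Hypothetical toy "model": next-token probabilities given only the previous token.
# A real decoder-only LLM conditions on the whole prefix via masked self-attention.
NEXT_TOKEN_PROBS: Dict[str, Dict[str, float]] = {
    "<s>": {"the": 0.6, "a": 0.4},
    "the": {"claim": 0.7, "policy": 0.3},
    "a": {"policy": 1.0},
    "claim": {"is": 0.8, "</s>": 0.2},
    "policy": {"is": 0.6, "</s>": 0.4},
    "is": {"approved": 0.9, "</s>": 0.1},
    "approved": {"</s>": 1.0},
}

def greedy_generate(prefix: List[str], max_new_tokens: int = 10) -> List[str]:
    """Autoregressive decoding: predict one token, append it, and repeat."""
    tokens = list(prefix)
    for _ in range(max_new_tokens):
        dist = NEXT_TOKEN_PROBS[tokens[-1]]
        next_token = max(dist, key=dist.get)  # greedy: take the most probable token
        if next_token == "</s>":  # the end-of-sequence token stops generation
            break
        tokens.append(next_token)
    return tokens
```

Calling `greedy_generate(["<s>"])` follows the most probable token at each step and stops at the end-of-sequence marker. Real systems usually sample from the distribution (for example with temperature or top-p settings) rather than always taking the maximum.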

These models differ from traditional AI systems in that a single model, pretrained once on a massive amount of data, can handle many tasks without separate training for each one. Their output quality can be further improved with reinforcement learning from human feedback (RLHF).
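
One way to see how a single model covers many tasks without retraining is that the task is specified in the input text. The sketch below assembles zero-shot and few-shot prompts; the `Input:`/`Output:` layout and the `build_prompt` helper are assumptions for illustration, since each model family defines its own prompt or chat template.

```python
from typing import Sequence, Tuple

def build_prompt(instruction: str, query: str,
                 examples: Sequence[Tuple[str, str]] = ()) -> str:
    """Build a prompt: zero-shot if `examples` is empty, few-shot otherwise."""
    lines = [instruction, ""]
    # Each demonstration shows the model the expected input/output pattern.
    for source, target in examples:
        lines += [f"Input: {source}", f"Output: {target}", ""]
    # The final query leaves "Output:" open for the model to complete.
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)

# Example usage with a hypothetical sentiment task.
zero_shot = build_prompt("Classify the sentiment as positive or negative.",
                         "The claim was settled quickly.")
few_shot = build_prompt("Classify the sentiment as positive or negative.",
                        "The claim was settled quickly.",
                        examples=[("Great service.", "positive"),
                                  ("Still waiting on a reply.", "negative")])
```

Passing an empty `examples` sequence yields a zero-shot prompt; adding labeled pairs yields a few-shot prompt. Swapping the instruction (say, from sentiment classification to translation) changes the task while the model's weights stay the same.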

In agentic AI systems, LLMs serve as cognitive engines for reasoning, dialogue, summarization, and decision support. Platforms like FD Ryze embed large language models within AI agent workflows to process unstructured content such as claims documents, policy guidance, or user queries. FD Ryze’s orchestration layer controls when and how LLMs are triggered, ensuring responses are not only fluent but compliant, explainable, and governed by enterprise rules.
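
The gating role described for an orchestration layer can be sketched generically. This is a hypothetical illustration, not FD Ryze’s API: `governed_call`, the `RULES` list, and the two sample rules are invented for the example, and `llm` is any callable that maps a prompt to text (a stub in the usage below).

```python
import re
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Sequence

@dataclass
class GovernedResult:
    text: str
    approved: bool
    violations: List[str] = field(default_factory=list)

# Hypothetical enterprise rules: each returns a violation message, or None if the text passes.
def no_ssn(text: str) -> Optional[str]:
    return "contains an SSN-like number" if re.search(r"\b\d{3}-\d{2}-\d{4}\b", text) else None

def within_length(text: str, limit: int = 500) -> Optional[str]:
    return "response too long" if len(text) > limit else None

RULES: List[Callable[[str], Optional[str]]] = [no_ssn, within_length]

def governed_call(task: str, content: str, llm: Callable[[str], str],
                  allowed_tasks: Sequence[str] = ("summarize", "classify")) -> GovernedResult:
    # Gate 1: decide whether the LLM is triggered at all for this task.
    if task not in allowed_tasks:
        return GovernedResult("", False, [f"task '{task}' not permitted"])
    # Call the model; `llm` can be any function from prompt text to response text.
    output = llm(f"{task}: {content}")
    # Gate 2: run every rule on the output before it is released downstream.
    violations = [msg for rule in RULES if (msg := rule(output)) is not None]
    return GovernedResult(output, not violations, violations)
```

For example, `governed_call("summarize", claim_text, stub)` with a stub that echoes a Social Security number comes back with `approved=False`, and a request for a task outside `allowed_tasks` never reaches the model. Keeping the model behind a single entry point means the checks run on every response, whichever model is plugged in.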

Why It Matters

Claims Automation and Document Intelligence

In the insurance sector, LLMs enable rapid summarization of lengthy policy documents, incident reports, and claims files. For example, Lemonade uses AI powered by transformer-based models to interpret and process claims in minutes, reducing manual review time and fraud risk.

Fraud Detection and Compliance Analysis

Financial institutions leverage LLMs to flag suspicious transaction patterns, decode regulatory language, and summarize legal obligations. JPMorgan Chase developed a custom LLM to scan complex regulatory filings and extract actionable obligations, improving audit readiness and reducing legal costs.

Personalized Marketing at Scale

E-commerce platforms use LLMs to generate real-time, hyper-personalized product descriptions, FAQs, and chatbot replies. Amazon’s Bedrock service enables retailers to build custom LLM-based tools that adapt content based on user behavior and preferences.

Customer Insights and Virtual Assistants

In consumer product companies, LLMs support conversational AI assistants that handle customer service, product guidance, and post-sale engagement. Unilever uses natural language generation tools to surface insights from global consumer reviews and market feedback, fueling R&D decisions.

AI Tutoring and Knowledge Summarization

Educational institutions integrate LLMs into virtual tutors, grading systems, and research tools. Arizona State University, in partnership with OpenAI, uses ChatGPT’s large language models to support personalized tutoring, assignment feedback, and accessible learning experiences for students and faculty.

Adoption Trends and Real-World Examples

The roots of large language models lie in the neural network-based language models of the 2010s. A transformative shift came in 2017 with the introduction of the transformer architecture, which soon led to models such as OpenAI’s GPT and Google’s BERT (both released in 2018). These models scaled up natural language processing and enabled general-purpose understanding and generation. Tasks that once required separate task-specific models can now be handled by a single LLM trained on a vast amount of data.

According to Kong Research’s 2025 Enterprise LLM Adoption Report, based on a survey of 550 IT leaders, developers, and engineers, 72% of organizations plan to increase their investments in large language models over the next year, with nearly 40% already allocating more than $250,000 annually to LLM initiatives. LLMs are emerging not just as productivity enhancers but as foundational components of enterprise AI strategies. Their ability to adapt to domain-specific tasks without custom pipelines gives them a competitive edge.

OpenAI GPT-4

GPT-4 is a transformer-based LLM pretrained to predict the next token autoregressively, enabling advanced text generation, translation, and reasoning. It powers tools like ChatGPT, offering enterprises scalable solutions for content creation, summarization, and interactive AI.

Anthropic Claude

Claude uses a constitutional AI approach to align language model behavior with human values. It is used in financial services and legal tech to generate safe, high-quality summaries of complex documents while adhering to compliance requirements.

Mistral 7B & Mixtral

These open-weight LLMs offer strong performance with relatively modest compute requirements. Enterprises use them to power private AI agents for internal knowledge retrieval, helping maintain confidentiality while reducing API costs.
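
Internal knowledge retrieval typically means finding the most relevant internal documents before passing them to a privately hosted model. The sketch below shows only that ranking step, using bag-of-words cosine similarity as a simple stand-in; production systems more often use embedding models and a vector index. The function names and sample documents are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List

# Hypothetical internal documents used only for this illustration.
KNOWLEDGE_BASE = [
    "Expense reports are due on the fifth business day of each month.",
    "VPN access requires a hardware security key.",
    "Remote employees must enable the VPN before accessing internal systems.",
]

def bag_of_words(text: str) -> Counter:
    """Lowercase the text and count alphanumeric tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)  # missing tokens count as 0 in a Counter
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, documents: List[str], top_k: int = 2) -> List[str]:
    """Return the top_k documents most similar to the query."""
    q = bag_of_words(query)
    ranked = sorted(documents, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)
    return ranked[:top_k]
```

The top-ranked passages would then be included in the prompt sent to a locally hosted model, which keeps both the documents and the query within the organization's own infrastructure.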

What Lies Ahead

LLMs Will Become Multimodal and Context-Aware

Enterprises will increasingly adopt models capable of processing not just text, but images, charts, voice, and video. These multimodal LLMs will support advanced use cases like claims adjudication via photo analysis or customer emotion detection from voice input.

Domain-Specific LLMs Will Gain Ground

Companies will fine-tune foundation models to their unique data and tasks, improving relevance and reducing hallucinations. This will be key in industries like healthcare, legal, and finance where accuracy and compliance are paramount. Expect to see more partnerships between AI labs and domain leaders.

LLMs Will Power Autonomous Agents

Rather than acting as static tools, LLMs will be embedded into agentic workflows, deciding when to summarize, when to ask for input, and when to act. This shift will demand agent orchestration layers and real-time usage governance across enterprises.
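
The summarize/ask/act decision described above can be sketched as a small control loop. In this hypothetical example a rule-based `choose_action` function stands in for the LLM's decision, and `ClaimState` and `REQUIRED` are invented names; in a real agent, the model would propose the next step and an orchestration layer would approve or block it.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical required fields for a claim; invented for this illustration.
REQUIRED = ("policy_id", "incident_date", "amount")

@dataclass
class ClaimState:
    fields: Dict[str, str]
    summary: str = ""
    log: List[str] = field(default_factory=list)

def choose_action(state: ClaimState) -> str:
    """Stand-in for an LLM deciding the next step: summarize, ask_user, or act."""
    if not state.summary:
        return "summarize"
    if any(name not in state.fields for name in REQUIRED):
        return "ask_user"
    return "act"

def run_agent(state: ClaimState, max_steps: int = 5) -> ClaimState:
    for _ in range(max_steps):
        action = choose_action(state)
        state.log.append(action)
        if action == "summarize":
            # Placeholder summary; a real agent would call the model here.
            state.summary = "Claim fields provided: " + ", ".join(sorted(state.fields))
        else:
            # "ask_user" pauses for human input and "act" hands off to a governed tool call;
            # either way, this sketch stops and returns control to the orchestrator.
            break
    return state
```

A claim missing its incident date and amount is summarized and then handed back for human input, while a complete claim proceeds to the act step.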

Cost-Efficiency and Customization Will Drive Open-Source Adoption

As enterprises seek control over data privacy and operational costs, open-source LLMs will become core to in-house AI strategies. Open-weight models such as Mixtral and Llama 3 will dominate internal-facing use cases, particularly in regulated sectors.

Real-Time Adaptation and Memory Will Be Built In

Future LLMs will remember past interactions, adapt to user preferences, and dynamically update knowledge without full retraining. This will enable long-term customer engagement, learning progress tracking, and evolving policy generation, especially useful in HigherEd and CPS contexts.

Related Terms

- Foundation Models

- Conversational AI

- Natural Language Processing (NLP)

- Transformer Architecture

- Fine-Tuning

- Reinforcement Learning

- Neural Network Architecture