Data protection frameworks are structured systems to secure personal and sensitive data.

Data protection frameworks are structured policies, protocols, and governance models designed to secure sensitive data and ensure compliance with data privacy laws. These frameworks help organizations prevent data breaches, maintain regulatory compliance, and build customer trust by safeguarding personal information collected across systems. In agentic AI contexts, they provide the guardrails for secure and ethical data use.

Detailed Definition & Explanation

A data protection framework is an integrated set of processes, principles, and legal requirements that guide how organizations collect, store, access, and share personal and sensitive data. These frameworks align with privacy laws such as the EU’s General Data Protection Regulation (GDPR), the U.S. Health Insurance Portability and Accountability Act (HIPAA), and other region- or sector-specific mandates.

Instead of treating data privacy as an afterthought, modern frameworks embed protection principles at every stage of the data lifecycle. This includes defining and classifying data types (such as personal, sensitive, or regulated), applying encryption and role-based access control, and ensuring compliance workflows are continuously monitored and auditable.
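
The classification and role-based access steps can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the classification tiers, field names, roles, and the `can_read` and `filter_record` helpers are all hypothetical, and encryption is left out of scope.

```python
from enum import Enum


class DataClass(Enum):
    # Hypothetical classification tiers, ordered from least to most restricted.
    PUBLIC = 0
    PERSONAL = 1
    SENSITIVE = 2
    REGULATED = 3


# Hypothetical output of a data classification step: field name -> class.
FIELD_CLASSES = {
    "product_id": DataClass.PUBLIC,
    "email": DataClass.PERSONAL,
    "diagnosis_code": DataClass.SENSITIVE,
    "ssn": DataClass.REGULATED,
}

# Hypothetical roles, each mapped to the most restricted class it may read.
ROLE_CLEARANCE = {
    "analyst": DataClass.PERSONAL,
    "clinician": DataClass.SENSITIVE,
    "compliance_officer": DataClass.REGULATED,
}

# (role, field, allowed) tuples, kept so every access decision is auditable.
audit_log: list[tuple[str, str, bool]] = []


def can_read(role: str, field: str) -> bool:
    """Allow access only if the role's clearance covers the field's class."""
    # Fail closed: unknown fields count as REGULATED, unknown roles get no clearance.
    field_class = FIELD_CLASSES.get(field, DataClass.REGULATED)
    clearance = ROLE_CLEARANCE.get(role)
    allowed = clearance is not None and clearance.value >= field_class.value
    audit_log.append((role, field, allowed))
    return allowed


def filter_record(role: str, record: dict) -> dict:
    """Return a copy of the record containing only the fields the role may read."""
    return {k: v for k, v in record.items() if can_read(role, k)}


print(filter_record("analyst", {"email": "a@example.com", "ssn": "000-00-0000", "product_id": 7}))
# → {'email': 'a@example.com', 'product_id': 7}
```

Treating unknown fields as the most restricted class is a deliberate fail-closed choice: a newly added column stays hidden until someone classifies it.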

For instance, platforms like FD Ryze implement a dynamic, policy-driven governance layer that enables agentic AI systems to operate within evolving legal boundaries. Rather than merely reacting to compliance violations, FD Ryze preemptively restricts data access and processing based on jurisdiction, user consent, and policy lineage. Its agents are equipped to apply data minimization rules, enforce purpose limitation, and generate real-time audit trails, ensuring not just technical security, but also legal defensibility and operational integrity.
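
FD Ryze’s internal implementation is not described here, so the following is a generic, hypothetical sketch of the same ideas: a request is checked against purpose, jurisdiction, and consent before any data is released; only the fields the purpose needs are passed on (data minimization); and every decision is appended to an audit trail. The purpose names, field sets, and the `evaluate` function are illustrative assumptions, and consent is treated as the only lawful basis for processing to keep the example short.

```python
from dataclasses import dataclass, field

# Hypothetical purpose limitation + data minimization policy:
# each purpose may touch only the fields listed for it.
PURPOSE_FIELDS = {
    "order_fulfillment": {"name", "shipping_address"},
    "marketing": {"email"},
}

# Hypothetical jurisdictional policy: where each purpose is permitted at all.
PURPOSE_JURISDICTIONS = {
    "order_fulfillment": {"EU", "US"},
    "marketing": {"US"},
}


@dataclass
class Subject:
    subject_id: str
    jurisdiction: str
    consented_purposes: set[str] = field(default_factory=set)


@dataclass
class Decision:
    allowed: bool
    reason: str
    released: dict


def evaluate(subject: Subject, purpose: str, record: dict, trail: list) -> Decision:
    """Check purpose, jurisdiction, and consent before any data is released."""
    if purpose not in PURPOSE_FIELDS:
        decision = Decision(False, "unknown purpose", {})
    elif subject.jurisdiction not in PURPOSE_JURISDICTIONS.get(purpose, set()):
        decision = Decision(False, f"{purpose} not permitted in {subject.jurisdiction}", {})
    elif purpose not in subject.consented_purposes:
        decision = Decision(False, f"no consent for {purpose}", {})
    else:
        # Data minimization: release only the fields this purpose needs.
        allowed_fields = PURPOSE_FIELDS[purpose]
        released = {k: v for k, v in record.items() if k in allowed_fields}
        decision = Decision(True, "ok", released)
    # Every decision, allowed or denied, goes into the audit trail.
    trail.append((subject.subject_id, purpose, decision.allowed, decision.reason))
    return decision


trail: list = []
customer = Subject("u1", "EU", {"order_fulfillment", "marketing"})
record = {"name": "Ana", "email": "ana@example.com", "shipping_address": "1 Main St"}
print(evaluate(customer, "order_fulfillment", record, trail).released)
# → {'name': 'Ana', 'shipping_address': '1 Main St'}
print(evaluate(customer, "marketing", record, trail).reason)
# → marketing not permitted in EU
```

Even though this EU customer consented to marketing, the placeholder jurisdiction policy blocks it, which illustrates restricting processing preemptively rather than flagging a violation afterwards.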

Common types of data protection frameworks include:

- GDPR Compliance Models: Frameworks built around the EU’s General Data Protection Regulation.

- HIPAA-based Systems: U.S. healthcare compliance models for protecting medical data.

- NIST Privacy Framework: Voluntary U.S. guidance from the National Institute of Standards and Technology for managing privacy risk alongside cybersecurity.

- Custom Enterprise Frameworks: Organization-specific models integrating sectoral and international mandates.

- Cloud-Native Data Governance: Frameworks tailored for SaaS and multi-cloud environments.

In the context of Agentic AI, these frameworks don’t just monitor data; they shape the behavior of the agents themselves, embedding privacy and compliance as functional constraints within autonomous workflows.

Why It Matters

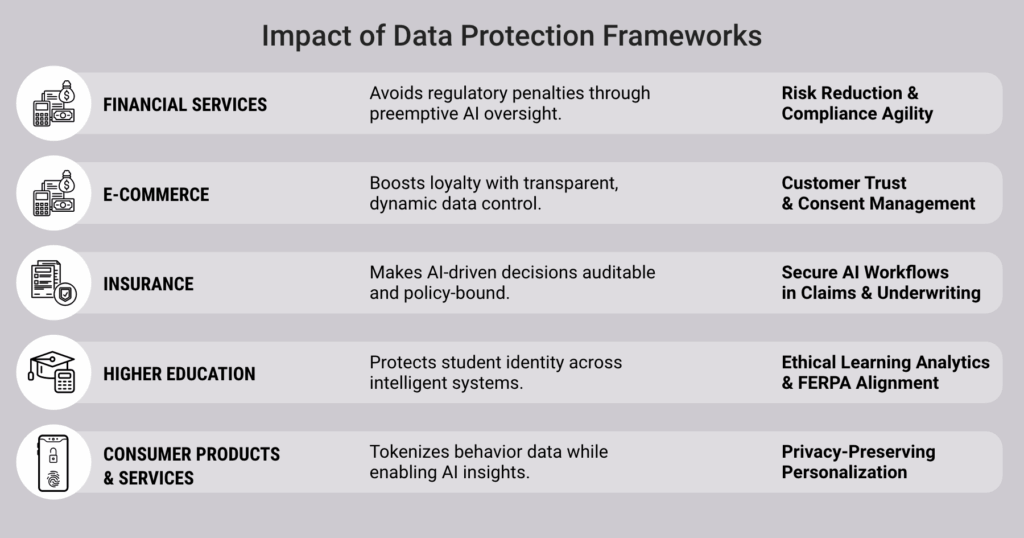

Mitigates Financial and Legal Risk

Robust frameworks reduce exposure to penalties from data breaches or non-compliance with privacy regulations. For example, financial institutions that use cloud-based CRMs to serve both EU and California customers must satisfy GDPR and CCPA simultaneously. Citibank’s adoption of automated compliance agents enables preemptive checks against cross-border regulatory violations, strengthening both legal posture and investor trust.

Builds Trust and Brand Integrity

Consumers increasingly expect their personal information to be handled transparently. E-commerce leaders implement frameworks that not only anonymize shopper data but also explain how data is used. Shopify’s privacy engine enables real-time consent updates and data portability, enhancing customer retention and reducing abandonment due to data concerns.

Secures AI-Enabled Claims Workflows

As insurers integrate Agentic AI into claims and underwriting, frameworks ensure that sensitive health, financial, and behavioral data are used ethically and lawfully. AXA’s compliance architecture integrates policy-based access controls into its AI agents, ensuring claims decisions are explainable, auditable, and compliant with evolving data protection laws.

Enables Ethical Data Use in Higher Ed

Universities managing student records, biometric data, and learning analytics face overlapping mandates from FERPA, GDPR, and institutional codes. Frameworks ensure that student data used by AI tutors and learning agents is protected. MIT’s Open Learning platform uses layered governance to restrict AI access to identifiable student data without explicit consent.

Improves Personalization Without Sacrificing Privacy

Consumer products companies rely on behavioral data to power hyper-personalized experiences. With a robust data protection framework, companies like Nestlé can personalize offers through AI agents while ensuring sensitive customer data is tokenized and compliant with privacy laws. This balance fosters competitive advantage and regulatory peace of mind.
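
Tokenization of this kind can be sketched as follows. It is an illustrative assumption, not a description of Nestlé’s system: the `TokenVault` class and `tokenize_profile` helper are hypothetical, and tokens are derived with HMAC-SHA256 from Python’s standard library so that the same value always yields the same token.

```python
import hashlib
import hmac
import secrets
from typing import Optional


class TokenVault:
    """Hypothetical vault that swaps sensitive values for opaque tokens.

    Tokens are deterministic (HMAC-SHA256 under a secret key), so the same
    value always maps to the same token and records can still be joined.
    Originals are kept only in the vault for authorized detokenization.
    """

    def __init__(self, key: Optional[bytes] = None):
        self._key = key if key is not None else secrets.token_bytes(32)
        self._vault: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        digest = hmac.new(self._key, value.encode("utf-8"), hashlib.sha256).hexdigest()
        token = "tok_" + digest[:32]  # 128 bits of the MAC; accidental collisions are negligible
        self._vault[token] = value
        return token

    def detokenize(self, token: str) -> str:
        # Only services with access to the vault can recover the original value.
        return self._vault[token]


def tokenize_profile(vault: TokenVault, profile: dict, sensitive: set[str]) -> dict:
    """Tokenize sensitive fields; leave behavioral fields usable for personalization."""
    return {k: vault.tokenize(v) if k in sensitive else v for k, v in profile.items()}


vault = TokenVault()
profile = {"email": "ana@example.com", "favorite_category": "coffee", "visits_last_30d": 12}
safe = tokenize_profile(vault, profile, sensitive={"email"})
print(safe["favorite_category"], safe["email"].startswith("tok_"))  # coffee True
```

A personalization agent can work with `favorite_category` and the stable token without ever seeing the email address. Deterministic tokens keep records joinable but reveal when two records share a value; random tokens avoid that leak at the cost of joinability.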

Adoption Trends and Real-World Examples

AI adoption is expected to grow within compliance departments. According to the 2023 Thomson Reuters Risk & Compliance Survey Report, 48% of surveyed professionals believe AI could improve internal efficiency, and another 35% see its potential to help teams stay ahead of regulatory and legislative changes. This signals growing trust in AI not just as an operational tool but as a strategic layer for navigating evolving compliance landscapes, particularly in the context of dynamic data protection frameworks.

As organizations accelerate cloud adoption and deploy agentic AI systems across regulated workflows, data protection frameworks are no longer optional. They are becoming embedded by design, enabling real-time consent enforcement, jurisdictional filtering, and auditability across industries.

Salesforce Shield

Salesforce offers an enterprise data protection framework with field-level encryption, event monitoring, and automated GDPR controls. It enables businesses to build AI-powered customer journeys while adhering to legal and ethical standards.

Microsoft Priva

Priva provides a compliance-centric framework for managing personal data use across Microsoft 365 environments. It offers data flow mapping, policy alerts, and built-in remediation to support regulatory compliance for AI workflows.

Palantir Foundry

Palantir’s platform integrates granular data governance with operational AI, enabling enterprises to create agent-led workflows that comply with local and international data protection laws. Its “Code-as-Policy” feature automates enforcement of compliance logic in real-time decision-making.

What Lies Ahead

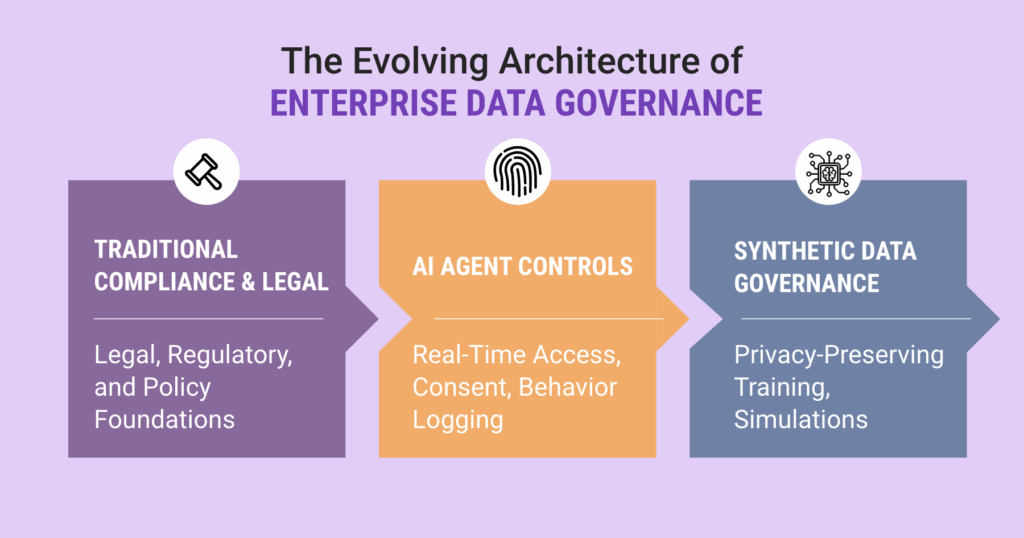

AI-Native Compliance Layers Will Become Standard

As Agentic AI systems gain autonomy, traditional post-processing checks are no longer sufficient. Compliance logic will increasingly be embedded directly into agents, enabling self-regulating AI that understands jurisdictional boundaries and consent dynamics. Enterprises must plan for AI-native data governance that evolves with changing laws.
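
One way to embed compliance logic directly into an agent is to make the check a precondition of every tool call rather than a review of its output. The sketch below assumes a hypothetical policy table (`ALLOWED_ACTIONS`) and a hypothetical `compliant` decorator; it is illustrative and not the API of any specific agent framework.

```python
import functools


class ComplianceViolation(Exception):
    """Raised when an agent action would breach a policy constraint."""


# Hypothetical policy: jurisdictions in which each agent action may run.
ALLOWED_ACTIONS = {
    "send_marketing_email": {"US"},
    "summarize_claim": {"US", "EU"},
}


def compliant(action_name: str):
    """Decorator that enforces policy before the wrapped agent action executes."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(user: dict, *args, **kwargs):
            jurisdiction = user.get("jurisdiction")
            if jurisdiction not in ALLOWED_ACTIONS.get(action_name, set()):
                # Fail closed: unknown actions and jurisdictions are blocked.
                raise ComplianceViolation(
                    f"{action_name} not permitted for jurisdiction {jurisdiction}"
                )
            return fn(user, *args, **kwargs)
        return wrapper
    return decorator


@compliant("send_marketing_email")
def send_marketing_email(user: dict, offer: str) -> str:
    # Stand-in for a real agent tool call; it only runs if the check passed.
    return f"sent '{offer}' to {user['id']}"


print(send_marketing_email({"id": "u1", "jurisdiction": "US"}, "10% off"))
try:
    send_marketing_email({"id": "u2", "jurisdiction": "EU"}, "10% off")
except ComplianceViolation as err:
    print("blocked:", err)
```

For the EU user, the exception is raised before the action body runs, so nothing is sent and there is nothing to roll back.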

Interoperable Frameworks Across Jurisdictions

A growing number of companies will need unified frameworks that handle overlapping regulations (e.g., GDPR, HIPAA, PIPL). This requires machine-readable legal codification and cross-platform compatibility. Organizations should invest in middleware or platforms that translate frameworks into AI-executable formats.
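
Machine-readable codification can be sketched as one structured rule per regime plus a merge step for data covered by several regimes at once. The schema, the `regime_a`/`regime_b`/`regime_c` names, and every value below are hypothetical placeholders, not statements of what GDPR, HIPAA, or PIPL actually require.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    """One regime's constraints in machine-readable form (hypothetical schema)."""
    max_retention_days: Optional[int]   # None = no explicit limit
    breach_notice_hours: Optional[int]  # None = no explicit deadline
    local_storage_required: bool


# Placeholder regimes and values for illustration only.
RULES = {
    "regime_a": Rule(max_retention_days=730, breach_notice_hours=72, local_storage_required=False),
    "regime_b": Rule(max_retention_days=None, breach_notice_hours=60, local_storage_required=False),
    "regime_c": Rule(max_retention_days=365, breach_notice_hours=None, local_storage_required=True),
}


def _strictest(values) -> Optional[int]:
    """Smallest defined limit, or None if no regime sets one."""
    defined = [v for v in values if v is not None]
    return min(defined) if defined else None


def effective_rule(applicable: list[str]) -> Rule:
    """Merge overlapping regimes by keeping the strictest value of each constraint."""
    rules = [RULES[name] for name in applicable]
    return Rule(
        max_retention_days=_strictest(r.max_retention_days for r in rules),
        breach_notice_hours=_strictest(r.breach_notice_hours for r in rules),
        local_storage_required=any(r.local_storage_required for r in rules),
    )


print(effective_rule(["regime_a", "regime_b", "regime_c"]))
# → Rule(max_retention_days=365, breach_notice_hours=60, local_storage_required=True)
```

"Strictest wins" works for numeric limits and boolean requirements; obligations that genuinely conflict cannot be merged this way and still need legal review.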

Real-Time Data Rights Management

We’re moving toward systems where individuals can manage their data rights dynamically: revoking consent, limiting processing, or auditing usage through intuitive dashboards. This will reshape customer experience strategies, especially in the consumer products and e-commerce sectors, and will require real-time data architecture.
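
A real-time consent store might look like the following minimal in-memory sketch. The `ConsentRegistry` class and its method names are hypothetical; a production system would persist this state and propagate changes to every downstream processor.

```python
from datetime import datetime, timezone


class ConsentRegistry:
    """Hypothetical in-memory store of per-person, per-purpose consent."""

    def __init__(self):
        self._state: dict[tuple[str, str], bool] = {}
        # Timestamped history so individuals can audit how their consent changed.
        self.history: list[tuple[datetime, str, str, bool]] = []

    def _record(self, person_id: str, purpose: str, granted: bool) -> None:
        self._state[(person_id, purpose)] = granted
        self.history.append((datetime.now(timezone.utc), person_id, purpose, granted))

    def grant(self, person_id: str, purpose: str) -> None:
        self._record(person_id, purpose, True)

    def revoke(self, person_id: str, purpose: str) -> None:
        self._record(person_id, purpose, False)

    def is_allowed(self, person_id: str, purpose: str) -> bool:
        # Checked at processing time, so a revocation takes effect immediately.
        # Default deny: no recorded consent means no processing.
        return self._state.get((person_id, purpose), False)

    def history_for(self, person_id: str) -> list[tuple[datetime, str, str, bool]]:
        return [entry for entry in self.history if entry[1] == person_id]


registry = ConsentRegistry()
registry.grant("u1", "personalization")
print(registry.is_allowed("u1", "personalization"))  # True
registry.revoke("u1", "personalization")
print(registry.is_allowed("u1", "personalization"))  # False
```

Because processing code asks `is_allowed` at the moment of use instead of caching consent, a toggle in a user-facing dashboard changes behavior on the very next request.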

Auditable Agent Behavior Logs

Legal teams will demand verifiable logs of what AI agents saw, processed, and decided, creating a new compliance artifact: the agent audit trail. Solutions that can surface these logs on demand will reduce litigation risk and support ethical AI governance.
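
An agent audit trail can be made tamper-evident by hash-chaining its entries, so that each record commits to the one before it. The `AgentAuditTrail` class and its field names are a hypothetical sketch built only on Python’s standard library.

```python
import hashlib
import json
from datetime import datetime, timezone


class AgentAuditTrail:
    """Hypothetical append-only log in which each entry's hash covers the previous
    entry's hash, so editing an entry or removing one from the middle breaks the chain."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries: list[dict] = []

    @staticmethod
    def _digest(entry: dict) -> str:
        # SHA-256 over a canonical JSON encoding of every field except the hash.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode("utf-8")).hexdigest()

    def record(self, agent_id: str, inputs_seen: list[str], action: str, decision: str) -> dict:
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "agent_id": agent_id,
            "inputs_seen": list(inputs_seen),  # what the agent saw
            "action": action,                  # what it processed
            "decision": decision,              # what it decided
            "prev_hash": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and link; False means the log was altered."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


trail = AgentAuditTrail()
trail.record("claims-agent", ["claim:123"], "score_claim", "approve")
trail.record("claims-agent", ["claim:124"], "score_claim", "refer_to_human")
print(trail.verify())  # True
trail.entries[0]["decision"] = "deny"  # simulate tampering
print(trail.verify())  # False
```

Chaining detects edits, but on its own it cannot detect truncation of the newest entries or a wholesale rewrite of the chain; periodically anchoring the latest hash in an external system is a common mitigation.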

Synthetic Data Governance Models

As synthetic data becomes a tool to train AI while preserving privacy, frameworks will need to evolve to certify and regulate this data type. Forward-looking firms will build dual-layered governance models: one for real data, and another for synthetic and generated data sets.

Related Terms

- Data Governance

- Regulatory Compliance

- Access Control

- AI Risk Management

- Sensitive Data

- Agent Audit Trail

- Consent Management Systems