In this post, we look at what makes AI adoption uniquely challenging in insurance and how to unlock progress through cultural alignment, not just technical integration.

A 2025 report from Yooz found that 1 in 7 employees outright refuse to use new workplace tools, and 39% consider themselves reluctant adopters. More than half (51%) said technology rollouts often disrupt operations instead of improving them.

Gallup’s 2024 study paints a similar picture: nearly 70% of employees never use AI, and just 10% engage with AI tools on a weekly basis, even though two-thirds believe AI will positively impact their work. Only a third of respondents say their organization has begun integrating AI into daily operations.

In insurance, the numbers tell a similarly conflicted story. According to the State of AI Adoption in Insurance 2025 survey by Roots, over 90% of insurers are actively exploring or piloting AI capabilities, and 82% of executives view AI as a top strategic priority. Yet only 22% have managed to successfully deploy AI into production.

The appetite is there. So is the investment. But the adoption is not. At least not at scale.

In many cases, the technology itself is sound, with promising pilot programs and compelling business cases. But somewhere between strategy and execution, momentum stalls; the rollout goes quiet, and tools sit idle.

What happens next is harder to quantify but deeply felt: adjusters revert to legacy workflows, underwriters second-guess AI-generated scores, and operations teams spend more time managing post-implementation chaos than benefiting from automation. The result isn’t always resistance in the traditional sense; it’s subtler than that. It’s passive non-adoption. Hesitation disguised as pragmatism, shaped by unclear roles, missing context, or tools that feel imposed rather than integrated.

And over time, this silence compounds into something costly: slower returns on innovation, disillusioned teams, and a creeping distrust in the very tools meant to transform the business. These aren’t just signs of technical failure; they’re signals of cultural misalignment.

To make AI work in insurance, we have to start there.

Why This Happens in Insurance

Cultural resistance to AI isn’t unique to insurance; it’s a widespread challenge across industries. Many employees hesitate to engage with AI not because they reject technology, but because they fear disruption, don’t understand the tools, or haven’t been shown how these systems meaningfully support their work. According to McKinsey, up to 70% of all change programs fail, largely due to employee resistance and lack of leadership support. The anxiety is often practical: the World Economic Forum projected that AI and automation would displace 85 million jobs by 2025, even as they created 97 million new ones. That tension, between long-term benefits and short-term uncertainty, shapes how employees react. And when nearly half of those who use AI report receiving no training on how to apply it to their role (Gallup, 2024), hesitation turns into disengagement.

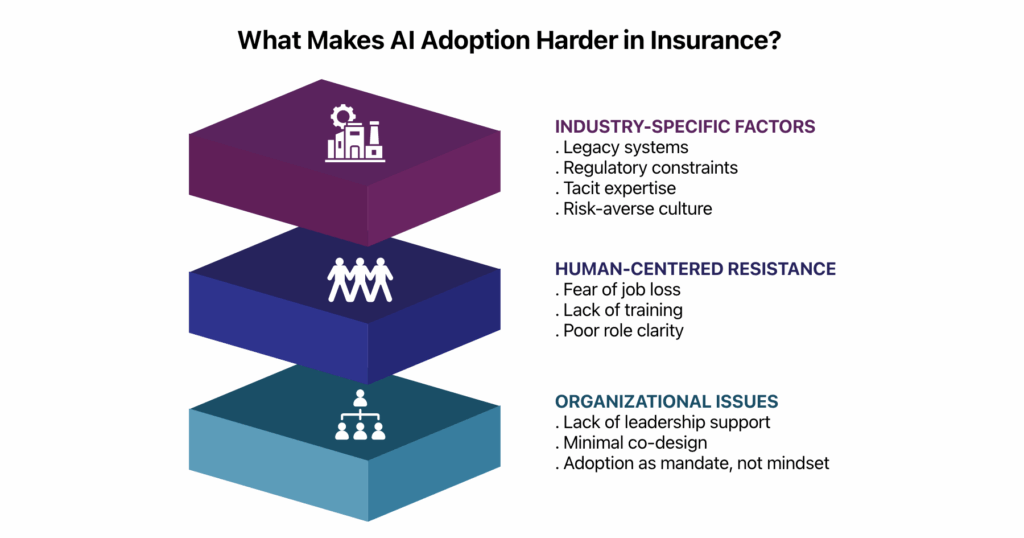

But in insurance, those general challenges are compounded by deep-rooted industry dynamics that make resistance more entrenched and more understandable.

- Legacy systems are a primary obstacle. Many insurers operate on decades-old infrastructure that wasn’t built to support dynamic, AI-enabled workflows. Even when modern tools are introduced, they often need to coexist with inflexible back-end platforms, which leads to patchwork integrations, duplicated effort, and user fatigue.

- Regulatory scrutiny also shapes behavior. Insurance professionals operate in a high-stakes, compliance-driven environment where decisions must be traceable, consistent, and explainable. If AI introduces black-box logic or outputs that can’t be easily justified, teams are likely to default to manual methods, cautious of consequences.

- Tacit expertise is another key factor. Underwriters, claims adjusters, and actuarial teams have built careers on judgment honed through experience: insights that often aren’t formally codified but are central to daily decision-making. When AI is introduced without respecting this embedded knowledge, it can feel reductive or dismissive.

- A deeply risk-averse culture in insurance slows progress. That mindset serves the industry well when assessing liability or pricing coverage, but it also creates inertia when experimenting with unfamiliar technologies. Many teams are more comfortable optimizing what exists than trialing something new, especially when the change feels imposed rather than co-created.

What seem like irrational blockers are, in fact, perfectly rational responses to how AI is often introduced: without transparency, collaboration, or a meaningful link to how insurance professionals actually work.

The Cost of Adoption Without Alignment

When AI fails to take root, the consequences aren’t always dramatic, but they are persistent. In many cases, the organization keeps moving forward, but without momentum or measurable return.

Some of these costs are immediately visible:

- Underutilized investments in platforms, licenses, and pilots that never scale

- Operational inefficiencies as hybrid workflows introduce rework rather than remove it

- Frustrated teams who experience AI as disruption without payoff

- Inconsistent customer experiences as automation delivers mixed results across channels

But the more lasting impact comes when AI is adopted without confronting cultural resistance. When implementation is seen as a tech rollout instead of a change-management initiative, the friction becomes harder to see but more corrosive over time:

- Trust breaks down between leadership and frontline teams who feel sidelined by “top-down tools”

- Talent disengages when employees fear being judged by or replaced by opaque systems

- Innovation stalls, not because of lack of ideas, but because new solutions arrive faster than teams can absorb them

Over time, this creates a perception that transformation efforts are performative, not practical. And once that credibility erodes, it’s harder to rebuild than any technical infrastructure.

Because cultural resistance can shape how people interpret every future initiative, organizations need to build alignment before deployment, not after.

Making AI Stick: What Insurance Teams Actually Need

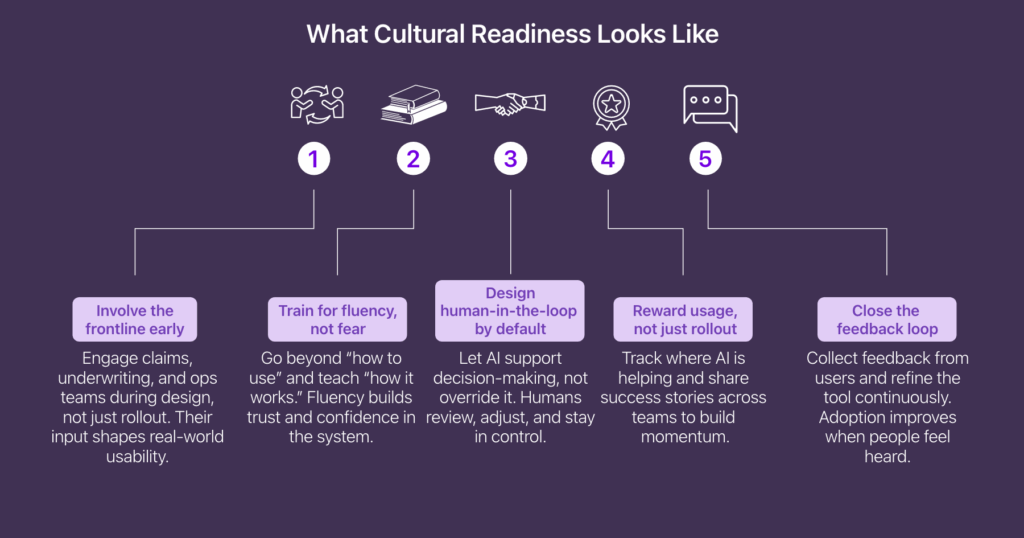

Cultural resistance doesn’t disappear with better UX or more training videos. It fades only when people feel confident, included, and empowered in the change process. For AI adoption to succeed, especially in a domain as trust- and judgment-driven as insurance, it needs to be more than just technically sound; it must be culturally aligned.

At Fulcrum Digital, we’re seeing that alignment emerge by design, not by accident. Rather than introducing agentic AI into the most entrenched workflows from day one, we’re beginning in areas where resistance is naturally lower, like customer onboarding, document summarization, and sales assistance. These are spaces where intelligent recommendations can help streamline front-end experiences without disrupting the deep domain expertise that underwriting and claims professionals bring to the table.

Even in these early-stage implementations, the design principle is the same: AI as collaborator, not controller. For example, FD Ryze integrates into the client’s environment in ways that surface insights without taking decision-making away from human experts. Sales agents receive product suggestions, not scripts. Customers get clearer summaries, not one-size-fits-all answers. And crucially, these recommendations are assistive rather than authoritative, keeping people in the loop and in charge.

This measured, intentional path is how trust gets built. By starting where AI adds value without overstepping, and by giving users a say in how these tools evolve, adoption becomes a co-owned process rather than a top-down initiative. And as these systems move into production, the learnings will shape how agentic AI can be introduced more confidently into insurance’s core workflows, on the industry’s terms instead of just technology’s timeline.

For organizations looking to move beyond stalled pilots and toward sustained adoption, here’s where to start: engage teams early, design for trust at every stage, and build systems where AI doesn’t just coexist with human expertise but actively enhances it. For AI to truly scale in insurance, adoption must be woven into the cultural fabric of how work gets done.