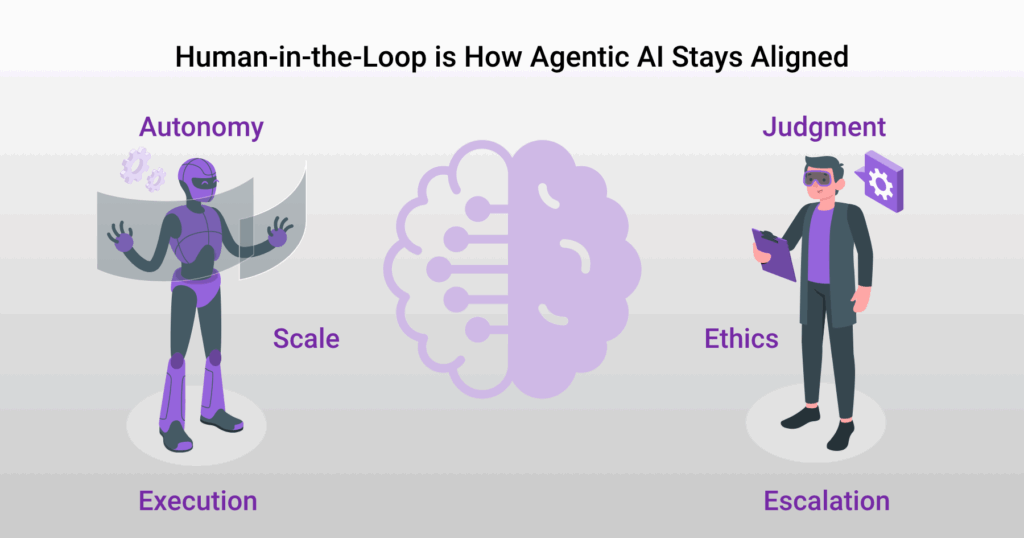

Agentic AI has changed the game in financial services. But that doesn’t mean we bench the humans.

Agentic AI has entered the financial system with the kind of momentum that rewrites workflows overnight. What began as automation has quickly evolved into autonomy: fraud detection engines that trigger account freezes, credit models that make lending decisions in seconds, and onboarding agents that flag risk faster than most teams can react.

These are more than predictive models. They are autonomous agents: goal-oriented systems capable of initiating actions across financial workflows. But this shift comes with an inconvenient truth: autonomy isn’t an achievement in itself. It’s a liability, an AI risk that scales faster than most safeguards unless human judgment stays in the loop.

For all the talk of scale and speed, financial services doesn’t reward recklessness. It rewards resilience. When billions move in milliseconds and the cost of a false positive isn’t just lost revenue but reputational damage, oversight isn’t overhead. It’s insurance.

Human-in-the-Loop isn’t a relic of old systems. It’s how forward-looking institutions prevent black-box decisioning from becoming a systemic vulnerability. Because in finance, the greatest risk doesn’t come from moving too slowly. It comes from delegating too much, too soon, to something you can’t fully see.

Human-in-the-Loop, or HITL, refers to systems in which automated decisions are reviewed by, or escalated to, humans at critical junctures. In financial services, these junctures often include fraud detection, credit scoring, anti-money laundering (AML), customer onboarding and know-your-customer (KYC) checks, algorithmic trading, and insurance claims processing: areas where the cost of an unchecked error isn’t just technical, but financial, ethical, and reputational.

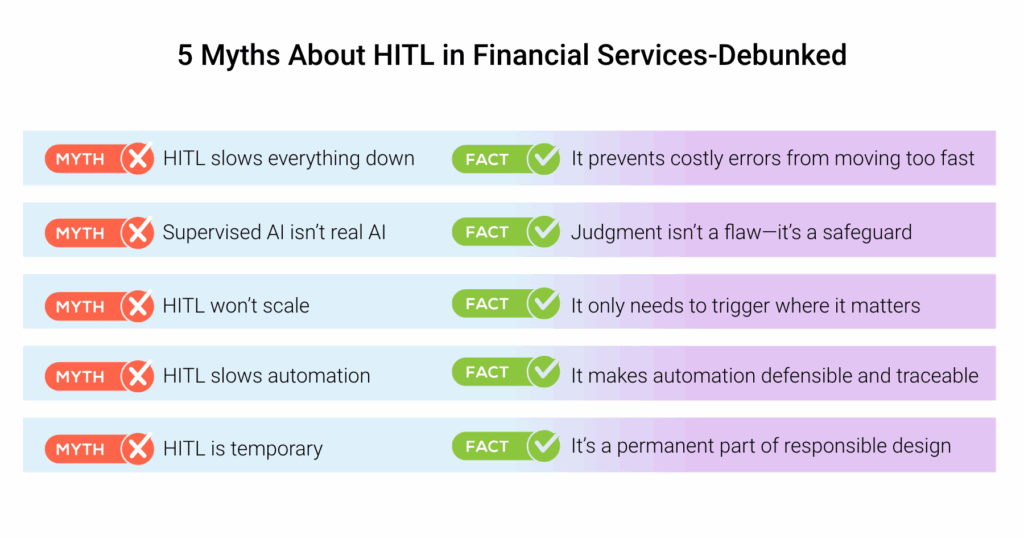

Still, for all its necessity, Human-in-the-Loop has a PR problem. Somewhere between the push for automation and the obsession with scale, HITL got branded as a bottleneck: legacy thinking in a future-facing world.

It’s time to challenge that mythology.

Myth #1: HITL Slows Everything Down

Misconception: Speed Over Risk

In an industry built on throughput, the allure of AI is speed: faster approvals, faster fraud detection, faster customer service and onboarding. But the more autonomous the system, the more critical it becomes to get things right, because its mistakes aren’t just flagged; they’re acted upon. In financial services, speed without friction isn’t always a win, especially when the cost of getting it wrong is measured in regulatory penalties and reputational collapse.

Take KYC and AML processes. Agentic systems can now freeze accounts or flag suspicious activity without delay, but the very speed that makes them valuable is also what makes oversight essential. Manual review, often criticized as a drag on onboarding timelines, is the same friction that stops sanctioned individuals, shell entities, and synthetic identities from slipping through. In 2023 alone, banks globally paid over $5 billion in AML-related fines, many tied not to system failures but to breakdowns in human diligence and oversight.

AI tools can flag anomalies, but it’s human analysts who determine whether those anomalies are false positives or systemic threats. When the stakes include license revocation, billion-dollar fines, or breaches of compliance requirements, a few extra minutes (or hours) aren’t inefficiency. They’re the cost of staying compliant.

Myth #2: If It Needs Supervision, It’s Not Real AI

Misconception: Autonomous Means Unsupervised

One of the most persistent illusions when it comes to AI solutions for finance is that the more powerful the system, the less it should need human involvement. But machine learning, no matter how advanced, doesn’t eliminate judgment; it demands alignment.

Agentic systems can recommend credit limits, flag fraud patterns, and even trigger trading actions based on real-time signals. But none of that absolves institutions from accountability. In fact, when an autonomous system makes a decision that affects someone’s financial future, whether it’s declining a mortgage or freezing a transaction, someone still has to answer for that call.

The UK’s Financial Conduct Authority, for example, has repeatedly emphasized the importance of explainability in AI-driven decisions, particularly in credit and lending contexts. The message is clear: If a customer challenges a decision, “the AI said so” doesn’t hold up in court or with regulators.

Agentic AI isn’t diminished by human supervision. It’s anchored by it.

Myth #3: HITL Won’t Scale

Misconception: People Are the Bottleneck

In financial services, scale is a sacred word. Institutions process thousands of transactions per second, monitor vast portfolios, and manage millions of customer interactions daily. So when “Human-in-the-Loop” lands in that environment, skeptics scoff: surely humans can’t keep up.

But that’s not how agentic systems are designed.

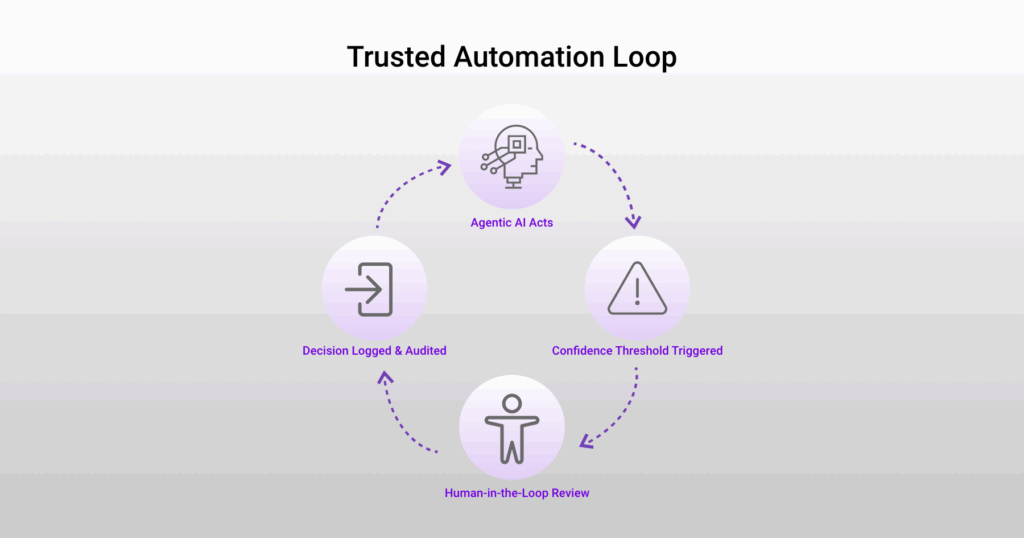

Modern architectures don’t ask humans to oversee everything. They’re built to trigger human intervention in financial AI workflows only where it matters most: in edge cases, escalations, or ethical gray zones. Think of HITL as a tripwire: embedded judgment that triggers only when automated confidence falls below a safe threshold.
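
To make the tripwire idea concrete, here is a minimal Python sketch of confidence-based routing. Everything in it is an assumption for illustration: the `AgentDecision` schema, the flag names, and the threshold values are hypothetical, and it is not a description of any particular vendor’s platform. Cases the model is confident about are handled automatically; low-confidence or policy-flagged cases are escalated to a person.

```python
from dataclasses import dataclass, field
from enum import Enum


class Route(Enum):
    AUTO_APPROVE = "auto_approve"
    AUTO_BLOCK = "auto_block"
    HUMAN_REVIEW = "human_review"


@dataclass
class AgentDecision:
    """An agent's proposed action on one transaction (hypothetical schema)."""
    transaction_id: str
    fraud_score: float  # estimated probability of fraud, 0.0 to 1.0
    confidence: float   # model's confidence in that estimate, 0.0 to 1.0
    flags: set = field(default_factory=set)  # policy flags, e.g. {"sanctions_hit"}


# Hypothetical policy values; real thresholds would be calibrated and documented.
CONFIDENCE_FLOOR = 0.90
BLOCK_SCORE = 0.80
ALWAYS_ESCALATE = {"sanctions_hit", "vulnerable_customer"}


def route(decision: AgentDecision) -> Route:
    """Tripwire routing: a human sees only flagged or low-confidence cases."""
    # Hard policy flags (ethical or regulatory gray zones) always go to a person.
    if decision.flags & ALWAYS_ESCALATE:
        return Route.HUMAN_REVIEW
    # The tripwire itself: automated confidence is below the safe threshold.
    if decision.confidence < CONFIDENCE_FLOOR:
        return Route.HUMAN_REVIEW
    # Otherwise the agent acts on its own.
    if decision.fraud_score >= BLOCK_SCORE:
        return Route.AUTO_BLOCK
    return Route.AUTO_APPROVE


if __name__ == "__main__":
    queue = [
        AgentDecision("tx-1", fraud_score=0.02, confidence=0.99),
        AgentDecision("tx-2", fraud_score=0.95, confidence=0.97),
        AgentDecision("tx-3", fraud_score=0.55, confidence=0.60),
        AgentDecision("tx-4", fraud_score=0.10, confidence=0.98, flags={"sanctions_hit"}),
    ]
    for d in queue:
        print(d.transaction_id, route(d).value)
    # tx-1 auto_approve / tx-2 auto_block / tx-3 human_review / tx-4 human_review
```

The escalation checks run before the automated paths, so a confident model can never override a hard policy flag. In practice, the thresholds would be tuned against review capacity and measured error rates.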

Take the recent CFPB lawsuit against Zelle’s operator, Early Warning Services, and three of the banks that own it (Bank of America, JPMorgan Chase, and Wells Fargo) for failing to protect consumers from widespread fraud. Zelle users lost an estimated $870 million over seven years, largely due to insufficient fraud safeguards and delayed human review.

Agentic AI can detect and even block suspicious transactions in milliseconds. But resolving the fallout (approving a refund, restoring trust, updating high-risk profiles) still requires human analysts, sometimes a smaller team rather than a larger one. Agents narrow the field; humans handle the nuanced finish.

Fulcrum Digital’s agentic AI platform, FD Ryze, has been helping financial institutions do just that: deploying fraud detection agents that flag anomalies in real time and auto-triage threats while preserving space for human judgment where ambiguity remains. It’s not automation or oversight. It’s both, by design.

Myth #4: It Undermines Automation

Misconception: Trust Slows Tech Down

There’s a belief, especially in tech-forward boardrooms, that Human-in-the-Loop is an insult to AI innovation: that if an agent needs supervision, it isn’t really automating anything. But that logic holds only if automation is measured by volume rather than by defensibility.

In financial services, explainability isn’t a bonus feature. It’s a requirement. Regulators don’t just want to know that a decision was made; they want to know how, why, and what guardrails were in place when it happened. Agentic AI systems that operate without embedded oversight can create what auditors call a black-box liability: decisions that can’t be traced, justified, or responsibly challenged.

It’s one thing when a system recommends a trade. It’s another when it executes one. And when that execution goes wrong because of drift, bias, or a malformed input, someone still has to answer for it.

That’s why institutions are shifting from post-hoc monitoring to embedded governance, designing AI agents with explainability, escalation protocols, and audit checkpoints from day one. HITL doesn’t undermine automation. It protects it. It gives it a memory. A signature. A spine.
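
One way to give automation a “memory” and a “signature” is a tamper-evident audit trail. The sketch below is a hypothetical design, not any regulator’s required format: each decision record (what was done, the reasons, and which reviewer signed off, if any) is hash-chained to the one before it with SHA-256, so quietly editing history after the fact becomes detectable. The function names and record fields are illustrative assumptions.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import List, Optional

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(record: dict, prev_hash: str) -> str:
    """Hash the record together with the previous entry's hash (hash chaining)."""
    payload = json.dumps(record, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log: List[dict], decision_id: str, action: str,
                 reasons: List[str], reviewer: Optional[str]) -> dict:
    """Record what was decided, why, and who signed off (None = fully automated)."""
    record = {
        "decision_id": decision_id,
        "action": action,
        "reasons": reasons,  # the explanation an auditor would ask for
        "reviewer": reviewer,
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {**record, "prev_hash": prev_hash, "hash": _digest(record, prev_hash)}
    log.append(entry)
    return entry


def verify(log: List[dict]) -> bool:
    """Recompute every hash; editing any past entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        record = {k: v for k, v in entry.items() if k not in ("prev_hash", "hash")}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(record, prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    audit_log: List[dict] = []
    append_entry(audit_log, "tx-2", "auto_block", ["fraud_score 0.95 >= 0.80"], reviewer=None)
    append_entry(audit_log, "tx-3", "release", ["analyst confirmed known merchant"], reviewer="analyst-42")
    print(verify(audit_log))          # True
    audit_log[0]["action"] = "allow"  # rewrite history after the fact
    print(verify(audit_log))          # False
```

A production system would also need durable, access-controlled storage and signed identities for reviewers; the hash chain only makes tampering visible, it does not prevent it.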

Because when the automation is real, so is the accountability.

Myth #5: It’s Just Temporary

Misconception: HITL Is a Crutch, Not a Design Choice

There’s a common narrative in AI roadmaps: that human oversight is a short-term necessity, a scaffolding we’ll eventually outgrow. But that assumes financial systems will ever operate in a world free of edge cases, ethical trade-offs, or ambiguity.

They won’t.

Agentic AI doesn’t just operate on data; it operates in context, and context evolves. Without human domain knowledge, even the best-trained models will misread the moment. The same transaction pattern might signal fraud today and a routine payment tomorrow. A rejected loan application could be legally sound but reputationally risky. These aren’t problems you automate away. They’re problems you design around, again and again.

In fields like anti-discrimination compliance, institutions are already required to audit model outputs for fairness. In credit scoring, regulators expect clear reasoning, especially when AI is involved. And in risk underwriting, edge cases are where the actual risk lives. Removing HITL from these workflows doesn’t future-proof them. It exposes them.
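
As an illustration of what a basic fairness check on model outputs can look like, the sketch below computes an adverse impact ratio: each group’s approval rate divided by the highest group’s approval rate. The 0.8 cutoff borrows the “four-fifths” heuristic from U.S. employment-selection guidance; here it is only an assumed screening trigger for human review, not the legal test for lending. Group labels, data, and function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def approval_rates(outcomes: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """outcomes: (group_label, approved) pairs taken from the model's decisions."""
    totals: Dict[str, int] = defaultdict(int)
    approved: Dict[str, int] = defaultdict(int)
    for group, ok in outcomes:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def adverse_impact_ratios(outcomes: Iterable[Tuple[str, bool]],
                          threshold: float = 0.8) -> Dict[str, Tuple[float, bool]]:
    """Return {group: (ratio to the best-treated group, needs_human_review)}."""
    rates = approval_rates(outcomes)
    if not rates:
        return {}
    best = max(rates.values())
    if best == 0:
        # Nobody was approved, so there is no disparity in approval rates to measure.
        return {g: (1.0, False) for g in rates}
    return {g: (r / best, r / best < threshold) for g, r in rates.items()}


if __name__ == "__main__":
    outcomes = ([("A", True)] * 8 + [("A", False)] * 2
                + [("B", True)] * 5 + [("B", False)] * 5)
    for group, (ratio, review) in adverse_impact_ratios(outcomes).items():
        print(group, round(ratio, 3), "needs review" if review else "ok")
    # A 1.0 ok
    # B 0.625 needs review
```

A flag from a check like this doesn’t prove discrimination; it tells the institution where a human analyst needs to look at the features, data, and business justification behind the gap.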

The best financial institutions know this. They aren’t trying to delete the human. They’re building systems that invite the human back in at the right moment, with the right authority, and with the right context.

Human-in-the-Loop isn’t a temporary compromise. It’s what makes long-term autonomy possible.

The true risk in financial services isn’t letting humans slow the system down; it’s letting machines speed past the point of accountability. The real mistake is thinking oversight is optional. The real opportunity? Designing agentic systems that scale with trust built in: systems where speed, compliance, and human judgment aren’t at odds but are architected to work together. That’s not a bottleneck. It’s the future of financial infrastructure.