If AI is in the system, integrity must be in the architecture.

Across college campuses, a theater of contradiction is playing out. Students are warned, surveilled, and penalized for using artificial intelligence in their coursework. Yet the same institutions sounding the alarm are quietly embedding AI into their own operations: screening applicants, forecasting dropouts, shaping curricula. The message is clear, if unspoken: we may use the machine, but you may not.

This isn’t a policy gap. It’s a credibility crisis.

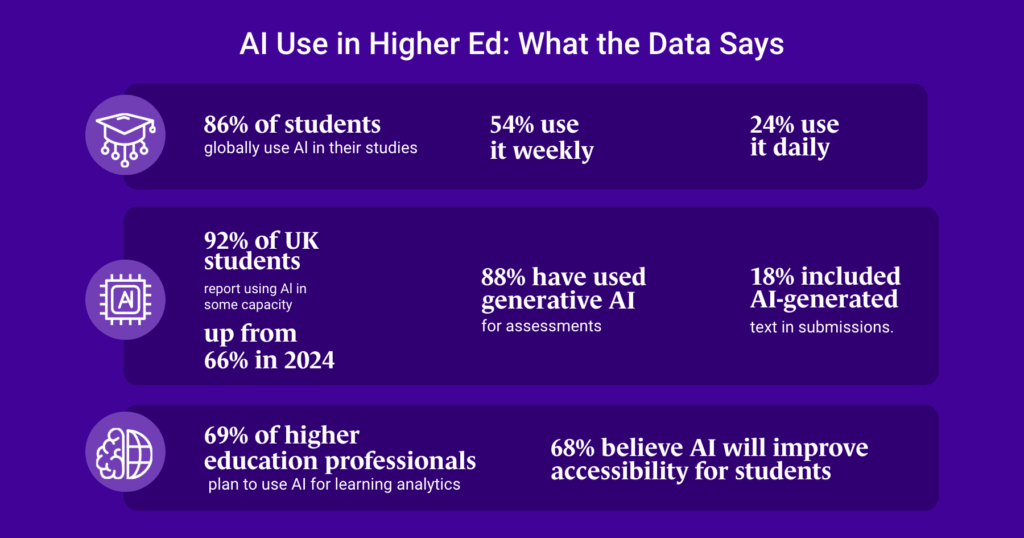

Higher education has long prided itself on cultivating independent thought and moral clarity. But in its response to AI, it increasingly models the opposite: ambiguity, inconsistency, selective enforcement. While universities adopt AI-powered systems to identify at-risk students or streamline grading, students are being disciplined for using similar tools to organize or draft their own work. In a 2024 global survey by the Digital Education Council, over 85% of students reported using AI in some form for academic support; nearly one in four said they use it daily. The lines are not just blurry. They’re broken.

To be clear, this is not a call to flood classrooms with AI. It is a call for intellectual coherence. Agentic AI (systems that act with autonomy to assist, adapt, or decide) is no longer hypothetical. It is embedded deep in the infrastructure of higher education. And if it’s here, integrity can’t be a one-sided virtue. It must apply to the systems that govern students, not just to the students themselves.

The Double Standard We Don’t Acknowledge

In May 2025, a Maryland high school student was finally cleared of an academic integrity violation, nearly a year after being accused of using AI to cheat. The evidence? A dubious reading from an AI-detection tool. The result? Months of academic limbo and reputational damage before the case was quietly dropped.

At Northeastern University, a student learned her professor had used ChatGPT to generate lecture materials despite explicitly banning AI use in the classroom. When she requested a tuition refund, the university declined. The professor faced no formal consequence.

These cases aren’t anomalies. They’re symptoms of a system that disciplines students based on speculative software, while faculty and institutions adopt AI behind a curtain of discretion. According to the University of Pittsburgh’s Teaching Center, AI-detection tools like Turnitin’s are so unreliable that the university disabled them altogether in June 2023, just two months after the AI detector was introduced.

And yet, such tools still shape student outcomes across campuses.

This isn’t about endorsing or curbing AI use. It’s about acknowledging the ethical asymmetry in how it’s governed. When only one side of the system is held to account, we don’t preserve integrity; we perform it. And students, more observant than they’re often given credit for, take note.

Integrity can’t be a one-way mirror. If we’re serious about preserving it, we need to start applying it everywhere it matters, especially where no one is watching.

Integrity as Design, Not Detection

For years, academic integrity has functioned as a checkpoint: a rule enforced after the fact, often with punitive consequences. But in an era where intelligent systems influence student outcomes long before a paper is submitted or a test is taken, integrity must evolve from reactive policing to intentional design.

What would it mean to design for integrity, rather than chase its violations?

It would mean transparency by default. If an institution uses agentic AI to flag at-risk students or steer interventions, it should explain how and why. If faculty rely on AI-generated teaching materials, that too deserves daylight. Not to invite critique, but to model the very honesty we demand of students.

It would also mean embedding trust into the feedback loop itself. Rather than catch and punish after submission, systems could be configured to surface ethical risks early, offering guidance, not just detection. According to EDUCAUSE, nearly 70% of higher ed professionals anticipate using AI for learning analytics. The question isn’t whether these systems will shape behavior. It’s whether they’ll do so ethically.

This is not a call to automate morality. It’s a call to embed integrity into the architecture of how decisions are made. Agentic AI, if handled with clarity and care, can help build systems that are accountable, participatory, and aligned, not just efficient.

If higher education wants to preserve trust in the age of intelligent systems, it must stop treating integrity as a finish line. It’s the blueprint.

What Agentic AI Makes Possible

Agentic AI is not arriving at the gates of higher education. It’s already inside.

To understand its promise in the field of learning, we have to look beyond term papers and plagiarism reports. These systems were never meant to write for students. Their real power lies in how they work with institutions: quietly, continuously, and often where human attention fades.

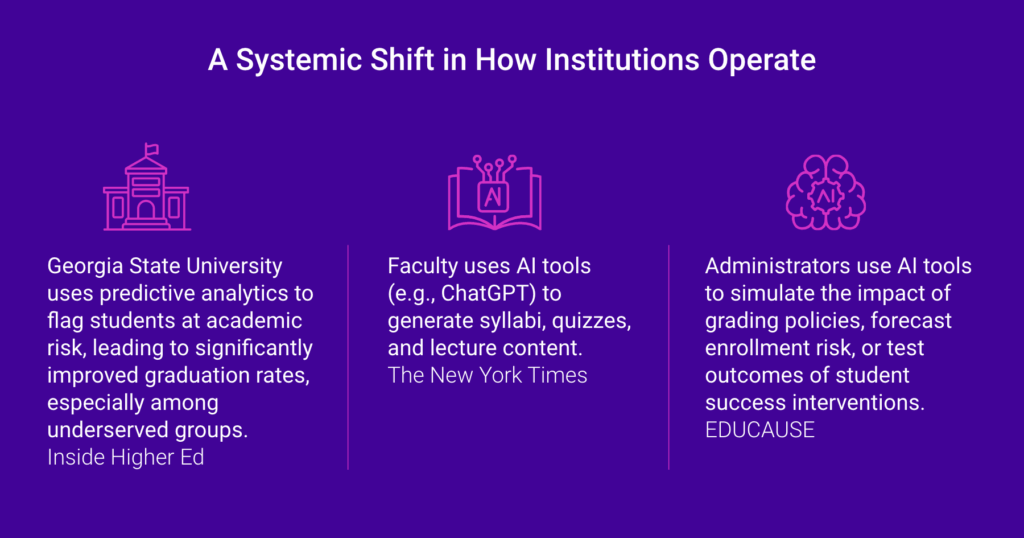

Imagine a system that senses when a first-generation student starts to disengage, not to punish but to prompt meaningful outreach. Or an advising tool that learns a student’s academic rhythms and suggests pathways tailored to both their ambitions and constraints. These aren’t hypotheticals. At Georgia State University, predictive analytics systems have already improved graduation rates among underserved students by flagging trouble signs early. That’s what agentic systems can do: interpret data, take initiative, and adapt over time.

For faculty, they can surface patterns that identify conceptual struggle, not just late submissions. For administrators, they can model the downstream impact of policy decisions, or simulate whether a new grading framework might close, or widen, equity gaps. And for students, when introduced with care, they can offer scaffolding, feedback, and guidance without bias, without judgment, and without waiting for office hours.

Agentic AI is not a substitute for academic values. But it may be the most powerful tool we’ve had in decades to actually uphold them, at scale and across systems too large or too brittle for human vigilance alone.

Rebuilding Trust Isn’t Optional. It’s the Work.

Academic integrity has never been a static code. It’s a living agreement between learners and institutions, between individuals and systems, and it must evolve alongside the technologies now mediating that relationship. To pretend otherwise is to ignore the reality already underway.

If integrity is to mean anything now, it must apply not just to student authorship, but to institutional authorship as well. Who built the system? What assumptions shaped its logic? Where does human judgment begin and where does it defer?

Through FD Ryze, we’ve seen firsthand how institutions are beginning to confront these questions, not only through policy but through the architecture of agentic systems designed to reflect clarity, equity, and trust. The answers are still emerging. But the willingness to ask openly, systemically, and without deflection is where credibility begins.

The future of education isn’t threatened by intelligent tools. It’s threatened by a failure to match them with intelligent principles.

And that’s not a footnote to the academic mission. That’s the test of whether it still has one.