327% projected increase in agentic AI adoption over the next two years. (Salesforce, 2025)

30% anticipated productivity gains from AI agents. (Medium, 2025)

68% of customer service interactions likely to be handled by agentic AI by 2028. (Cisco, 2025)

96% of enterprises expanding their use of AI agents. (Cloudera, 2025)

33% of enterprise software applications to include agentic AI by 2028. (Gartner, 2025)

These figures aren’t idle guesses. They come from current industry research and forecasts. Agentic AI isn’t a distant future concept; it’s rapidly becoming an integral part of today’s enterprise landscape. As autonomous AI agents begin to collaborate with human teams, redefining workflows and decision-making processes, the question isn’t whether to adopt this technology, but how to integrate it effectively and ethically. This piece explores what it means to embrace agentic AI as a teammate and collaborator in the modern workforce.

Morale

Agentic AI doesn’t wait to be told what to do. It initiates and completes tasks across tools, systems, and workflows without prompts, without check-ins, and often without visibility. That’s part of its value. But it’s also part of the tension. When a teammate isn’t human, but still delivers outcomes before the day starts, it quietly redefines what effort looks like. And when effort becomes invisible, morale becomes fragile.

Integrated thoughtfully, agentic AI can become a morale booster, not a morale killer. As HR Executive reports, early enterprise deployments show agents autonomously handling end-to-end admin flows—assembling meeting briefs, updating CRMs, logging action items—without requiring constant configuration or oversight. Employees reclaim time for more cognitively rewarding work, while still owning the moments that require judgment, empathy, and relationship-building.

Productivity

Agentic AI is often introduced under the banner of productivity. But not all productivity gains are created equal. When an autonomous agent triages incoming requests, routes approvals across departments, or reprioritizes tasks based on changing business logic, yes, output increases. But so does the complexity of tracking where work originates, who touched what, and whether human attention is still required. Efficiency without clarity can breed chaos.

The real productivity unlock comes not from speed alone, but from intelligent orchestration. According to Deloitte, early adopters of agentic platforms like its Zora AI are seeing agents handle multi-system tasks, such as resolving service requests that span CRM, inventory, and billing systems, without human coordination at each step. This frees teams from micromanaging flows and allows them to focus on customer interaction, exception handling, and strategic execution.

Creativity

There’s a persistent fear that AI will dull creativity. With agentic AI, the concern sharpens: what happens when the system doesn’t just suggest ideas but starts producing first drafts, iterating designs, or proposing campaign strategies without being asked? It can feel like the beginning of creative redundancy. But in reality, it’s the beginning of creative delegation.

Agentic AI isn’t creative, but it empowers those who are. Adobe’s approach is simple: free people from friction so they can focus on what only they can do. Whether it’s Acrobat analyzing sales docs or Photoshop suggesting edits, agentic systems accelerate the start of the creative process: proposing, refining, and executing at your direction. Static templates feel dated by comparison. These workflows begin with insight, act with context, and give creative professionals back time to create with intent.

Ownership & Accountability

When a teammate takes action without being told, the question isn’t just “What got done?” It’s “Who’s responsible?” Agentic AI systems that reroute workflows, reprioritize escalations, or initiate communications blur traditional lines of accountability. If the outcome is positive, who gets credit? If something breaks, who takes the fall? These aren’t philosophical questions; they’re operational ones.

The challenge is that agentic AI doesn’t just execute tasks. It triggers follow-on actions, often across multiple systems and functions. For instance, ServiceNow has introduced AI agents that autonomously manage IT operations, including alert triage and root cause analysis, pulling real-time data from both ServiceNow and third-party systems to address issues instantly. This level of orchestration saves time but makes it harder to map cause and effect. Accountability can’t be traced through effort logs anymore. It has to be designed into the system.
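One way to picture accountability that is "designed into the system" is an audit trail in which every agent action names an accountable owner and points back to the action that triggered it. The sketch below is illustrative only: `AgentAction`, `AuditTrail`, and all field names are hypothetical and do not reflect ServiceNow's or any other vendor's API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional
import uuid


@dataclass(frozen=True)
class AgentAction:
    """One step taken by an agent (hypothetical record shape)."""
    agent: str                        # which agent acted
    system: str                       # where it acted (CRM, ticketing, ...)
    description: str                  # what it did
    owner: str                        # accountable human or team
    caused_by: Optional[str] = None   # id of the action that triggered this one
    action_id: str = field(default_factory=lambda: uuid.uuid4().hex)


class AuditTrail:
    """Stores actions and reconstructs the causal chain behind any of them."""

    def __init__(self) -> None:
        self._actions: Dict[str, AgentAction] = {}

    def record(self, action: AgentAction) -> str:
        # Refuse actions whose stated cause was never recorded.
        if action.caused_by is not None and action.caused_by not in self._actions:
            raise KeyError(f"unknown cause: {action.caused_by}")
        self._actions[action.action_id] = action
        return action.action_id

    def trace(self, action_id: str) -> List[AgentAction]:
        """Return the chain from the root cause to the given action."""
        chain: List[AgentAction] = []
        current: Optional[str] = action_id
        while current is not None:
            action = self._actions[current]
            chain.append(action)
            current = action.caused_by
        return list(reversed(chain))


trail = AuditTrail()
alert = trail.record(
    AgentAction("triage-agent", "monitoring", "flagged disk alert", owner="it-ops")
)
incident = trail.record(
    AgentAction("remediation-agent", "ticketing", "opened incident",
                owner="it-ops", caused_by=alert)
)
for step in trail.trace(incident):
    print(step.agent, "->", step.description, "| owner:", step.owner)
```

Because each record carries both an owner and a cause, the question "who is responsible?" can be answered by walking the chain rather than by reconstructing events after the fact.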

Leadership

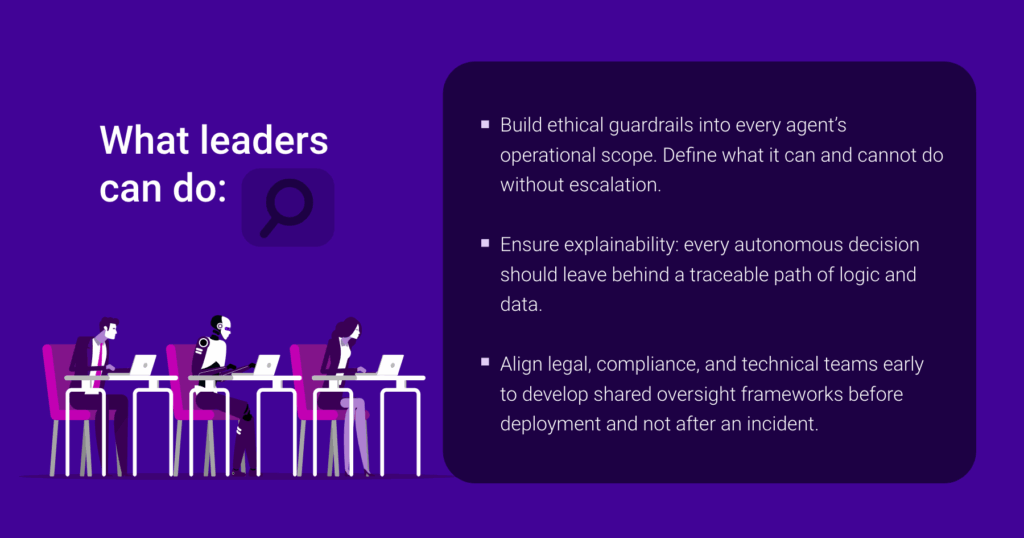

Agentic AI doesn’t just shift how work gets done; it redefines what leadership looks like. In traditional orgs, leaders manage people, tools, and outcomes. But when AI agents start operating across systems autonomously (initiating processes, resolving issues, and escalating edge cases), leaders must evolve from task managers to context setters and orchestration architects.

This isn’t hypothetical. As Harvard Business Review recently noted, leaders in AI-forward organizations are already pivoting toward what they call “collaborative intelligence models,” where their role is to create the conditions for human-agent collaboration, not dictate the execution. That means defining escalation paths, clarifying decision boundaries, and establishing when and where human judgment is required. Leaders don’t need to compete with agents on efficiency. They need to curate the systems in which agents can succeed without eroding trust or clarity.
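To make "decision boundaries" and "escalation paths" concrete, here is a minimal sketch of how a team might write them down as explicit policy. Everything in it is an assumption for illustration: the `Boundary` shape, the dollar thresholds, the role names, and the rule that unknown actions always escalate.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Optional, Tuple


class Decision(Enum):
    PROCEED = "proceed"
    ESCALATE = "escalate"


@dataclass(frozen=True)
class Boundary:
    """How far an agent may go on its own for one kind of action."""
    action: str
    max_amount: float   # largest value the agent may handle alone
    escalate_to: str    # human role that decides beyond the limit


# Hypothetical fallback for actions nobody has defined a boundary for.
FALLBACK_OWNER = "team-lead"


def decide(
    boundaries: Dict[str, Boundary], action: str, amount: float
) -> Tuple[Decision, Optional[str]]:
    """Return whether the agent may proceed, and who decides if it may not."""
    boundary = boundaries.get(action)
    if boundary is None:
        # No boundary defined: human judgment is required by default.
        return Decision.ESCALATE, FALLBACK_OWNER
    if amount > boundary.max_amount:
        return Decision.ESCALATE, boundary.escalate_to
    return Decision.PROCEED, None


policy = {
    "refund": Boundary("refund", max_amount=100.0, escalate_to="support-lead"),
    "discount": Boundary("discount", max_amount=25.0, escalate_to="sales-manager"),
}

print(decide(policy, "refund", 40.0))
print(decide(policy, "refund", 400.0))
print(decide(policy, "contract_change", 0.0))
```

The design choice worth noting is the default: anything outside a defined boundary goes to a person, so gaps in the policy surface as escalations rather than as silent agent decisions.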

Culture

Culture isn’t defined by values on a slide. It’s shaped by who (or what) gets to participate in the work. When agentic AI enters the picture, quietly completing tasks, rerouting workflows, and escalating issues without anyone assigning them, it creates a new type of team member: one that doesn’t show up on Zoom, doesn’t join retros, but still moves the work forward. That changes how teams bond, recognize effort, and build shared norms.

As Josh Bersin (global industry analyst and founder of The Josh Bersin Company) points out, many companies are unprepared for the cultural impact of agentic systems. Not because employees fear AI itself, but because they don’t know how to relate to it. Do agents get names? Can you give them feedback? Should their contributions be acknowledged in project recaps? These are questions of culture, not code. And if leaders don’t answer them, teams will fill in the gaps, either with skepticism or silence.

Staffing

Agentic AI doesn’t eliminate headcount. Instead, it reshapes what headcount is for. When autonomous agents handle cross-system coordination, task execution, and real-time decision-making, the value of human staff shifts from throughput to judgment, context, and adaptability. But unless that shift is made explicit, staffing confusion sets in: What roles still matter? Which skills are obsolete? How do you plan headcount around teammates who don’t need PTO?

According to Salesforce’s recent research, 81% of HR leaders are planning to reskill their employees to be more competitive in a market shaped by AI agents. The study also revealed that CHROs expect to redeploy nearly a quarter of their workforce worldwide as their organizations implement and embrace digital labor. This indicates a significant shift in workforce strategy, focusing on integrating agentic AI alongside human employees to enhance productivity and innovation.

Performance

Agentic AI creates output without needing breaks, recognition, or project plans. But that’s exactly what makes measuring performance more complicated. Traditional KPIs often track effort, time, or activity logs—all metrics that make less sense when autonomous agents are resolving tasks in the background or across systems. If a report is generated, an alert resolved, or a customer journey optimized, who gets credit? And how do you measure the value of invisible work?

To address this, companies are adopting new evaluation frameworks. For instance, Microsoft’s Azure AI Evaluation library introduces metrics such as Task Adherence, Tool Call Accuracy, and Intent Resolution to assess agent performance comprehensively. These metrics evaluate how well an agent’s actions align with user intent and task requirements, ensuring that autonomous decisions contribute positively to business outcomes.

Similarly, Galileo emphasizes the importance of task completion metrics and error correlation analysis. By tracking how effectively agents achieve defined objectives and identifying patterns in errors, organizations can fine-tune agent behaviors and improve reliability. This approach ensures that agents not only perform tasks efficiently but also adapt to complex, real-world scenarios.
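As a rough illustration of what task completion metrics and error pattern analysis can look like in practice, the sketch below computes a completion rate and a per-tool failure rate from a small log of agent runs. It is not Galileo's or Microsoft's API; the `AgentRun` record, the tool names, and the sample data are all invented for the example, and per-tool failure rate is only a simple stand-in for a fuller correlation analysis.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class AgentRun:
    """One logged attempt by an agent (hypothetical log shape)."""
    task: str
    completed: bool
    tools_used: Tuple[str, ...]
    error: str = ""


def completion_rate(runs: List[AgentRun]) -> float:
    """Share of runs that reached their defined objective."""
    if not runs:
        return 0.0
    return sum(run.completed for run in runs) / len(runs)


def failure_rate_by_tool(runs: List[AgentRun]) -> Dict[str, float]:
    """For each tool, the share of runs involving it that failed."""
    used: Counter = Counter()
    failed: Counter = Counter()
    for run in runs:
        for tool in set(run.tools_used):
            used[tool] += 1
            if not run.completed:
                failed[tool] += 1
    return {tool: failed[tool] / used[tool] for tool in used}


# Invented sample data for illustration only.
runs = [
    AgentRun("refund", True, ("crm", "billing")),
    AgentRun("refund", False, ("crm", "billing"), error="timeout"),
    AgentRun("lookup", True, ("crm",)),
    AgentRun("restock", False, ("inventory", "billing"), error="timeout"),
]

print("completion rate:", completion_rate(runs))
for tool, rate in sorted(failure_rate_by_tool(runs).items()):
    print(f"{tool}: {rate:.0%} of runs involving it failed")
```

A tool whose failure rate sits well above the overall rate is a reasonable first place to look when tuning agent behavior, though with a sample this small the numbers are only directional.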

Compliance & Ethics

The power of agentic AI lies in its autonomy. But autonomy without oversight is a liability. When AI agents act across departments, tools, and systems—triggering actions, making decisions, and escalating edge cases—they introduce new risks that most compliance frameworks weren’t designed to catch. Ethical ambiguity arises not from malice, but from a lack of clarity: Who approved that decision? Was bias introduced upstream? Did the agent overstep its bounds or operate as designed?

The answer lies in governance by design. As Harvard Business Review notes, AI agents already deployed in enterprise workflows are surfacing new ethical questions, especially when decisions happen without a human in the loop. Meanwhile, regulators are beginning to scrutinize AI explainability, transparency, and fairness as essential business obligations, not just technical concerns. With legislation tightening globally, agentic AI can’t just be auditable; it must be intentionally constrained and context-aware.

Agentic AI isn’t just another tool in the stack; it’s a shift in how work happens, who does it, and what it means to contribute. Organizations that treat this as a technology project will fall behind. The ones that lead will treat it as an organizational redesign—with people, process, and purpose at the center. Because this isn’t about replacing workers. It’s about rewriting the rules of collaboration between human and non-human teammates.

If you’re rethinking how AI fits into your org chart—not just your infrastructure—FD Ryze offers a platform built to help teams operationalize agentic AI across systems, roles, and goals. Autonomous, orchestrated, and ready to plug into real work.