As AI deepfakes and identity fraud surge, agentic AI is stepping up as the financial sector’s best line of defense… if it’s governed right.

Over the past decade, fraud has shifted from the margins to the mainstream of financial risk, evolving into a more structured, repeatable, and resilient threat.

In 2023, criminals stole £1.17 billion through authorized and unauthorized payment fraud in the UK, with banks blocking an additional £1.25 billion through advanced security systems. Over 2.7 million fraud cases were recorded, and according to the UK Government’s 2024 Cyber Security Breaches Survey, 50% of UK businesses suffered a cyberattack or breach in the past year, a sharp rise from 39% in 2022.

Across the pond, the US Federal Trade Commission reported that consumers in the country lost over $12.5 billion to fraud in 2024, a 25% increase year over year. Investment scams alone accounted for $5.7 billion, followed by $2.95 billion lost to imposter scams. Bank transfers and cryptocurrency were the most exploited payment channels.

Fraud has evolved into a cross-border enterprise, with “fraud-as-a-service” toolkits enabling faster, cheaper, and more scalable attacks than ever before. Artificial intelligence, and generative AI in particular, is being used to automate social engineering, produce deepfakes, and build convincing synthetic identities at scale.

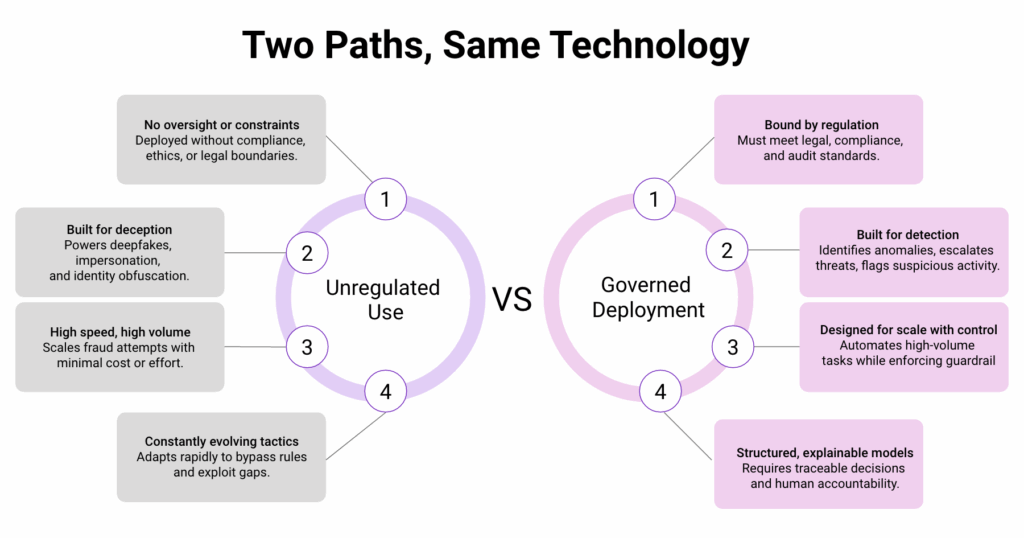

This is the new arms race: attacker AI vs. defender AI. While AI is transforming fraud, it’s also being positioned as the front line of defense. The challenge now is ensuring that the systems built to protect don’t outpace the safeguards designed to govern them.

The Attacker: Industrial-Scale Deception

The fraud landscape in 2025 is no longer defined by opportunistic actors. It is shaped by entire ecosystems. In the US alone, identity fraud cost individuals $43 billion in 2023, according to AARP, a figure that underscores just how pervasive and personal these attacks have become. Stolen credentials, synthetic identities, and deepfake toolkits are now packaged and sold like commercial software.

Generative AI has supercharged this model. Deepfake fraud has already resulted in nearly $900 million in documented losses, according to recent industry analysis. Voice cloning has emerged as one of the most dangerous vectors, with multiple high-profile incidents involving CEO impersonation, fake family emergencies, and fraudulent bank verification calls. In one case, fraudsters cloned an executive’s voice to trick a company’s CEO into transferring $243,000, and the real executive had no idea until it was too late.

These attacks are faster to execute, harder to detect, and cheaper to deploy. As the World Economic Forum warned in July 2025, AI tools are now so accessible and convincing that dangerous actors can fake virtually anything in real time.

With threat actors operating like lean startups and AI lowering the barrier to entry, the attacker’s side of the arms race is accelerating, with no sign of slowing down.

The Defender: AI as the Front Line

In response to this onslaught, financial institutions are rapidly deploying agentic AI, which combines advanced detection, authentication, and response automation, to modernize their defenses at speed and scale.

Crucial progress is happening in real-time voice fraud detection. Synthetic-audio scams have skyrocketed: deepfake fraud attempts in Europe jumped by an estimated 2,137% over three years, and AI now accounts for over 40% of identity attacks across financial services. Pindrop reports that its platform has processed over 3 million confirmed fraud events, likely preventing $2 billion in losses before any damage occurred.

On a broader scale, security teams are shifting from reactive investigations to behavioral and traffic-level analytics that look for systemic threats, spotting patterns syndicates use to test KYC and biometric systems. But even this shift is just catching up: detection capabilities consistently lag behind the attacks, with state-of-the-art systems experiencing a 45–50% drop in accuracy when faced with real-world deepfakes. Human detection fares no better, averaging just 55–60% accuracy, barely above chance.

Agentic AI is emerging as a vital layer in the financial sector defense stack, but its effectiveness depends as much on how it is governed as how it performs. The real gains lie in how institutions operationalize it, not just in how fast it reacts.

Agentic AI in Practice

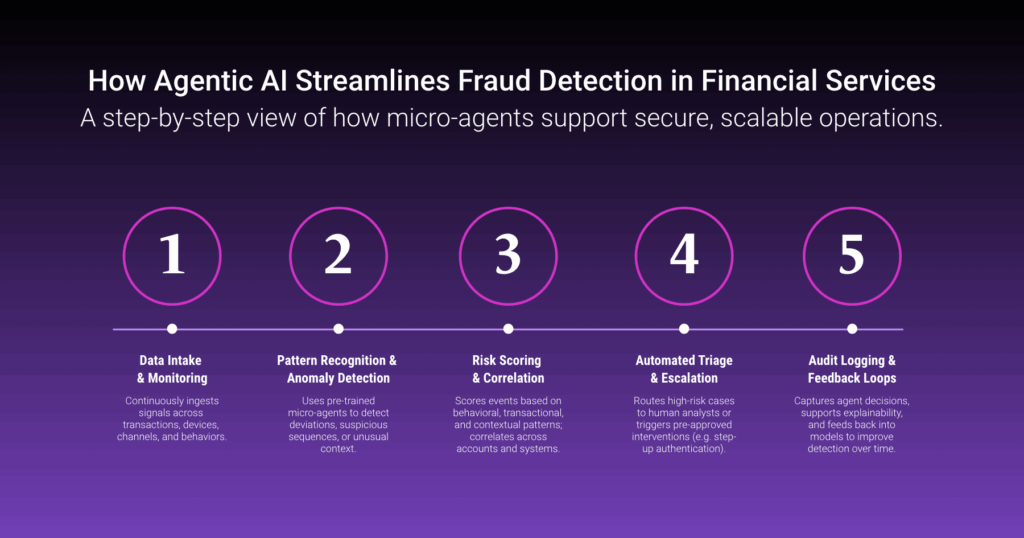

In today’s financial systems, agentic AI is already working behind the scenes: triaging fraud alerts, automating compliance checks, and flagging suspicious behavior across vast customer networks.

At firms like Apex Fintech Solutions, agentic models support real-time threat detection across high-volume environments, surfacing anomalies and routing them to human analysts for decision-making. Platforms like Torq are automating phishing investigations, device fingerprinting, and endpoint remediation using pre-programmed “playbooks,” freeing up SOC teams to focus on high-risk cases.
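
To make the routing idea concrete, here is a minimal sketch of alert triage in Python. It is not how Apex or Torq implement it: the `Alert` fields, the `Route` names, and every threshold are hypothetical values chosen for illustration. The point is only that low-risk alerts can be closed automatically, mid-risk alerts can be handed to an automated playbook, and anything high-risk or high-value goes to a human analyst.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO_CLOSE = "auto_close"
    PLAYBOOK = "playbook"
    HUMAN_REVIEW = "human_review"


@dataclass(frozen=True)
class Alert:
    alert_id: str
    risk_score: float  # hypothetical model output in [0, 1]
    amount: float      # transaction amount in the account currency


def triage(alert: Alert, low: float = 0.2, high: float = 0.7,
           amount_limit: float = 10_000.0) -> Route:
    """Route an alert. Thresholds are illustrative, not recommended values."""
    if not 0.0 <= alert.risk_score <= 1.0:
        raise ValueError("risk_score must be in [0, 1]")
    # High scores or large transfers always go to a human analyst.
    if alert.risk_score >= high or alert.amount >= amount_limit:
        return Route.HUMAN_REVIEW
    # Clearly benign alerts are closed without analyst time.
    if alert.risk_score < low:
        return Route.AUTO_CLOSE
    # Everything in between is handled by an automated playbook.
    return Route.PLAYBOOK
```

Checking the human-review condition first is a deliberate choice in this sketch: a large transfer with a low score still reaches an analyst rather than being closed automatically.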

Unlike generic AI models, which miss 35% of sophisticated fraud attempts, domain-specific agentic platforms such as Fulcrum Digital’s FD Ryze are purpose-built for the business logic, regulatory demands, and risk models of banking. They deploy multiple task-specific micro-agents to automate everything from real-time background checks to anomaly detection, ensuring regulatory compliance and early fraud alerts without disrupting customer experience.
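
As a rough illustration of the micro-agent pattern, the Python sketch below runs several small, single-purpose checks over one customer record and collects whatever they flag. It is a generic sketch and makes no claim about how FD Ryze is built: the agent names, the watchlist entry, the `transfers_last_hour` field, and the limit of five transfers are all assumptions made for the example.

```python
from typing import Any, Callable, Dict, List, NamedTuple


class Finding(NamedTuple):
    agent: str
    flagged: bool
    reason: str


Customer = Dict[str, Any]
Agent = Callable[[Customer], Finding]


def sanctions_agent(customer: Customer) -> Finding:
    # Hypothetical watchlist; a real check would call a screening service.
    watchlist = {"ACME SHELL LTD"}
    hit = str(customer.get("name", "")).upper() in watchlist
    return Finding("sanctions", hit, "name on watchlist" if hit else "clear")


def velocity_agent(customer: Customer) -> Finding:
    # Flags unusually many transfers in the last hour (limit is illustrative).
    count = int(customer.get("transfers_last_hour", 0))
    return Finding("velocity", count > 5, f"{count} transfers in the last hour")


def run_agents(customer: Customer, agents: List[Agent]) -> List[Finding]:
    """Run every micro-agent and return only the findings that flagged."""
    findings = (agent(customer) for agent in agents)
    return [finding for finding in findings if finding.flagged]
```

Because each agent is just a function from a customer record to a `Finding`, a new check can be added to the list without touching the others.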

When designed around sector-specific needs and governed with intention, agentic AI becomes more than a technical layer. It becomes a strategic pillar in how financial institutions manage risk, build resilience, and safeguard trust.

Governance & Regulation: Enabling AI at Scale

Fraudsters face no rules, no audits, and no regulators. Financial institutions do. And that’s exactly what makes AI harder, but also more valuable, on the defensive side of the arms race.

In finance, agentic AI can’t operate like a black box. Its decisions must be explainable to compliance teams, auditable by regulators, and defensible to customers and boards. That’s not just a legal requirement; it’s what makes AI usable in a high-trust environment.

Regulators are already moving to formalize these expectations. In the UK, the Financial Conduct Authority’s AI sandbox provides institutions space to test and tune agentic models under regulatory supervision. In the US, the SEC and DOJ have launched enforcement actions against “AI-washing,” penalizing companies that exaggerate AI capabilities or hide accountability behind automation.

Governance, in this context, isn’t a barrier. It is a framework for confidence. It gives financial institutions permission to scale agentic AI not by cutting corners, but by making its actions traceable, reversible, and aligned with institutional intent.
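
One way to picture “traceable and reversible” is an agent that can only act through a wrapper that records what it did, why, and how to undo it. The Python sketch below is a minimal illustration under that assumption; `AuditedAgent`, `AuditEntry`, and the account-freeze example are hypothetical names, not part of any product or regulation mentioned here.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, List


@dataclass
class AuditEntry:
    action: str
    reason: str
    timestamp: datetime
    undo: Callable[[], None]
    is_reversed: bool = False


@dataclass
class AuditedAgent:
    log: List[AuditEntry] = field(default_factory=list)

    def act(self, action: str, reason: str,
            do: Callable[[], None], undo: Callable[[], None]) -> int:
        """Perform an action and record it with its reason and undo step."""
        do()
        self.log.append(
            AuditEntry(action, reason, datetime.now(timezone.utc), undo)
        )
        return len(self.log) - 1  # entry id for later review or reversal

    def reverse(self, entry_id: int) -> None:
        """Undo a recorded action once; the log entry itself is kept."""
        entry = self.log[entry_id]
        if entry.is_reversed:
            raise ValueError("action already reversed")
        entry.undo()
        entry.is_reversed = True
```

A short usage example, with an in-memory set standing in for a real account system:

```python
frozen_accounts = set()
agent = AuditedAgent()
entry_id = agent.act(
    "freeze:acct-1", "risk score above threshold",
    do=lambda: frozen_accounts.add("acct-1"),
    undo=lambda: frozen_accounts.discard("acct-1"),
)
agent.reverse(entry_id)  # a reviewer overturns the freeze; the record remains
```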

If you’re exploring how to scale agentic AI without compromising control, FD Ryze was built with that exact mandate. Let’s talk.