Generative AI creates new content, such as text, images, code, or data, based on patterns learned from training data.

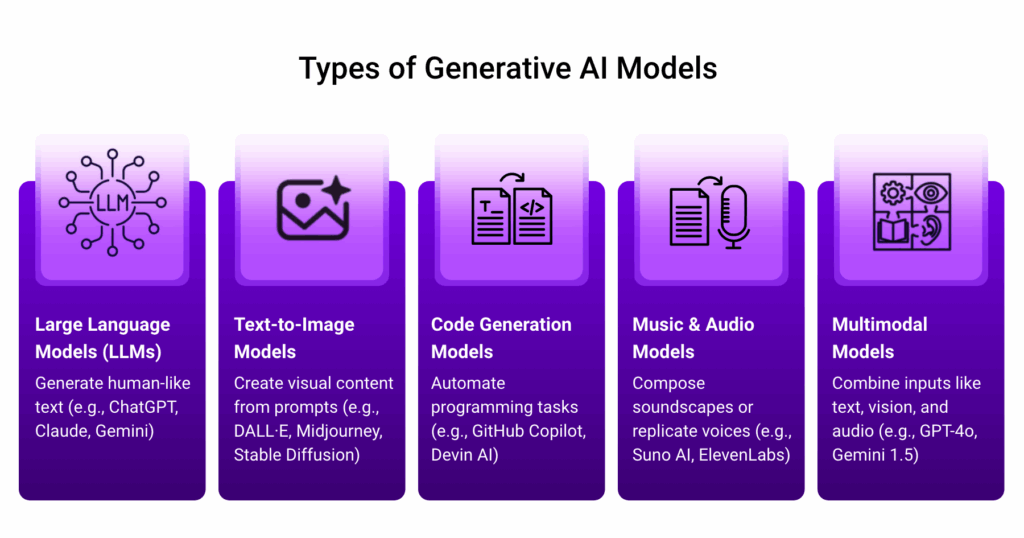

Generative AI refers to artificial intelligence models capable of creating new content, ideas, or outputs based on learned data patterns. Unlike traditional AI systems that classify or predict, generative models produce novel artifacts—text, images, audio, or even software code.

Detailed Definition & Explanation

At its core, Generative AI uses deep learning models such as transformers, diffusion models, and GANs (Generative Adversarial Networks) to learn patterns from large datasets and generate new, coherent outputs that mimic those patterns.

In the context of Agentic AI, generative models serve as cognitive engines for autonomous agents, helping them write code, generate summaries, simulate user behaviour, or design decision trees dynamically. These capabilities allow agents not just to act, but to create, adapt, and communicate with higher semantic precision.

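Generative models typically follow a pretraining and fine-tuning paradigm. In LLMs, for example, transformers are pretrained on massive text corpora to predict the next token in a sequence, then fine-tuned for specific tasks or behaviours. Other model families learn from data in analogous ways but with different objectives: diffusion models learn to reverse a gradual noising process, so they can create images by starting from noise and removing it step by step, while GANs train two networks, a generator and a discriminator, against each other to improve output quality.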
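To make next-token prediction concrete, here is a minimal sketch that swaps the transformer for a simple bigram count model. It is only an illustration of the objective (estimate which token is likely to come next, then generate by repeatedly picking one), not how production LLMs are built. The corpus and the function names are made up for this example.

```python
from collections import Counter, defaultdict

def train_bigram(corpus: str) -> dict[str, Counter]:
    """Count, for each token, which tokens follow it in the corpus."""
    tokens = corpus.split()
    counts: dict[str, Counter] = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts

def next_token_probs(counts: dict[str, Counter], token: str) -> dict[str, float]:
    """Turn raw follow-up counts into a probability distribution."""
    following = counts.get(token)
    if not following:
        return {}
    total = sum(following.values())
    return {tok: n / total for tok, n in following.items()}

def generate(counts: dict[str, Counter], start: str, max_tokens: int = 5) -> str:
    """Greedy decoding: repeatedly append the most likely next token."""
    output = [start]
    for _ in range(max_tokens):
        probs = next_token_probs(counts, output[-1])
        if not probs:
            break
        output.append(max(probs, key=probs.get))
    return " ".join(output)

# Illustrative toy corpus (an assumption, not real training data).
corpus = "the agent writes code . the agent writes summaries . the agent reads logs ."
model = train_bigram(corpus)
print(next_token_probs(model, "agent"))
print(generate(model, "the", max_tokens=2))
```

A real LLM replaces the count table with a neural network conditioned on the whole preceding context, and usually samples from the distribution rather than always taking the top token.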

In agentic systems, these models are:

- Embedded as tools (e.g., an LLM generating an email reply)

- Orchestrated by agents that select and feed context to them

- Prompt-engineered dynamically based on user or workflow goals

This allows agents to switch between reasoning, summarization, content creation, or even code execution on demand.

FD Ryze integrates generative models into its agent framework to support tasks such as autonomous document summarization, dynamic email generation, and contextual policy simulations, allowing agents to create content with enterprise-grade accuracy.
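The three roles listed earlier (a model embedded as a tool, an agent that selects and feeds context, and prompts built dynamically from the goal) can be sketched generically as follows. This is a hypothetical illustration, not the implementation of any particular product: `fake_llm`, `PROMPT_TEMPLATES`, and `run_agent` are invented names, and `fake_llm` stands in for a real model call.

```python
from typing import Callable

def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real generative model call."""
    return f"[generated from {len(prompt)} chars of prompt]"

# One prompt template per goal the agent knows how to pursue (assumed goals).
PROMPT_TEMPLATES = {
    "summarize": "Summarize the following for {audience}:\n{context}",
    "email_reply": "Draft a reply for {audience} to this email:\n{context}",
}

def run_agent(goal: str, context: str, audience: str,
              llm: Callable[[str], str] = fake_llm) -> dict:
    """Pick a template for the goal, fill in context, and call the model as a tool."""
    if goal not in PROMPT_TEMPLATES:
        raise ValueError(f"no prompt template for goal: {goal}")
    prompt = PROMPT_TEMPLATES[goal].format(audience=audience, context=context)
    return {"goal": goal, "prompt": prompt, "output": llm(prompt)}

result = run_agent("summarize", "Q3 revenue rose 4%.", "executives")
print(result["prompt"])
print(result["output"])
```

Passing the model in as a plain callable keeps the orchestration logic independent of any specific provider, so the same agent can be pointed at a different model without changing the selection and prompting code.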

Why It Matters

1. Enhances Agent Cognition

Generative models like LLMs and diffusion models allow agents to synthesize information, draft content, and simulate alternatives using transformer-based attention mechanisms and probabilistic generation. In an industry like finance, this enables agents to explain investment risk models to non-technical users. Similarly, in education, agents can simulate exam questions or learning journeys dynamically based on learner profiles.

2. Accelerates Product Development

By using prompt-tuned LLMs or code generation models, agents can instantly generate product descriptions, API documentation, or UX content. Few-shot learning enables agents to generate accurate drafts with minimal input. Within the ecommerce space, this streamlines product onboarding at scale. In insurance, agents can generate tailored onboarding scripts and claims flows without manual copywriting.
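As a rough sketch of few-shot prompting, the snippet below assembles a prompt from a couple of worked examples followed by the new item, leaving the final description blank for the model to complete. The products, the instruction text, and the `build_few_shot_prompt` helper are all assumptions made for illustration.

```python
def build_few_shot_prompt(instruction: str,
                          examples: list[tuple[str, str]],
                          new_product: str) -> str:
    """Lay out worked examples, then the new input with an empty answer slot."""
    lines = [instruction, ""]
    for product, description in examples:
        lines.append(f"Product: {product}")
        lines.append(f"Description: {description}")
        lines.append("")
    lines.append(f"Product: {new_product}")
    lines.append("Description:")
    return "\n".join(lines)

# Illustrative examples (made up for this sketch).
examples = [
    ("Steel water bottle, 750 ml", "Keeps drinks cold for 24 hours in a leak-proof steel body."),
    ("Canvas tote bag", "A roomy everyday tote made from heavyweight cotton canvas."),
]
prompt = build_few_shot_prompt(
    "Write a one-sentence product description in the same style as the examples.",
    examples,
    "Bamboo cutting board",
)
print(prompt)
```

The resulting string would be sent to whichever generative model the agent uses; the examples steer tone and length without any additional training.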

3. Unlocks Personalization at Scale

Agents embed user signals—behavioral data, segmentation, or session context—into dynamic prompts, allowing generative models to craft hyper-specific messages, offers, or interfaces. In the consumer products vertical, this enables 1:1 promotional messaging across channels. In higher ed, generative tutors can create tailored study guides based on past performance.

4. Facilitates Automation in Complex Tasks

Generative models replace rule-based logic in repetitive creative or semi-cognitive tasks. Agents generate draft reports, FAQs, or even backend code using models such as GPT-4 or StarCoder, or coding agents such as Devin. For instance, in legal services, agents summarize case files or generate compliance responses, while in IT, agents can auto-write test cases or scripts based on Jira tickets.

5. Transforms Enterprise Knowledge Management

Generative AI models parse unstructured data—PDFs, transcripts, call logs—and generate structured outputs such as summaries, checklists, or decision trees using multi-pass generation and retrieval-augmented generation (RAG). In BFSI, this allows agents to summarize customer interactions into CRM entries. In manufacturing, agents can convert maintenance logs into predictive alerts or SOP updates.
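The retrieval step of RAG can be sketched in a few lines. Production systems typically rank documents with vector embeddings; this example deliberately swaps in plain word overlap so it stays self-contained. The documents, the query, and the helper names are illustrative assumptions.

```python
import re

def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Rank documents by how many words they share with the query."""
    query_tokens = tokenize(query)
    ranked = sorted(documents,
                    key=lambda doc: len(query_tokens & tokenize(doc)),
                    reverse=True)
    return ranked[:k]

def build_rag_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Put the retrieved passages in front of the question for the model."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (f"Answer using only the context below.\n\n"
            f"Context:\n{context}\n\nQuestion: {query}")

# Made-up log entries standing in for unstructured enterprise data.
logs = [
    "Pump 7 bearing replaced after vibration alarm.",
    "Customer called about a billing error on invoice 1042.",
    "Conveyor belt tension adjusted during weekly check.",
]
print(build_rag_prompt("Why did pump 7 trigger a vibration alarm?", logs, k=1))
```

Grounding the prompt in retrieved passages is what lets the model produce summaries or CRM entries tied to the actual records rather than to its general training data.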

Real-World Examples

1. OpenAI – ChatGPT (GPT-4)

ChatGPT, powered by OpenAI’s GPT-4, is one of the most widely deployed generative AI models globally. It enables conversational agents, code assistants, creative writing tools, and enterprise copilots through its fine-tuned natural language generation and reasoning capabilities. GPT-4 is used across industries for drafting content, automating support, summarizing documents, and generating business insights.

2. Google DeepMind – Gemini 1.5

Gemini combines the strengths of large language models with multimodal reasoning, allowing it to process and generate text, images, and structured data within a single architecture. Gemini 1.5 is integrated across Google Workspace, powering intelligent document generation, slide creation, and email writing, all with contextual awareness and long-context memory capabilities.

3. Anthropic – Claude 3

Claude is designed with constitutional AI and enterprise safety at its core. Claude 3 models are used for internal knowledge base generation, contract analysis, and collaborative writing, particularly in legal, healthcare, and education sectors. With high performance in reasoning, summarization, and code generation, Claude supports agent-led workflows in sensitive environments.

What Lies Ahead

1. Autonomous Design & UX Agents

Generative AI will empower agents that prototype UIs, write design briefs, and generate UX flows on the fly. These agents will combine large language models with structured prompt chains and design libraries (e.g., Figma APIs, design systems) to generate interface components based on user intent or system requirements.

2. Synthetic Data Generation

Privacy-preserving synthetic data will become foundational for training and testing agent workflows across industries. Using generative adversarial networks (GANs) or diffusion models, agents will simulate realistic datasets that mimic edge cases, user behavior, or regulated environments without exposing sensitive records.
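To show the basic idea of synthetic data (learn a distribution from real records, then sample new ones), here is a deliberately simple sketch. It fits a single Gaussian rather than training a GAN or diffusion model, which capture far richer structure, and it does not by itself provide any privacy guarantee. The values and function names are invented for illustration.

```python
import random
import statistics

def fit_gaussian(values: list[float]) -> tuple[float, float]:
    """Estimate the mean and sample standard deviation of the real data."""
    return statistics.mean(values), statistics.stdev(values)

def sample_synthetic(values: list[float], n: int, seed: int = 0) -> list[float]:
    """Draw n new values from the fitted distribution (seeded for repeatability)."""
    mean, stdev = fit_gaussian(values)
    rng = random.Random(seed)
    return [rng.gauss(mean, stdev) for _ in range(n)]

# Made-up numbers standing in for a sensitive column such as claim amounts.
real_claim_amounts = [42.0, 55.5, 61.0, 48.2, 70.3]
synthetic = sample_synthetic(real_claim_amounts, n=1000)
print(len(synthetic), round(statistics.mean(synthetic), 1))
```

The synthetic values follow the overall shape of the original column without being copies of individual records, which is the property generative approaches aim to provide at much higher fidelity.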

3. On-Device GenAI for Edge Agents

Compact LLMs and multimodal models will power agents that operate offline or on-device, especially in retail, logistics, and field services. Small language models (e.g., TinyLlama, Phi-3), along with distilled or quantized versions of larger LLMs, will enable local inference, allowing edge agents to generate, interpret, and respond without needing cloud calls.
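Quantization is one of the techniques that makes on-device inference feasible. The sketch below shows symmetric 8-bit quantization of a short weight list: each float is mapped to an integer in [-127, 127] using one shared scale, then mapped back with a small rounding error. Real toolchains work per tensor or per channel on large arrays; the weights and function names here are illustrative assumptions.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale

def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]

weights = [0.5, -1.27, 0.0, 1.0]  # made-up example weights
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(quantized, scale, max_error)
```

Storing one byte per weight instead of four cuts memory roughly fourfold, at the cost of a per-weight error of at most about half the scale.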

4. AI-First Business Interfaces

Generative agents will redefine how users interact with systems—from conversational BI to natural-language ERP navigation. By integrating RAG pipelines and domain-specific LLMs with enterprise backends, agents will convert unstructured queries into SQL, API calls, or dashboards, streamlining user interaction through intent understanding.
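A minimal sketch of the query-to-SQL flow might look like the following. The model call is replaced by a hypothetical `fake_text_to_sql` function that returns a fixed query, and the safety check is intentionally naive (it only accepts a single SELECT statement); a real deployment would need proper permissions and validation. The table and data are made up.

```python
import sqlite3

def fake_text_to_sql(question: str) -> str:
    """Hypothetical stand-in for an LLM that translates a question into SQL."""
    return ("SELECT region, SUM(amount) AS total FROM sales "
            "GROUP BY region ORDER BY total DESC")

def is_single_select(sql: str) -> bool:
    """Naive guard: allow only one statement, and only if it starts with SELECT."""
    stripped = sql.strip().rstrip(";")
    return stripped.lower().startswith("select") and ";" not in stripped

def answer(question: str, conn: sqlite3.Connection, to_sql=fake_text_to_sql) -> list[tuple]:
    """Translate the question, check the generated SQL, then run it."""
    sql = to_sql(question)
    if not is_single_select(sql):
        raise ValueError(f"refusing to run generated SQL: {sql}")
    return conn.execute(sql).fetchall()

# In-memory database with illustrative data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?)",
                 [("EMEA", 120.0), ("APAC", 80.0), ("EMEA", 30.0)])
rows = answer("Which region sold the most?", conn)
print(rows)
```

Keeping the validation step between generation and execution is the key design choice: the model proposes a query, but the agent decides whether it is allowed to run.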

5. Regulation and Model Guardrails

As GenAI systems scale, agents will need to operate within stricter governance layers, with policy insight agents flagging hallucinations, IP risks, or toxic outputs. These governance layers will combine prompt moderation, model output validation, and fine-tuned classifier agents to ensure generative content aligns with legal, ethical, and brand standards.
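Model output validation can be sketched as a set of checks run on generated text before it is released. The example below combines a blocked-term list with a crude hallucination check that flags any number not present in the source material. Both rules, the term list, and all names are assumptions for illustration; real governance layers would typically add trained classifiers and human review.

```python
import re
from dataclasses import dataclass, field

# Hypothetical policy list of phrases the brand or legal team disallows.
BLOCKED_TERMS = ("guaranteed returns", "risk-free")

NUMBER_PATTERN = re.compile(r"\d+(?:\.\d+)?")

@dataclass
class Verdict:
    allowed: bool
    reasons: list[str] = field(default_factory=list)

def check_output(generated: str, source: str) -> Verdict:
    """Flag blocked phrases and any numbers that do not appear in the source."""
    reasons = []
    lowered = generated.lower()
    for term in BLOCKED_TERMS:
        if term in lowered:
            reasons.append(f"blocked term: {term}")
    source_numbers = set(NUMBER_PATTERN.findall(source))
    for number in NUMBER_PATTERN.findall(generated):
        if number not in source_numbers:
            reasons.append(f"number not found in source: {number}")
    return Verdict(allowed=not reasons, reasons=reasons)

source = "Fund return: 7.5% in 2023."
print(check_output("The fund returned 7.5% last year.", source))
print(check_output("Risk-free product with 12% yield.", source))
```

Returning the reasons alongside the decision lets a downstream agent or reviewer see why a draft was held back instead of only receiving a pass or fail.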

Related Terms

- Large Language Models (LLMs)

- Prompt Engineering

- Agent Tools

- Model Orchestration

- Autonomous Agents

- Generative Code

- Diffusion Models

- Synthetic Data

- Responsible AI

- Zero-Shot Learning