Cloud and infrastructure power scalable, agent-ready compute, storage, and orchestration layers

In the context of Agentic AI, cloud and infrastructure refer to the computational backbone (scalable storage, compute power, networking, and orchestration layers) that enables autonomous agents to operate across distributed enterprise environments. These systems provide the elasticity, responsiveness, and integration depth that agents need to act independently and collaborate in real time. Storage plays a particularly critical role, because it holds agent memory, workflow state, and decision context. The underlying cloud architecture ties these capabilities together so that they can reliably support real-time, distributed intelligence.
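Because storage carries agent memory, workflow state, and decision context, a common pattern is to checkpoint that state so an agent can resume after a restart or move to another node. The following is a minimal sketch under stated assumptions: `AgentState` and `CheckpointStore` are hypothetical names, and a local directory stands in for the object store or database a production deployment would more likely use.

```python
from __future__ import annotations

import json
import tempfile
from dataclasses import asdict, dataclass, field
from pathlib import Path


@dataclass
class AgentState:
    agent_id: str
    memory: list[str] = field(default_factory=list)  # facts the agent has accumulated
    workflow_step: int = 0  # position in the agent's workflow
    decision_context: dict[str, str] = field(default_factory=dict)  # inputs behind the last decision


class CheckpointStore:
    """Hypothetical file-backed checkpoint store: one JSON file per agent."""

    def __init__(self, root: Path) -> None:
        self.root = root
        self.root.mkdir(parents=True, exist_ok=True)

    def save(self, state: AgentState) -> None:
        path = self.root / f"{state.agent_id}.json"
        tmp = path.with_suffix(".tmp")
        tmp.write_text(json.dumps(asdict(state)))
        tmp.replace(path)  # rename over the old checkpoint so readers never see a partial file

    def load(self, agent_id: str) -> AgentState | None:
        path = self.root / f"{agent_id}.json"
        if not path.exists():
            return None  # no checkpoint yet: the agent starts fresh
        return AgentState(**json.loads(path.read_text()))


# Usage: save mid-workflow, then restore as if after a restart.
with tempfile.TemporaryDirectory() as tmpdir:
    store = CheckpointStore(Path(tmpdir))
    store.save(AgentState("agent-7", memory=["invoice 42 approved"], workflow_step=3))
    restored = store.load("agent-7")
    missing = store.load("agent-8")
```

Writing to a temporary file and then renaming keeps a crash mid-save from leaving a truncated checkpoint; `Path.replace` swaps the file in a single rename when both paths are on the same filesystem.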

Detailed Definition & Explanation

Cloud and infrastructure form the foundation on which Agentic AI systems operate, which raises a practical question for many organizations: how does cloud infrastructure support scalable, intelligent agent deployment? The scope spans cloud computing platforms such as AWS, Azure, and GCP; networking and API gateways; GPU-accelerated clusters; storage orchestration; and containerized microservices (e.g., orchestrated with Kubernetes). Together with the virtualized resources beneath them, these systems provide the runtime environment for deploying, coordinating, and managing autonomous agents across complex, distributed enterprises.

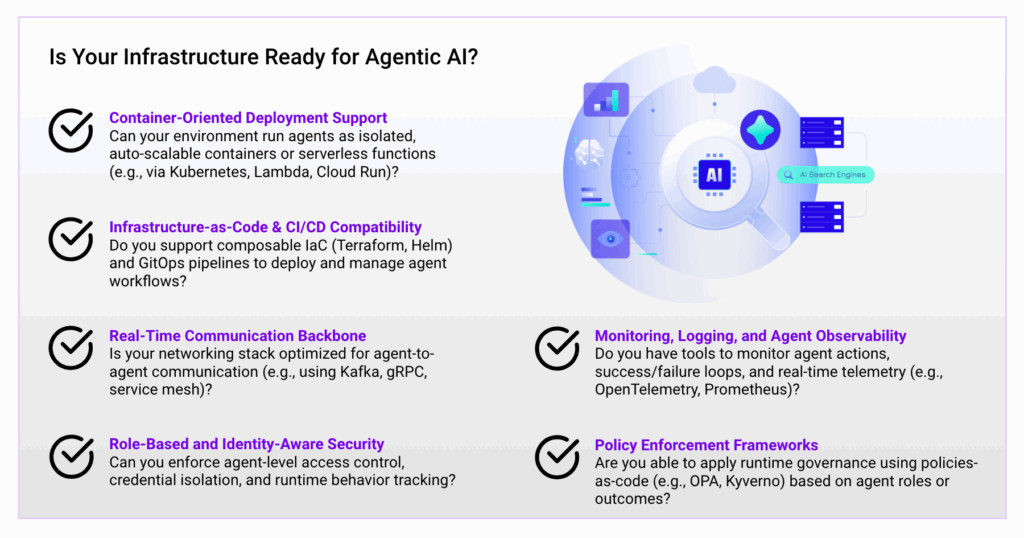

In Agentic AI, infrastructure must be more than elastic and scalable; it must be agent-aware. That means:

- Real-time provisioning and isolation for micro-agents

- Low-latency communication across agent clusters

- Role- and context-based access control that enforces network security across the cloud environment

- Telemetry streams for traceability and optimization
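The access-control requirement can be illustrated with a deny-by-default check that combines an agent's role with a context attribute. This is a minimal sketch: the role names, the `zone` attribute, and the rule that writes require the internal network zone are all hypothetical policy choices, not any cloud provider's IAM model.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class AgentIdentity:
    agent_id: str
    role: str  # e.g. "billing-reader"
    zone: str  # context attribute: network zone the request comes from


# Hypothetical policy: role -> set of (action, resource) pairs it may perform.
ROLE_PERMISSIONS: dict[str, set[tuple[str, str]]] = {
    "billing-reader": {("read", "invoices")},
    "billing-writer": {("read", "invoices"), ("write", "invoices")},
}


def context_allows(agent: AgentIdentity, action: str) -> bool:
    # Hypothetical context rule: writes are accepted only from the internal zone.
    if action == "write":
        return agent.zone == "internal"
    return True


def is_allowed(agent: AgentIdentity, action: str, resource: str) -> bool:
    """Deny by default: both the role check and the context check must pass."""
    role_ok = (action, resource) in ROLE_PERMISSIONS.get(agent.role, set())
    return role_ok and context_allows(agent, action)


writer_outside = AgentIdentity("agent-2", "billing-writer", zone="external")
print(is_allowed(writer_outside, "read", "invoices"))   # True
print(is_allowed(writer_outside, "write", "invoices"))  # False: right role, wrong context
```

Keeping the role table and the context rule separate means either can change (for example, adding a time-of-day rule) without touching the other.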

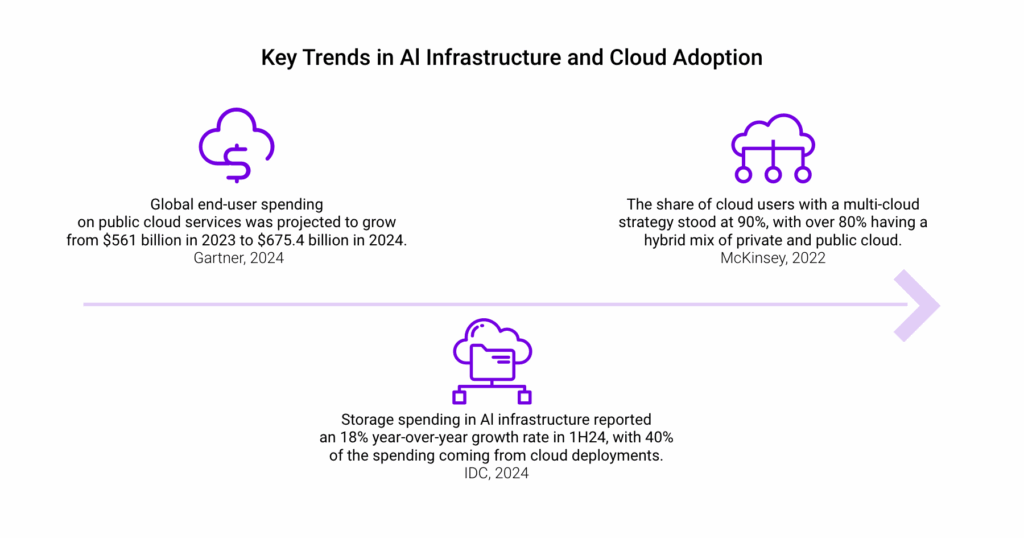

As enterprise adoption of AI scales, modern cloud infrastructure has become more important. According to IDC, spending on storage for AI infrastructure grew 18% year over year in the first half of 2024 (1H24), with 40% of that spending coming from cloud deployments. McKinsey found that 90% of cloud users had a multi-cloud strategy, and over 80% used a hybrid mix of private and public cloud. Meanwhile, Gartner projected that global end-user spending on public cloud services would grow from $561 billion in 2023 to $675.4 billion in 2024.
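Gartner's two figures imply the projected year-over-year growth rate directly:

```latex
\text{growth} = \frac{675.4 - 561}{561} = \frac{114.4}{561} \approx 0.204 \approx 20.4\%
```

In other words, the projection amounts to public cloud spending growing by roughly a fifth in a single year.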

These infrastructure shifts signal a broader transformation where the cloud isn’t just a platform for hosting applications, but a mission-critical enabler for autonomy at scale.

Why It Matters

Enables Scalable Agent Deployment: Enterprises may deploy hundreds or thousands of autonomous agents, each requiring an isolated runtime, its own task context, and API access. Scalable infrastructure in cloud-native environments meets these demands.
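One way to keep a large agent fleet within finite capacity is bounded concurrency: launch every agent, but let only a fixed number hold a runtime slot at a time. This sketch assumes Python's `asyncio`; `run_agent` and `deploy_fleet` are hypothetical, the semaphore stands in for a limited pool of containers or API quota, and `asyncio.sleep` is a placeholder for real agent work.

```python
from __future__ import annotations

import asyncio

active = 0  # agents currently holding a runtime slot
peak = 0    # highest concurrency observed, to show the cap holds


async def run_agent(agent_id: int, limiter: asyncio.Semaphore) -> str:
    global active, peak
    # Only `max_concurrent` agents can be inside this block at once.
    async with limiter:
        active += 1
        peak = max(peak, active)
        await asyncio.sleep(0.01)  # placeholder for the agent's real work
        active -= 1
        return f"agent-{agent_id}: done"


async def deploy_fleet(n_agents: int, max_concurrent: int) -> list[str]:
    limiter = asyncio.Semaphore(max_concurrent)
    # gather returns results in the same order the agents were launched
    return await asyncio.gather(*(run_agent(i, limiter) for i in range(n_agents)))


results = asyncio.run(deploy_fleet(n_agents=1000, max_concurrent=50))
print(len(results), peak)
```

The cap protects shared resources without the caller having to batch agents by hand; raising `max_concurrent` is the knob that tracks added capacity.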

Supports Real-Time Multi-Agent Workflows: Infrastructure with low latency and high IOPS allows agents to interact, hand off tasks, and reason in parallel across enterprise systems.
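Task handoff between agents can be modeled as producers and consumers joined by a queue, so the downstream agent starts on each item as soon as it arrives. In this minimal sketch, the two agents, their string-processing work, and the `None` end-of-stream sentinel are hypothetical; an in-process `asyncio.Queue` stands in for a message broker.

```python
from __future__ import annotations

import asyncio


async def intake_agent(requests: list[str], handoff: asyncio.Queue) -> None:
    # Upstream agent: normalizes each request and hands it off immediately.
    for request in requests:
        await handoff.put(request.strip().lower())
    await handoff.put(None)  # sentinel: tells the next agent there is no more work


async def resolver_agent(handoff: asyncio.Queue, results: list[str]) -> None:
    # Downstream agent: processes each task as soon as it is handed off.
    while (task := await handoff.get()) is not None:
        results.append(f"resolved:{task}")


async def pipeline(requests: list[str]) -> list[str]:
    handoff: asyncio.Queue = asyncio.Queue(maxsize=10)  # bounded: applies backpressure
    results: list[str] = []
    # Both agents run concurrently; gather waits for both to finish.
    await asyncio.gather(intake_agent(requests, handoff), resolver_agent(handoff, results))
    return results


out = asyncio.run(pipeline(["  Reset Password ", "Refund ORDER-9"]))
print(out)  # ['resolved:reset password', 'resolved:refund order-9']
```

The bounded queue makes the intake agent wait when the resolver falls behind, which is the same backpressure a broker with a capped queue would apply.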

Powers AI Compute Requirements: Agents often require access to LLMs, vector databases, and reinforcement learning loops. These workloads run on cloud infrastructure, and model training and inference in particular depend on robust GPU- or TPU-backed systems.
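Vector databases give agents semantic recall by ranking stored embeddings by similarity to a query. This sketch does that with brute-force cosine similarity over a dictionary; the three-dimensional "embeddings" are made-up toy values, and production vector databases typically use approximate nearest-neighbor indexes rather than a full scan.

```python
from __future__ import annotations

import math


def cosine(a: list[float], b: list[float]) -> float:
    # Cosine similarity: dot product divided by the product of vector lengths.
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def recall(query: list[float], memory: dict[str, list[float]], k: int = 2) -> list[str]:
    # Brute-force scan: score every stored item, keep the k most similar.
    ranked = sorted(memory, key=lambda key: cosine(query, memory[key]), reverse=True)
    return ranked[:k]


# Toy 3-dimensional "embeddings"; real ones come from an embedding model.
memory = {
    "refund policy": [0.9, 0.1, 0.0],
    "shipping times": [0.1, 0.9, 0.0],
    "refund exceptions": [0.8, 0.2, 0.1],
}
print(recall([1.0, 0.0, 0.0], memory))  # ['refund policy', 'refund exceptions']
```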

Maintains Governance and Compliance: Enterprise infrastructure must enforce agent-level security policies and identity management, maintain audit trails, and provide failover logic to meet regulatory standards.
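Audit trails and failover logic often meet at the same call site: every attempt is recorded, and a failed endpoint hands the request to the next one. This is a hypothetical sketch; the endpoint names, the use of `ConnectionError` as the failure signal, and the audit record fields are assumptions rather than any specific platform's schema.

```python
from __future__ import annotations

import time
from typing import Callable


def call_with_failover(
    agent_id: str,
    action: str,
    endpoints: list[tuple[str, Callable[[str], str]]],
    audit: list[dict],
) -> str:
    """Try each endpoint in order, recording every attempt in the audit trail."""
    for name, call in endpoints:
        entry = {"ts": time.time(), "agent": agent_id, "action": action, "endpoint": name}
        try:
            result = call(action)
        except ConnectionError as exc:
            audit.append({**entry, "outcome": f"failed: {exc}"})
            continue  # fail over to the next endpoint
        audit.append({**entry, "outcome": "ok"})
        return result
    raise RuntimeError(f"all endpoints failed for {action!r}")


def primary(action: str) -> str:
    raise ConnectionError("region unavailable")  # simulated outage


def secondary(action: str) -> str:
    return f"{action} executed"


audit_log: list[dict] = []
result = call_with_failover(
    "agent-3", "rotate-keys", [("us-east", primary), ("us-west", secondary)], audit_log
)
print(result)  # rotate-keys executed
print([(e["endpoint"], e["outcome"]) for e in audit_log])
# [('us-east', 'failed: region unavailable'), ('us-west', 'ok')]
```

Recording failed attempts as well as the successful one is what lets an auditor reconstruct why an action ran in a secondary region.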

Forms the Bedrock for Agentic Ecosystems: The role of cloud infrastructure is foundational. It enables agentic systems to be deployed, scaled, and trusted in production environments.

Real-World Examples

Anthropic Claude + Bedrock (AWS)

Anthropic’s Claude models are available through Amazon Bedrock, which runs on secure, enterprise-grade AWS infrastructure and shows how cloud infrastructure services for enterprises can support LLM-based agents in real time. Agentic use of Claude in this setting depends on high-availability cloud endpoints, policy enforcement layers, and careful latency optimization.

FD Ryze

FD Ryze runs on a hybrid cloud infrastructure that supports real-time agent orchestration and policy-driven deployment, demonstrating how cloud infrastructure solutions can enable scalable, cross-functional agent ecosystems.

Google Vertex AI + Agent Builder

Vertex AI integrates with Google’s infrastructure to power LLM orchestration and agentic pipelines. With integrated MLOps, feature stores, and container-based scaling, Vertex enables organizations to run goal-driven agents reliably in production.

What Lies Ahead

Infrastructure-Native Agent Deployment Models

Agentic AI will move toward infrastructure-native deployment, where agents are treated like services and run on modular cloud infrastructure components such as containers, serverless functions, or sidecars with auto-scaling and isolation.
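Auto-scaling agents that run as services can follow the rule that the Kubernetes Horizontal Pod Autoscaler documents: desired replicas = ceil(current replicas × current metric ÷ target metric), with no change while that ratio stays within a tolerance (0.1 by default). The sketch reimplements the rule in plain Python for illustration only; the metric (for example, in-flight tasks per agent replica) and the min/max bounds are assumptions.

```python
import math


def desired_replicas(
    current: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 100,
    tolerance: float = 0.1,
) -> int:
    """HPA-style rule: ceil(current * current_metric / target_metric), clamped to bounds."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current  # close enough to target: skip scaling to avoid flapping
    desired = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_metric=30, target_metric=10))     # 12: scale out
print(desired_replicas(10, current_metric=2, target_metric=10))     # 2: scale in
print(desired_replicas(10, current_metric=10.5, target_metric=10))  # 10: within tolerance
```

Rounding up rather than to the nearest integer errs toward slightly more capacity, which suits latency-sensitive agents.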

Autonomous Infrastructure Management by Agents

Agents will begin to provision, configure, build, and optimize cloud infrastructure autonomously, making the infrastructure itself partially self-healing and self-scaling.
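Self-healing infrastructure is usually built on a reconcile loop: compare desired state with observed state and emit the actions that close the gap, which is the pattern Kubernetes controllers follow. In this hypothetical sketch, `ServiceState` and the action strings are illustrative; an infrastructure agent would run `reconcile` repeatedly and pass the actions to a real provisioning API, which is not shown.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceState:
    replicas: int
    version: str


def reconcile(desired: dict[str, ServiceState], observed: dict[str, ServiceState]) -> list[str]:
    """Return the actions that would move the observed state toward the desired state."""
    actions: list[str] = []
    for name in sorted(desired):
        want = desired[name]
        have = observed.get(name)
        if have is None:
            actions.append(f"create {name} v{want.version} x{want.replicas}")
            continue
        if have.version != want.version:
            actions.append(f"roll {name} {have.version}->{want.version}")
        if have.replicas != want.replicas:
            actions.append(f"scale {name} {have.replicas}->{want.replicas}")
    for name in sorted(observed.keys() - desired.keys()):
        actions.append(f"delete {name}")  # running but no longer declared
    return actions


desired = {"planner": ServiceState(3, "1.4"), "retriever": ServiceState(2, "2.0")}
# Observed: two planner replicas crashed, retriever never deployed, an old agent lingers.
observed = {"planner": ServiceState(1, "1.4"), "legacy-crawler": ServiceState(1, "0.9")}
for action in reconcile(desired, observed):
    print(action)
```

Because the loop only compares states, it handles crashes, drift, and new declarations the same way, and it returns no actions once the system has converged.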

AI-Aware Networking and Data Routing

Agentic systems will demand intelligent traffic routing, low-latency mesh communication, and priority-based task queuing across hybrid environments.
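Priority-based task queuing can be sketched with a binary heap. In this minimal example, lower numbers mean higher priority, and a monotonically increasing counter keeps first-in, first-out order among tasks of equal priority; the task names and priority levels are hypothetical.

```python
from __future__ import annotations

import heapq
import itertools


class PriorityTaskQueue:
    """Min-heap of (priority, sequence, task): lowest priority number pops first."""

    def __init__(self) -> None:
        self._heap: list[tuple[int, int, str]] = []
        self._seq = itertools.count()  # tie-breaker preserving insertion order

    def push(self, task: str, priority: int) -> None:
        heapq.heappush(self._heap, (priority, next(self._seq), task))

    def pop(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


q = PriorityTaskQueue()
q.push("nightly-report", priority=5)
q.push("fraud-alert", priority=0)
q.push("user-chat", priority=1)
q.push("fraud-alert-2", priority=0)
order = [q.pop() for _ in range(len(q))]
print(order)  # ['fraud-alert', 'fraud-alert-2', 'user-chat', 'nightly-report']
```

The sequence number also keeps the heap from ever comparing task payloads directly, which matters once tasks are objects without a defined ordering.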

Agent-Aware Policy and Security Layers

Security frameworks will evolve to recognize agent identity, roles, and behavior patterns, and to enforce real-time access control or escalation policies.
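Behavior-aware policies can start with something as simple as a sliding-window budget: if an agent performs more sensitive actions within a window than allowed, the policy escalates instead of allowing. This sketch uses hypothetical thresholds and takes timestamps as arguments so it is deterministic; a live system would read a monotonic clock such as `time.monotonic()`.

```python
from __future__ import annotations

from collections import deque


class BehaviorMonitor:
    """Escalate when an agent exceeds `max_actions` within `window_seconds`."""

    def __init__(self, max_actions: int, window_seconds: float) -> None:
        self.max_actions = max_actions
        self.window = window_seconds
        self._events: dict[str, deque[float]] = {}  # per-agent action timestamps

    def record(self, agent_id: str, timestamp: float) -> str:
        events = self._events.setdefault(agent_id, deque())
        # Drop actions that have slid out of the window.
        while events and timestamp - events[0] >= self.window:
            events.popleft()
        events.append(timestamp)
        return "escalate" if len(events) > self.max_actions else "allow"


monitor = BehaviorMonitor(max_actions=3, window_seconds=60)
decisions = [monitor.record("agent-9", t) for t in (0, 10, 20, 30, 95)]
print(decisions)  # ['allow', 'allow', 'allow', 'escalate', 'allow']
```

Tracking each agent separately means one noisy agent is escalated without throttling the rest of the fleet.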

Composable Infrastructure-as-Code for Agentic Workflows

Just like microservices, agentic workflows will be defined via modular infrastructure-as-code blueprints that can be versioned, tested, and reused.
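A versioned, testable blueprint can be as small as a typed object that validates itself and renders a deployment manifest. This sketch assumes Kubernetes as the target and renders a standard `apps/v1` Deployment as a Python dictionary; `AgentBlueprint`, its defaults, the image-tag rule, and the `blueprint-version` label are hypothetical conventions.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class AgentBlueprint:
    name: str
    image: str  # container image for the agent runtime
    version: str  # blueprint version, stamped on resources as a label
    replicas: int = 1
    cpu: str = "500m"
    memory: str = "512Mi"

    def validate(self) -> None:
        # Hypothetical guardrails a blueprint test suite might enforce.
        if self.replicas < 1:
            raise ValueError("replicas must be at least 1")
        last_segment = self.image.rsplit("/", 1)[-1]
        if ":" not in last_segment or last_segment.endswith(":latest"):
            raise ValueError("image must be pinned to an explicit, non-latest tag")

    def to_deployment(self) -> dict:
        """Render a Kubernetes apps/v1 Deployment manifest as a plain dict."""
        self.validate()
        labels = {"app": self.name, "blueprint-version": self.version}
        return {
            "apiVersion": "apps/v1",
            "kind": "Deployment",
            "metadata": {"name": self.name, "labels": labels},
            "spec": {
                "replicas": self.replicas,
                "selector": {"matchLabels": {"app": self.name}},
                "template": {
                    "metadata": {"labels": labels},
                    "spec": {
                        "containers": [
                            {
                                "name": self.name,
                                "image": self.image,
                                "resources": {"limits": {"cpu": self.cpu, "memory": self.memory}},
                            }
                        ]
                    },
                },
            },
        }


manifest = AgentBlueprint(
    name="triage-agent",
    image="registry.example.com/agents/triage:1.2.0",
    version="1.2.0",
    replicas=3,
).to_deployment()
print(manifest["spec"]["replicas"], manifest["metadata"]["labels"])
```

Because the blueprint is plain data, it can be versioned in source control and unit-tested before anything reaches a cluster; serializing the dictionary to YAML or JSON for `kubectl apply` is left out.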

Related Terms

- Agentic AI

- AI Infrastructure

- Microservices

- Multi-Agent Systems

- Hybrid Cloud

- Kubernetes

- AIOps

- Edge AI

- Container Orchestration

- Data Pipelines