Artificial intelligence model assessment is essential for validating that AI models are ethical and robust.

Artificial intelligence model assessment is the process of systematically evaluating AI and machine learning models to ensure their performance, accuracy, fairness, and regulatory compliance. This includes technical metrics, ethical alignment, and domain-specific considerations. Whether for internal audits or external certification, a robust AI model assessment helps enterprises build trustworthy, reliable, and scalable intelligent systems.

Detailed Definition & Explanation

As AI systems take on increasingly complex, autonomous tasks, AI model assessment becomes a mission-critical function. It refers to the end-to-end process of evaluating a model's functionality, performance under stress, explainability, and compliance with ethical and regulatory standards. This process includes both AI model evaluation (measuring accuracy, bias, and generalizability) and AI model validation (ensuring fitness for intended use).

Modern AI/ML assessment services go beyond headline accuracy figures to test AI model robustness, interpretability, and real-world behavior. Assessments typically include:

- Performance metrics (precision, recall, F1, etc.)

- Bias and fairness testing

- Stress and edge-case testing

- Explainability/interpretability audits

- Ethical AI assessment frameworks
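As a minimal illustration of the first item, precision, recall, and F1 for a binary classifier can be computed directly from counts of true positives, false positives, and false negatives. The sketch assumes labels are encoded as 0/1 with 1 as the positive class; the function name `classification_metrics` and the sample data are hypothetical. Libraries such as scikit-learn offer equivalent, more complete functions.

```python
from collections.abc import Sequence


def classification_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> dict[str, float]:
    """Precision, recall, and F1 for binary labels, treating 1 as the positive class."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # correctly flagged positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # missed positives
    # Define each ratio as 0.0 when its denominator is zero instead of raising
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    y_true = [1, 0, 1, 1, 0, 0, 1, 0]  # hypothetical ground truth
    y_pred = [1, 0, 0, 1, 1, 0, 1, 0]  # hypothetical model output
    print(classification_metrics(y_true, y_pred))
    # {'precision': 0.75, 'recall': 0.75, 'f1': 0.75}
```

Precision answers "how many flagged cases were real?", recall answers "how many real cases were flagged?", and F1 balances the two, which is why assessments usually report all three rather than accuracy alone.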

This is particularly important for Generative AI model assessment services, where outputs can differ from one run to the next and errors can have significant real-world impact. Increasingly, enterprises rely on cloud-based AI model assessment services to evaluate models across environments and use cases.
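Because generative outputs vary between runs, one simple (and deliberately limited) check is to send the same prompt several times and measure how often the most common answer recurs. The sketch below assumes a hypothetical `generate(prompt)` callable standing in for a real model call, and uses exact matching after trimming and lowercasing, which is only meaningful for short, factual answers; free-form text usually needs a semantic comparison instead.

```python
import random
from collections import Counter
from collections.abc import Callable


def consistency_rate(generate: Callable[[str], str], prompt: str, n_samples: int = 10) -> float:
    """Share of n_samples outputs that equal the most common normalized output."""
    if n_samples < 1:
        raise ValueError("n_samples must be at least 1")
    # Normalize lightly so whitespace/case differences are not counted as disagreement
    outputs = [generate(prompt).strip().lower() for _ in range(n_samples)]
    _, top_count = Counter(outputs).most_common(1)[0]
    return top_count / n_samples


if __name__ == "__main__":
    rng = random.Random(0)

    def toy_generate(prompt: str) -> str:
        # Hypothetical stand-in for a real, non-deterministic model call
        return rng.choice(["Paris", "Paris", "Paris", "Lyon"])

    print(consistency_rate(toy_generate, "What is the capital of France?", n_samples=20))
```

A rate near 1.0 suggests stable answers for that prompt; a low rate flags prompts worth reviewing by hand.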

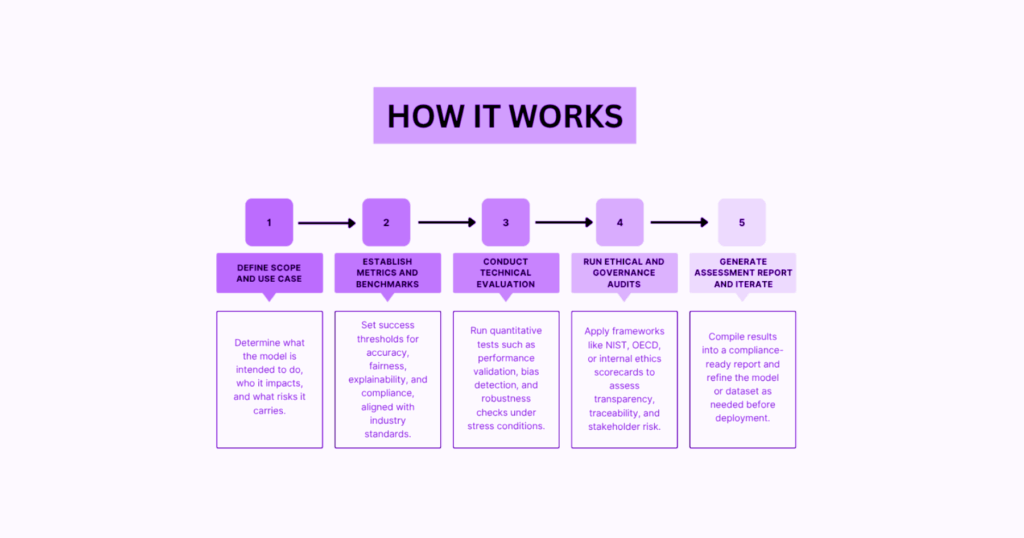

How AI Model Assessment Works

The AI model assessment process typically unfolds across five key stages, each of which helps organizations systematically evaluate technical performance, ethical risks, and regulatory alignment.

Why It Matters

1. Supports Compliance in Regulated Sectors

For industries like financial services and insurance, where decisions made by AI carry legal or financial impact, model assessment is essential to meet audit and governance mandates. A clear AI validation roadmap helps prevent costly compliance lapses.

2. Protects Customer Experience and Brand Trust

In consumer products and services, as well as eCommerce, faulty or biased AI can erode user confidence. Regular AI reliability assessment helps ensure that models are accurate, consistent, and safe across demographics and contexts.
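One way to check whether a model is equally accurate across demographics is to compute accuracy separately for each group and report the largest gap between groups. This is a minimal sketch: the group labels and data are hypothetical, and an accuracy gap is only one of several fairness measures (others compare positive-prediction rates or error rates between groups).

```python
from collections import defaultdict
from collections.abc import Hashable, Sequence


def per_group_accuracy(
    y_true: Sequence[int], y_pred: Sequence[int], groups: Sequence[Hashable]
) -> dict[Hashable, float]:
    """Accuracy computed separately for each group label."""
    if not (len(y_true) == len(y_pred) == len(groups)):
        raise ValueError("inputs must have the same length")
    correct: dict[Hashable, int] = defaultdict(int)
    total: dict[Hashable, int] = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}


def max_accuracy_gap(accuracies: dict[Hashable, float]) -> float:
    """Largest accuracy difference between any two groups; 0.0 means equal accuracy."""
    return max(accuracies.values()) - min(accuracies.values())


if __name__ == "__main__":
    y_true = [1, 0, 1, 0, 1, 0]
    y_pred = [1, 0, 1, 1, 0, 0]
    groups = ["a", "a", "a", "b", "b", "b"]  # hypothetical demographic labels
    acc = per_group_accuracy(y_true, y_pred, groups)
    print(acc, max_accuracy_gap(acc))
```

A model can look strong in aggregate while performing much worse for one group, which is exactly what the per-group breakdown is meant to surface.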

3. Improves Responsible AI Maturity

Higher education institutions and public sector projects increasingly rely on AI in admissions, research, and student services. Here, a structured AI algorithm evaluation process supports transparency, accountability, and academic integrity.

4. Optimizes Model Performance at Scale

Assessment helps ensure that models deployed in high-volume or high-frequency environments (such as trading, fraud detection, or dynamic pricing) maintain performance as data conditions change. This is crucial in the finance and consumer products and services industries.
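A widely used check for changing data conditions is the Population Stability Index (PSI), which compares the binned distribution of a feature or model score in production against a baseline: PSI = Σ (a_i − e_i) · ln(a_i / e_i), where e_i and a_i are the baseline and current shares of bin i. Commonly cited rules of thumb treat PSI below 0.1 as stable and above 0.25 as a significant shift, but these are conventions rather than standards. The bin edges and the small `eps` floor for empty bins are assumptions of this sketch.

```python
import bisect
import math
from collections.abc import Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    edges: Sequence[float],
    eps: float = 1e-6,
) -> float:
    """PSI of `current` against `baseline`, binned on sorted interior bin edges."""
    if not baseline or not current:
        raise ValueError("both samples must be non-empty")
    n_bins = len(edges) + 1

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * n_bins
        for v in values:
            # bisect_right maps v to a bin index in 0..len(edges)
            counts[bisect.bisect_right(edges, v)] += 1
        # Floor empty bins at eps so log(a / e) stays finite
        return [max(c / len(values), eps) for c in counts]

    expected = proportions(baseline)  # e_i: baseline share of each bin
    actual = proportions(current)     # a_i: current share of each bin
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


if __name__ == "__main__":
    edges = [0.25, 0.5, 0.75]  # hypothetical bin boundaries
    baseline = [0.1, 0.3, 0.6, 0.9, 0.2, 0.4, 0.7, 0.8]
    shifted = [0.6, 0.7, 0.8, 0.9, 0.65, 0.85, 0.95, 0.3]
    print(population_stability_index(baseline, baseline, edges))  # 0.0: no shift
    print(population_stability_index(baseline, shifted, edges))
```

In production, the baseline would typically be the training or validation data, and the check would run on each new batch of scored inputs.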

5. Enables Scalable Governance

Whether through manual review or automated pipelines, AI model governance is strengthened when models are assessed at every stage, from sandbox to deployment. This supports cross-functional collaboration between tech, risk, and compliance teams.

Real-World Examples

AI model oversight is no longer a future consideration; it’s a current enterprise priority. As a result, organizations across industries are investing in structured, repeatable model assessments to balance performance with responsibility.

Here are three real-world examples:

Google’s PAIR Initiative (People + AI Research)

Focuses on designing tools for interpretability and fairness, helping teams conduct transparent AI model evaluation across various applications.

JPMorgan Chase AI Validation Team

Built a dedicated internal unit to validate AI models, particularly trading algorithms, loan risk models, and fraud detection systems.

IBM Watson OpenScale

Offers cloud-based AI model assessment services with real-time drift detection, bias alerts, and governance dashboards for enterprise clients.

What Lies Ahead

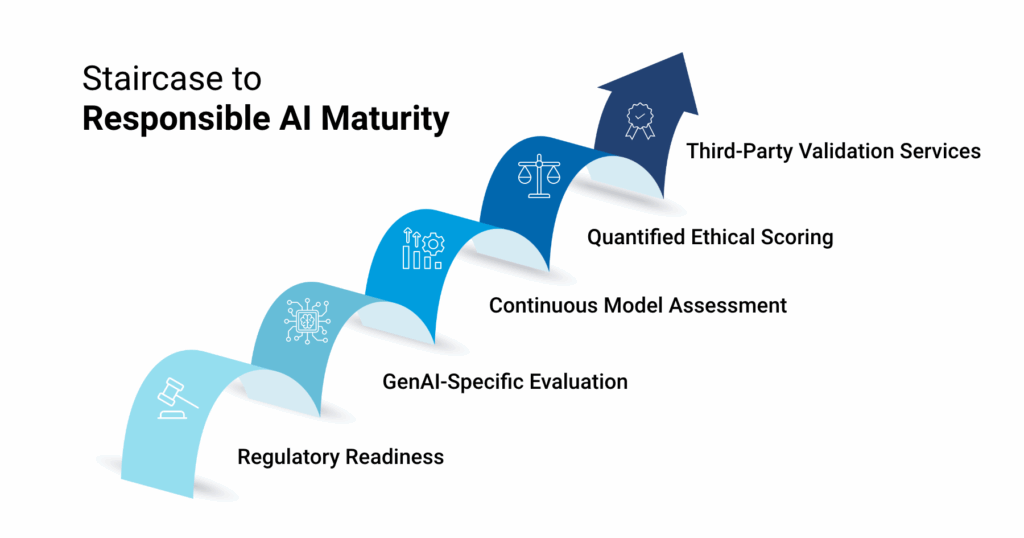

1. Model Assessment Will Become Mandatory

As regulations tighten, model validation will shift from optional to required. Enterprises in finance and insurance should begin developing formalized AI validation roadmaps now to stay ahead of compliance mandates.

2. Generative AI Will Require New Testing Paradigms

Current model evaluation frameworks are ill-equipped for generative systems. Expect new tools and metrics specifically for Generative AI model assessment services, particularly in the eCommerce and consumer product domains where hallucination risks affect UX and safety.

3. Continuous Assessment Will Replace One-Time Checks

In sectors like higher education and public sector services, where models evolve continuously, assessments will be automated and ongoing, embedded within CI/CD and MLOps pipelines.
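A continuous-assessment step in a CI/CD or MLOps pipeline can be as simple as comparing the latest evaluation metrics against agreed thresholds and failing the run when any are breached. The metric names and threshold values below are hypothetical placeholders; real values would come from an organization's own risk and governance requirements.

```python
from collections.abc import Mapping

# Hypothetical thresholds: each metric maps to ("min" or "max", bound)
THRESHOLDS: dict[str, tuple[str, float]] = {
    "f1": ("min", 0.80),
    "max_accuracy_gap": ("max", 0.05),
    "psi": ("max", 0.25),
}


def gate_failures(
    metrics: Mapping[str, float],
    thresholds: Mapping[str, tuple[str, float]] = THRESHOLDS,
) -> list[str]:
    """Return a human-readable message for every threshold the metrics violate."""
    failures = []
    for name, (kind, bound) in thresholds.items():
        if name not in metrics:
            # Treat a missing metric as a failure so gaps in evaluation are not silently ignored
            failures.append(f"{name}: missing")
            continue
        value = metrics[name]
        if kind == "min" and value < bound:
            failures.append(f"{name}={value:.3f} below minimum {bound}")
        elif kind == "max" and value > bound:
            failures.append(f"{name}={value:.3f} above maximum {bound}")
    return failures


if __name__ == "__main__":
    # In a real pipeline these values would come from the evaluation step
    latest = {"f1": 0.84, "max_accuracy_gap": 0.07, "psi": 0.12}
    for problem in gate_failures(latest):
        print("FAIL", problem)
```

In a pipeline, the job would exit with a non-zero status whenever `gate_failures` returns a non-empty list, so a degraded model is blocked before deployment rather than discovered afterward.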

4. Ethical AI Will Be Quantified

As responsible AI assessment matures, expect scorecards that evaluate fairness, transparency, and user impact. Platforms like FD Ryze are already enabling this by embedding explainability and risk evaluation within model development pipelines, a capability that is especially critical in regulated sectors such as financial services and higher education.

5. Assessment-as-a-Service Will Expand

Enterprises will increasingly turn to third-party AI/ML assessment services and commercial validation consulting firms to ensure model quality across hybrid or federated environments, especially in global, cloud-first ecosystems.

Related Terms

- AI Validation Roadmap

- AI Governance

- AI Explainability

- Model Drift Detection

- ML Model Testing

- AI Ethics and Audit