An FAQ on Agent-Initiated Transactions, Risk Evolution, and Real-Time Infrastructure

As AI agents become more capable of acting on our behalf, the financial services industry is facing a subtle but meaningful shift. In May 2025, Visa enabled agent-initiated payments through its Intelligent Commerce platform, a signal that software, not just people, will soon be transacting independently. But the shift goes well beyond shopping bots.

For CTOs, compliance leaders, and digital transformation teams across banking and fintech, the question is no longer “When will this happen?” but “How do we prepare?”

This FAQ addresses the real questions financial institutions should be asking—from risk and readiness to trust, support, and infrastructure—as agent-initiated payments move from theory to implementation.

Q1. What exactly has Visa announced and why does it matter?

In May 2025, Visa launched its Intelligent Commerce platform, an infrastructure update that allows AI agents to securely initiate and complete transactions on behalf of users. The move positions Visa among the first major card networks to formally recognize non-human actors as part of the payment ecosystem.

While the term “agent-initiated payment” may sound technical, its implications are simple but profound: software can now act on user instructions to spend, not just suggest. This changes how financial platforms authenticate, authorize, and track transactions.

More importantly, it reflects a broader shift in digital business, where human decision-making is increasingly abstracted into software logic.

Q2. What does agent-initiated payment actually mean in practice?

It means an autonomous AI—such as a personal assistant, procurement bot, or embedded financial tool—can now trigger and complete a transaction without direct user input at the moment of purchase. The user defines rules upfront (e.g., “Refill my cloud credits weekly” or “Buy tickets if price drops below $200”), and the agent acts accordingly.
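To make the idea of upfront rules concrete, the sketch below shows how a standing instruction like "Buy tickets if price drops below $200" might be represented and checked before an agent commits to a purchase. It is a minimal illustration under stated assumptions: the `StandingInstruction` class, its fields, and `should_purchase` are hypothetical names, not part of Visa's platform or any agent framework.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class StandingInstruction:
    """Hypothetical user-defined rule an agent evaluates before buying."""
    item: str
    max_price: Decimal   # buy only if the observed price is strictly below this
    max_quantity: int    # cap on units per purchase
    active: bool = True  # the user can switch the rule off at any time


def should_purchase(rule: StandingInstruction, item: str, price: Decimal, quantity: int) -> bool:
    """Return True only if every condition the user set upfront is satisfied."""
    return (
        rule.active
        and item == rule.item
        and price < rule.max_price
        and 0 < quantity <= rule.max_quantity
    )


rule = StandingInstruction(item="concert-ticket", max_price=Decimal("200"), max_quantity=2)
print(should_purchase(rule, "concert-ticket", Decimal("185.00"), 2))  # True: below threshold
print(should_purchase(rule, "concert-ticket", Decimal("215.00"), 2))  # False: price too high
```

Prices are held as `Decimal` rather than `float` so threshold comparisons are not affected by binary rounding.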

This isn’t theoretical. Startups like Mindverse and established players like OpenAI (Operator) are already building agents capable of planning and executing multi-step tasks. Visa’s move gives them the ability to plug into payments, making the leap from recommendation to execution.

Q3. Does this introduce new fraud or compliance risks?

It introduces new contexts, not necessarily more risk. But those contexts challenge how fraud and compliance frameworks currently operate.

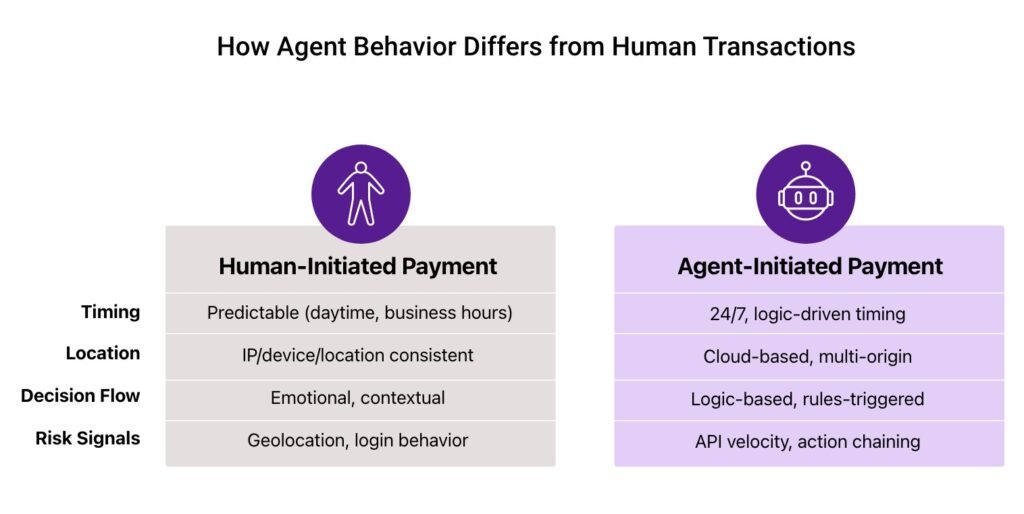

On the fraud side: Most systems today are optimized to catch anomalies in human behavior: unusual login locations, device switches, or transaction timing. But AI agents don’t behave like humans. They may act at machine speed, operate across distributed cloud environments, or make decisions that follow a logical path but appear erratic to current models.

This means risk engines must evolve from anomaly detection to behavior interpretation, factoring in agent patterns, task logic, and frequency signals instead of just flagging what looks “unusual.”
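As a rough illustration of that difference, the hypothetical check below does not flag an agent simply for acting fast; it flags a request only when it is inconsistent with the task the agent declared. The `AgentContext` fields (`declared_task`, `expected_max_per_hour`, `allowed_merchants`) are assumed metadata, not an existing network standard, and a production risk engine would combine signals like these with statistical models rather than rely on two rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentContext:
    """Hypothetical metadata an agent attaches to each payment request."""
    agent_id: str
    declared_task: str                 # e.g. "refill-cloud-credits"
    expected_max_per_hour: float       # request rate the declared task plausibly needs
    allowed_merchants: frozenset[str]  # merchants consistent with the declared task


def interpret_request(ctx: AgentContext, merchant: str, requests_last_hour: int) -> list[str]:
    """Return reasons a request is inconsistent with the agent's declared task.

    Speed alone is not flagged: a high request rate only counts against the
    agent when it exceeds what the declared task would plausibly require.
    """
    reasons = []
    if merchant not in ctx.allowed_merchants:
        reasons.append(f"merchant {merchant!r} does not fit task {ctx.declared_task!r}")
    if requests_last_hour > ctx.expected_max_per_hour:
        reasons.append(
            f"{requests_last_hour} requests/hour exceeds the expected "
            f"{ctx.expected_max_per_hour} for this task"
        )
    return reasons


ctx = AgentContext(
    agent_id="cloud-bot-01",
    declared_task="refill-cloud-credits",
    expected_max_per_hour=1.0,
    allowed_merchants=frozenset({"cloud-provider"}),
)
print(interpret_request(ctx, "cloud-provider", 1))      # []
print(interpret_request(ctx, "electronics-store", 10))  # two reasons: wrong merchant, excess rate
```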

On the compliance side: Agent-initiated payments raise questions around KYC, consent, and authorization trail integrity. If an agent makes a purchase, who is the transacting party? What does consent look like when it’s delegated? And how do regulators audit an AI’s decision chain?

The answer isn’t to block agents. It’s to build transparent delegation frameworks and update audit readiness processes. Smart fintechs will treat this as a chance to rethink consent infrastructure, design agent permission logs, and offer programmable spending policies as a value-added service.

Q4. What steps should financial institutions take now to prepare for agent-initiated payments?

Agent-initiated payments aren’t just a technical update; they signal a deeper need for infrastructure that can support machine-led transactions with speed, security, and clarity.

Financial institutions looking to stay ahead should start with a few key priorities:

- Build agent-aware APIs: Accept agent identity signals, scopes, and permissions as part of your payment authorization flow.

- Update behavioral risk models: Include metadata like agent intent, logic paths, and request velocity.

- Design programmable controls: Offer users ways to define transaction rules, limits, and override options for their agents.

- Invest in real-time infrastructure: Legacy fraud checks that run in batches may miss agent-based behavior altogether, because an agent can complete many transactions between batch runs.
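The first and third priorities can be sketched together. The example below is a minimal, hypothetical authorization step that reads the scopes an agent presents alongside user-defined controls and returns approve, decline, or step-up (ask the human to confirm). The scope string `payments:purchase`, the `UserControls` fields, and the three-way decision are illustrative assumptions, not a card-network API.

```python
from dataclasses import dataclass
from decimal import Decimal
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    DECLINE = "decline"
    STEP_UP = "step_up"  # pause and ask the human user to confirm


@dataclass(frozen=True)
class AgentPaymentRequest:
    agent_id: str
    scopes: frozenset[str]  # permissions the agent presents (hypothetical names)
    amount: Decimal
    merchant_category: str


@dataclass(frozen=True)
class UserControls:
    """Hypothetical rules a user sets for one agent."""
    per_transaction_limit: Decimal
    confirm_above: Decimal  # amounts above this need explicit user approval
    allowed_categories: frozenset[str]
    paused: bool = False    # user-level override that halts the agent


def authorize(req: AgentPaymentRequest, controls: UserControls) -> Decision:
    # Hard stops first: paused agent, missing scope, disallowed category, over limit.
    if controls.paused:
        return Decision.DECLINE
    if "payments:purchase" not in req.scopes:
        return Decision.DECLINE
    if req.merchant_category not in controls.allowed_categories:
        return Decision.DECLINE
    if req.amount > controls.per_transaction_limit:
        return Decision.DECLINE
    # Within limits, but large enough that the user wants to confirm it.
    if req.amount > controls.confirm_above:
        return Decision.STEP_UP
    return Decision.APPROVE


controls = UserControls(
    per_transaction_limit=Decimal("500"),
    confirm_above=Decimal("200"),
    allowed_categories=frozenset({"travel"}),
)
scopes = frozenset({"payments:purchase"})
print(authorize(AgentPaymentRequest("travel-bot-01", scopes, Decimal("150"), "travel"), controls).value)  # approve
print(authorize(AgentPaymentRequest("travel-bot-01", scopes, Decimal("300"), "travel"), controls).value)  # step_up
```

Checking hard stops before the step-up rule means a user's pause switch or category restriction always wins over a confirmation prompt.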

This is where consulting and services in digital finance are heading: building platforms that serve not only humans but also the software they delegate to.

Q5. How do we certify or verify third-party agents accessing our systems?

As agent-initiated payments become more common, financial institutions will increasingly face requests from third-party AI agents, not just those built in-house or by partners. That raises a new challenge: how do you know which agents to trust?

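Traditional user authentication methods, such as passwords, one-time codes, and device or behavioral biometrics, were designed for people and don’t map cleanly onto software agents. Instead, institutions will need to establish agent-specific onboarding and verification layers, including: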

- Agent identity validation: Capturing metadata such as the agent’s origin, model type, and delegation scope.

- Certification or registration frameworks: Especially for agents operating across sensitive workflows (e.g., finance, procurement, compliance).

- Granular permissioning protocols: Similar to OAuth scopes, defining what an agent is allowed to do and under what conditions.
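One way to bind an agent's registered metadata to its requests is a signed credential issued at onboarding. The sketch below assumes the institution runs its own agent registry and signs each agent's metadata (origin, model type, delegation scope) with an HMAC-SHA256 key it keeps private; `issue_credential`, `verify_credential`, and `REGISTRY_KEY` are hypothetical names. A registry shared across institutions would more likely use asymmetric signatures, so that verifiers never hold the signing key.

```python
import hashlib
import hmac
import json

# Hypothetical signing key; in production it would come from a key-management service.
REGISTRY_KEY = b"replace-with-a-secret-from-a-key-vault"


def _canonical(metadata: dict) -> bytes:
    # Stable serialization so the same metadata always yields the same signature.
    return json.dumps(metadata, sort_keys=True, separators=(",", ":")).encode()


def issue_credential(metadata: dict) -> dict:
    """Sign an agent's registered metadata (origin, model type, delegation scope)."""
    signature = hmac.new(REGISTRY_KEY, _canonical(metadata), hashlib.sha256).hexdigest()
    return {"metadata": metadata, "signature": signature}


def verify_credential(credential: dict, required_scope: str) -> bool:
    """Accept the agent only if its metadata is unaltered and covers the needed scope."""
    metadata = credential.get("metadata", {})
    expected = hmac.new(REGISTRY_KEY, _canonical(metadata), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, credential.get("signature", "")):
        return False  # tampered with, or never issued by this registry
    return required_scope in metadata.get("delegation_scope", [])


cred = issue_credential({
    "agent_id": "travel-bot-01",
    "origin": "example-vendor",
    "model_type": "llm-planner",
    "delegation_scope": ["payments:purchase"],
})
print(verify_credential(cred, "payments:purchase"))  # True

# An agent that widens its own scope invalidates the signature.
forged = {
    "metadata": {**cred["metadata"], "delegation_scope": ["payments:purchase", "payments:refund"]},
    "signature": cred["signature"],
}
print(verify_credential(forged, "payments:refund"))  # False
```

`hmac.compare_digest` is used instead of `==` so the comparison time does not leak how many leading characters of a guessed signature were correct.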

This isn’t just a security layer; it’s part of building a trust framework for software actors, which could become a new standard in consulting and services for financial infrastructure.

Q6. Who’s responsible if something goes wrong: the user, the agent, or the platform?

Legally, the lines are still being drawn. For now, liability generally rests with the end user who authorizes the agent. But as agents become more autonomous and decision-capable, that model becomes harder to defend, both legally and reputationally.

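What happens if an agent makes a high-risk purchase based on ambiguous logic? Or if its decisions were influenced by adversarial inputs, such as manipulated product listings or injected instructions? In those cases, it becomes hard to argue that the blame should fall entirely on the user.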

This creates new expectations for financial institutions to build:

- Permissioning frameworks that define what agents are allowed to do

- Delegation logs that trace who (or what) made the call and why

- Fail-safes and overrides for when agent behavior deviates from norms
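A fail-safe can be as simple as a circuit breaker that halts an agent once its behavior leaves agreed bounds. The hypothetical `AgentCircuitBreaker` below uses one such bound, total spend within a rolling time window, as a stand-in for "deviates from norms"; once tripped, it blocks every further payment until a human calls `reset()`, which serves as the override. The class, its parameters, and the single-signal trigger are assumptions for illustration.

```python
from collections import deque
from decimal import Decimal


class AgentCircuitBreaker:
    """Hypothetical fail-safe that halts an agent when rolling spend exceeds a cap.

    Once tripped, every further payment is blocked until a human calls reset().
    """

    def __init__(self, window_seconds: float, spend_cap: Decimal):
        self.window_seconds = window_seconds
        self.spend_cap = spend_cap
        self._spend = deque()  # (timestamp, amount) pairs still inside the window
        self.tripped = False

    def allow(self, now: float, amount: Decimal) -> bool:
        """Return True if a payment of `amount` at time `now` may proceed."""
        if self.tripped:
            return False
        # Discard spend records that have aged out of the rolling window.
        while self._spend and now - self._spend[0][0] >= self.window_seconds:
            self._spend.popleft()
        window_total = sum((a for _, a in self._spend), Decimal("0"))
        if window_total + amount > self.spend_cap:
            self.tripped = True  # deviation detected: stop the agent, not just this payment
            return False
        self._spend.append((now, amount))
        return True

    def reset(self) -> None:
        """Manual override after human review: re-enable the agent with a clean window."""
        self.tripped = False
        self._spend.clear()


breaker = AgentCircuitBreaker(window_seconds=3600, spend_cap=Decimal("500"))
print(breaker.allow(0, Decimal("200")))   # True
print(breaker.allow(10, Decimal("250")))  # True: 450 spent this hour
print(breaker.allow(20, Decimal("100")))  # False: would reach 550, breaker trips
print(breaker.allow(30, Decimal("5")))    # False: still tripped
```

Tripping the breaker, rather than declining only the payment that breaches the cap, is a deliberate choice in this sketch: an agent that tries to overspend may be misconfigured or compromised, so further activity waits for human review.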

Regulators may eventually push for explainability standards and shared responsibility models. Fintechs that preempt this by offering transparent, agent-aware trust layers won’t just mitigate risk; they’ll differentiate themselves.

Q7. Is agent-initiated payment just a feature or part of a bigger shift in how industries operate?

It’s part of a broader transformation. Agent-initiated payment is one entry point into a larger move toward agentic systems, where software makes decisions and takes action across industries, not just in finance.

We’re already seeing this shift play out:

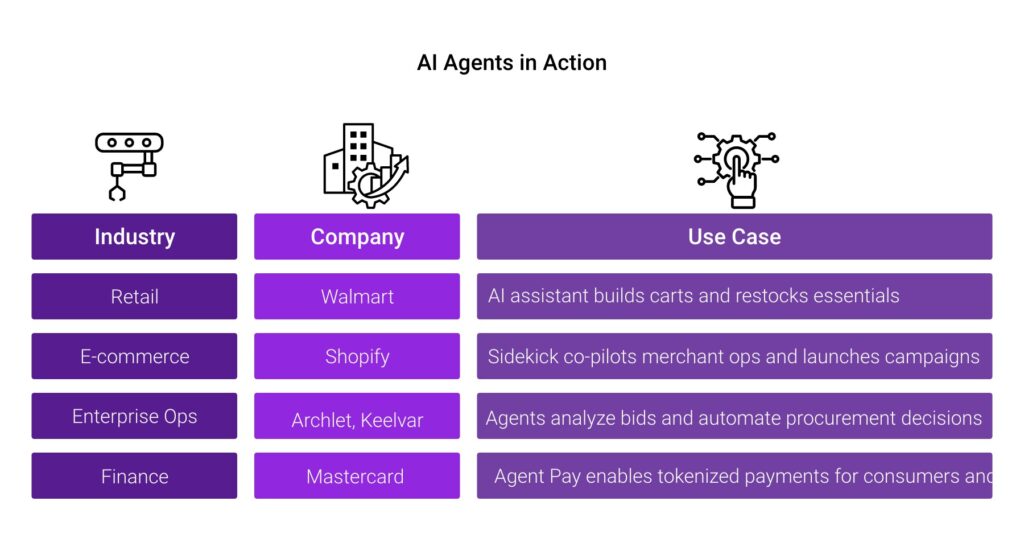

- Retail: Walmart’s AI-powered shopping assistant helps customers build carts, restock essentials, and plan purchases conversationally. It doesn’t just recommend; it executes. This reduces decision fatigue and integrates with loyalty programs and real-time inventory.

- E-commerce: Shopify’s Sidekick acts as a co-pilot for merchants, handling queries like “show me my best-selling products last month” or “launch a 20% discount campaign.” It’s redefining operations by allowing non-technical users to delegate tasks end-to-end, from data analysis to execution.

- Enterprise operations: AI agents are already being used in procurement and supply chain workflows. Companies like Archlet and Keelvar use intelligent agents to analyze supplier bids and trigger recommendations or reorders, saving time and reducing compliance risks.

In financial services as well, Mastercard recently launched Agent Pay, a platform that allows AI agents to make secure payments on behalf of users. It extends tokenization to agent-led transactions and supports both consumer and B2B use cases, from automated shopping to sourcing and payment optimization. The company is also partnering with Microsoft to scale agentic commerce securely.

But payments are just the beginning. Agentic AI is poised to manage everything from budget enforcement to credit utilization and even real-time reconciliation. Institutions that prepare now will be well positioned to become the go-to platforms for a new generation of autonomous decision-makers.

Q8. How will this affect customer experience and support operations?

Agent-initiated payments may simplify tasks for users, but they also introduce new complexity behind the scenes, especially when transactions happen without direct user involvement.

Customers might not always expect or understand a purchase made by their AI assistant. That means support teams need to be ready to handle:

- Questions like “Why did my agent buy this?”

- Disputes over authorized vs. delegated actions

- Requests to pause, reverse, or update agent behavior

To stay ahead, institutions should:

- Build explainable transaction logs that clarify when, how, and why an agent acted

- Offer agent management dashboards within customer portals

- Train CX teams to handle AI-related queries, much like how chatbot escalations are handled today
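For the first of these, a delegation log can record each agent action together with the rule that triggered it and a plain-language reason, and a support tool can render that record as an answer to "Why did my agent buy this?". The sketch below is a hypothetical design: `DelegationLog` chains each entry to the previous one with SHA-256 so that editing or reordering earlier records is detectable, and `explain` formats an entry for a CX agent or customer portal. Field names are assumptions, not a regulatory schema.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


@dataclass
class DelegationLog:
    """Hypothetical append-only log of agent actions, hash-chained for tamper evidence."""
    entries: list = field(default_factory=list)

    def append(self, *, user: str, agent: str, action: str, amount: str,
               rule: str, reason: str, ts: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"ts": ts, "user": user, "agent": agent, "action": action,
                "amount": amount, "rule": rule, "reason": reason, "prev_hash": prev_hash}
        body["hash"] = _digest(body)  # hash covers every field above, including prev_hash
        self.entries.append(body)
        return body

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


def explain(entry: dict) -> str:
    """Render a support-friendly answer to "Why did my agent buy this?"."""
    return (f"On {entry['ts']}, agent {entry['agent']} performed '{entry['action']}' "
            f"for {entry['amount']} on behalf of {entry['user']} under rule "
            f"'{entry['rule']}': {entry['reason']}.")


log = DelegationLog()
entry = log.append(
    user="user-123", agent="travel-bot-01", action="purchase concert ticket",
    amount="USD 185.00", rule="Buy tickets if price drops below $200",
    reason="observed price 185.00 was below the 200.00 threshold",
    ts="2025-06-01T09:30:00Z",
)
print(explain(entry))
print(log.verify())                     # True
log.entries[0]["amount"] = "USD 18.50"  # simulate an after-the-fact edit
print(log.verify())                     # False
```

A hash chain is only tamper-evident if the latest hash is also stored somewhere the log's writer cannot rewrite, such as a separate audit system; otherwise someone could recompute the whole chain.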

This is part of reimagining the customer journey when your customer isn’t always the one clicking “Buy.”

Final Thoughts

The shift toward agent-driven transactions isn’t waiting for full regulatory clarity or industry consensus. It’s already underway. For fintech leaders, the real opportunity lies in building systems that aren’t just compliant, but confidently interoperable with the next generation of digital decision-makers. Treat agents not as edge cases, but as early indicators of where your infrastructure needs to go.

Platforms like FD Ryze are already built with this future in mind: modular, agent-ready, and designed to help you evolve before the market demands it. Have you had a chance to see how FD Ryze agents are powering agent-native financial workflows and intelligent automation? Book a demo today and see it in action.